Case Study Observational Research: A Framework for Conducting Case Study Research Where Observation Data Are the Focus

Affiliation: University of Otago, Wellington, New Zealand.

- PMID: 27217290

- DOI: 10.1177/1049732316649160

Case study research is a comprehensive method that incorporates multiple sources of data to provide detailed accounts of complex research phenomena in real-life contexts. However, current models of case study research do not particularly distinguish the unique contribution observation data can make. Observation methods have the potential to reach beyond other methods that rely largely or solely on self-report. This article describes the distinctive characteristics of case study observational research, a modified form of Yin's 2014 model of case study research the authors used in a study exploring interprofessional collaboration in primary care. In this approach, observation data are positioned as the central component of the research design. Case study observational research offers a promising approach for researchers in a wide range of health care settings seeking more complete understandings of complex topics, where contextual influences are of primary concern. Future research is needed to refine and evaluate the approach.

Keywords: New Zealand; appreciative inquiry; case studies; case study observational research; health care; interprofessional collaboration; naturalistic inquiry; observation; primary health care; qualitative; research design.




Non-Experimental Research

32 Observational Research

Learning objectives.

- List the various types of observational research methods and distinguish between each.

- Describe the strengths and weaknesses of each observational research method.

What Is Observational Research?

The term observational research is used to refer to several different types of non-experimental studies in which behavior is systematically observed and recorded. The goal of observational research is to describe a variable or set of variables. More generally, the goal is to obtain a snapshot of specific characteristics of an individual, group, or setting. As described previously, observational research is non-experimental because nothing is manipulated or controlled, and as such we cannot arrive at causal conclusions using this approach. The data that are collected in observational research studies are often qualitative in nature but they may also be quantitative or both (mixed-methods). There are several different types of observational methods that will be described below.

Naturalistic Observation

Naturalistic observation is an observational method that involves observing people’s behavior in the environment in which it typically occurs. Thus naturalistic observation is a type of field research (as opposed to a type of laboratory research). Jane Goodall’s famous research on chimpanzees is a classic example of naturalistic observation. Dr. Goodall spent three decades observing chimpanzees in their natural environment in East Africa. She examined such things as chimpanzees’ social structure, mating patterns, gender roles, family structure, and care of offspring by observing them in the wild. However, naturalistic observation could more simply involve observing shoppers in a grocery store, children on a school playground, or psychiatric inpatients in their wards. Researchers engaged in naturalistic observation usually make their observations as unobtrusively as possible so that participants are not aware that they are being studied. Such an approach is called disguised naturalistic observation. Ethically, this method is considered acceptable if the participants remain anonymous and the behavior occurs in a public setting where people would not normally have an expectation of privacy. Grocery shoppers putting items into their shopping carts, for example, are engaged in public behavior that is easily observable by store employees and other shoppers. For this reason, most researchers would consider it ethically acceptable to observe them for a study. On the other hand, one of the arguments against the ethicality of the naturalistic observation of “bathroom behavior” discussed earlier in the book is that people have a reasonable expectation of privacy even in a public restroom and that this expectation was violated.

In cases where it is not ethical or practical to conduct disguised naturalistic observation, researchers can conduct undisguised naturalistic observation, in which the participants are made aware of the researcher’s presence and of the monitoring of their behavior. However, one concern with undisguised naturalistic observation is reactivity. Reactivity occurs when the act of measurement changes participants’ behavior. In the case of undisguised naturalistic observation, the concern is that when people know they are being observed and studied, they may act differently than they normally would. This type of reactivity is known as the Hawthorne effect. For instance, you may act much differently in a bar if you know that someone is observing you and recording your behaviors, and this would threaten the validity of the study. So disguised observation is less reactive and therefore can have higher validity because people are not aware that their behaviors are being observed and recorded. However, we now know that people often become used to being observed and with time they begin to behave naturally in the researcher’s presence. In other words, over time people habituate to being observed. Think about reality shows like Big Brother or Survivor where people are constantly being observed and recorded. While they may be on their best behavior at first, in a fairly short amount of time they are flirting, having sex, wearing next to nothing, screaming at each other, and occasionally behaving in ways that are embarrassing.

Participant Observation

Another approach to data collection in observational research is participant observation. In participant observation, researchers become active participants in the group or situation they are studying. Participant observation is very similar to naturalistic observation in that it involves observing people’s behavior in the environment in which it typically occurs. As with naturalistic observation, the data that are collected can include interviews (usually unstructured), notes based on the researchers’ observations and interactions, documents, photographs, and other artifacts. The only difference between naturalistic observation and participant observation is that researchers engaged in participant observation become active members of the group or situation they are studying. The basic rationale for participant observation is that there may be important information that is only accessible to, or can be interpreted only by, someone who is an active participant in the group or situation. Like naturalistic observation, participant observation can be either disguised or undisguised. In disguised participant observation, the researchers pretend to be members of the social group they are observing and conceal their true identity as researchers.

In a famous example of disguised participant observation, Leon Festinger and his colleagues infiltrated a doomsday cult known as the Seekers, whose members believed that the apocalypse would occur on December 21, 1954. Interested in studying how members of the group would cope psychologically when the prophecy inevitably failed, they carefully recorded the events and reactions of the cult members in the days before and after the supposed end of the world. Unsurprisingly, the cult members did not give up their belief but instead convinced themselves that it was their faith and efforts that saved the world from destruction. Festinger and his colleagues later published a book about this experience, which they used to illustrate the theory of cognitive dissonance (Festinger, Riecken, & Schachter, 1956) [1] .

In contrast, with undisguised participant observation, the researchers become a part of the group they are studying and disclose their true identity as researchers to the group under investigation. Once again there are important ethical issues to consider with disguised participant observation. First, no informed consent can be obtained, and second, deception is being used. The researcher is deceiving the participants by intentionally withholding information about their motivations for being a part of the social group they are studying. But sometimes disguised participation is the only way to access a protective group (like a cult). Further, disguised participant observation is less prone to reactivity than undisguised participant observation.

Rosenhan’s study (1973) [2] of the experience of people in a psychiatric ward would be considered disguised participant observation because Rosenhan and his pseudopatients were admitted into psychiatric hospitals on the pretense of being patients so that they could observe the way that psychiatric patients are treated by staff. The staff and other patients were unaware of their true identities as researchers.

Another example of participant observation comes from a study by sociologist Amy Wilkins on a university-based religious organization that emphasized how happy its members were (Wilkins, 2008) [3] . Wilkins spent 12 months attending and participating in the group’s meetings and social events, and she interviewed several group members. In her study, Wilkins identified several ways in which the group “enforced” happiness—for example, by continually talking about happiness, discouraging the expression of negative emotions, and using happiness as a way to distinguish themselves from other groups.

One of the primary benefits of participant observation is that the researchers are in a much better position to understand the viewpoint and experiences of the people they are studying when they are a part of the social group. The primary limitation of this approach is that the mere presence of the observer could affect the behavior of the people being observed. While this is also a concern with naturalistic observation, additional concerns arise when researchers become active members of the social group they are studying because they may change the social dynamics and/or influence the behavior of the people they are studying. Similarly, if the researcher acts as a participant observer, there can be concerns with biases resulting from developing relationships with the participants. Concretely, the researcher may become less objective, resulting in more experimenter bias.

Structured Observation

Another observational method is structured observation. Here the investigator makes careful observations of one or more specific behaviors in a particular setting that is more structured than the settings used in naturalistic or participant observation. Often the setting in which the observations are made is not the natural setting. Instead, the researcher may observe people in the laboratory environment. Alternatively, the researcher may observe people in a natural setting (like a classroom) that they have structured in some way, for instance by introducing a specific task for participants to engage in or by introducing a specific social situation or manipulation.

Structured observation is very similar to naturalistic observation and participant observation in that in all three cases researchers are observing naturally occurring behavior; however, the emphasis in structured observation is on gathering quantitative rather than qualitative data. Researchers using this approach are interested in a limited set of behaviors. This allows them to quantify the behaviors they are observing. In other words, structured observation is less global than naturalistic or participant observation because the researcher engaged in structured observations is interested in a small number of specific behaviors. Therefore, rather than recording everything that happens, the researcher only focuses on very specific behaviors of interest.

Researchers Robert Levine and Ara Norenzayan used structured observation to study differences in the “pace of life” across countries (Levine & Norenzayan, 1999) [4] . One of their measures involved observing pedestrians in a large city to see how long it took them to walk 60 feet. They found that people in some countries walked reliably faster than people in other countries. For example, people in Canada and Sweden covered 60 feet in just under 13 seconds on average, while people in Brazil and Romania took close to 17 seconds. When structured observation takes place in the complex and even chaotic “real world,” the questions of when, where, and under what conditions the observations will be made, and who exactly will be observed are important to consider. Levine and Norenzayan described their sampling process as follows:

“Male and female walking speed over a distance of 60 feet was measured in at least two locations in main downtown areas in each city. Measurements were taken during main business hours on clear summer days. All locations were flat, unobstructed, had broad sidewalks, and were sufficiently uncrowded to allow pedestrians to move at potentially maximum speeds. To control for the effects of socializing, only pedestrians walking alone were used. Children, individuals with obvious physical handicaps, and window-shoppers were not timed. Thirty-five men and 35 women were timed in most cities.” (p. 186).

Precise specification of the sampling process in this way makes data collection manageable for the observers, and it also provides some control over important extraneous variables. For example, by making their observations on clear summer days in all countries, Levine and Norenzayan controlled for effects of the weather on people’s walking speeds. In Levine and Norenzayan’s study, measurement was relatively straightforward. They simply measured out a 60-foot distance along a city sidewalk and then used a stopwatch to time participants as they walked over that distance.
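The sampling rules and the stopwatch measurement described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Pedestrian` record, its field names, and the observations are hypothetical and are not data from Levine and Norenzayan’s study, and only a subset of the exclusion rules is modeled.

```python
from dataclasses import dataclass
from statistics import mean

DISTANCE_FT = 60  # length of the measured stretch of sidewalk, as in the text


@dataclass
class Pedestrian:
    """One timed observation (hypothetical record layout)."""
    seconds: float                 # stopwatch time over the 60-foot stretch
    walking_alone: bool            # only solitary walkers are eligible
    is_child: bool = False
    window_shopping: bool = False


def eligible(p: Pedestrian) -> bool:
    """Apply an illustrative subset of the exclusion rules quoted above."""
    return p.walking_alone and not p.is_child and not p.window_shopping


def mean_speed_ft_per_s(sample: list[Pedestrian]) -> float:
    """Speed implied by the mean crossing time of eligible pedestrians."""
    times = [p.seconds for p in sample if eligible(p)]
    if not times:
        raise ValueError("no eligible pedestrians in sample")
    return DISTANCE_FT / mean(times)


# Made-up observations for illustration only.
observed = [
    Pedestrian(12.0, walking_alone=True),
    Pedestrian(14.0, walking_alone=True),
    Pedestrian(20.0, walking_alone=False),                       # excluded: not alone
    Pedestrian(25.0, walking_alone=True, window_shopping=True),  # excluded
]

print(round(mean_speed_ft_per_s(observed), 2))  # 60 ft / 13 s mean time
```

Applying the exclusion rules before averaging is what keeps socializing and browsing from inflating the measured times. For comparison, the averages reported in the text (about 13 and 17 seconds) correspond to roughly 4.6 and 3.5 feet per second.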

As another example, researchers Robert Kraut and Robert Johnston wanted to study bowlers’ reactions to their shots, both when they were facing the pins and then when they turned toward their companions (Kraut & Johnston, 1979) [5] . But what “reactions” should they observe? Based on previous research and their own pilot testing, Kraut and Johnston created a list of reactions that included “closed smile,” “open smile,” “laugh,” “neutral face,” “look down,” “look away,” and “face cover” (covering one’s face with one’s hands). The observers committed this list to memory and then practiced by coding the reactions of bowlers who had been videotaped. During the actual study, the observers spoke into an audio recorder, describing the reactions they observed. Among the most interesting results of this study was that bowlers rarely smiled while they still faced the pins. They were much more likely to smile after they turned toward their companions, suggesting that smiling is not purely an expression of happiness but also a form of social communication.

In yet another example (this one in a laboratory environment), Dov Cohen and his colleagues had observers rate the emotional reactions of participants who had just been deliberately bumped and insulted by a confederate after they dropped off a completed questionnaire at the end of a hallway. The confederate was posing as someone who worked in the same building and who was frustrated by having to close a file drawer twice in order to permit the participants to walk past them (first to drop off the questionnaire at the end of the hallway and once again on their way back to the room where they believed the study they signed up for was taking place). The two observers were positioned at different ends of the hallway so that they could read the participants’ body language and hear anything they might say. Interestingly, the researchers hypothesized that participants from the southern United States, which is one of several places in the world that has a “culture of honor,” would react with more aggression than participants from the northern United States, a prediction that was in fact supported by the observational data (Cohen, Nisbett, Bowdle, & Schwarz, 1996) [6] .

When the observations require a judgment on the part of the observers, as in the studies by Kraut and Johnston and by Cohen and his colleagues, a process referred to as coding is typically required. Coding generally requires clearly defining a set of target behaviors. The observers then categorize participants individually in terms of which behavior they have engaged in and the number of times they engaged in each behavior. The observers might even record the duration of each behavior. The target behaviors must be defined in a way that guides different observers to code them in the same way. This difficulty with coding illustrates the issue of interrater reliability, as mentioned in Chapter 4. Researchers are expected to demonstrate the interrater reliability of their coding procedure by having multiple raters code the same behaviors independently and then showing that the different observers are in close agreement. Kraut and Johnston, for example, video recorded a subset of their participants’ reactions and had two observers independently code them. The two observers agreed on the reactions that were exhibited 97% of the time, indicating good interrater reliability.
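To make the agreement check concrete, the sketch below computes simple percent agreement between two coders, which is the kind of figure reported above. It also computes Cohen’s kappa, a commonly used statistic that corrects for chance agreement; kappa is added here for illustration and is not something the text attributes to Kraut and Johnston. The codes assigned to the ten reactions are invented, and the function names are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Proportion of items that both raters coded identically."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Agreement between two raters, corrected for chance agreement."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same category
    # if each assigned codes independently at their own base rates.
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_chance == 1:
        return 1.0  # both raters used one identical category throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Invented codes for ten videotaped reactions (illustration only).
coder_1 = ["open smile", "closed smile", "neutral face", "laugh", "neutral face",
           "look down", "open smile", "neutral face", "look away", "closed smile"]
coder_2 = ["open smile", "closed smile", "neutral face", "laugh", "neutral face",
           "look down", "open smile", "neutral face", "look away", "neutral face"]

print(percent_agreement(coder_1, coder_2))       # 9 of 10 codes match
print(round(cohens_kappa(coder_1, coder_2), 2))
```

Percent agreement is easy to interpret but can look high when one category dominates, which is why a chance-corrected statistic is often reported alongside it.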

One of the primary benefits of structured observation is that it is far more efficient than naturalistic and participant observation. Because the researchers focus on specific behaviors, time and expense are reduced. Also, the environment is often structured to encourage the behaviors of interest, which again means that researchers do not have to invest as much time waiting for those behaviors to occur naturally. Finally, researchers using this approach can exert greater control over the environment. However, when researchers exert more control over the environment, it may become less natural, which decreases external validity. It is less clear, for instance, whether structured observations made in a laboratory environment will generalize to a real-world environment. Furthermore, since researchers engaged in structured observation are often not disguised, there may be more concerns with reactivity.

Case Studies

A case study is an in-depth examination of an individual. Sometimes case studies are also completed on social units (e.g., a cult) and events (e.g., a natural disaster). Most commonly in psychology, however, case studies provide a detailed description and analysis of an individual. Often the individual has a rare or unusual condition or disorder or has damage to a specific region of the brain.

Like many observational research methods, case studies tend to be more qualitative in nature. Case study methods involve an in-depth, and often longitudinal, examination of an individual. Depending on the focus of the case study, individuals may or may not be observed in their natural setting. If the natural setting is not what is of interest, then the individual may be brought into a therapist’s office or a researcher’s lab for study. Also, the bulk of the case study report will focus on in-depth descriptions of the person rather than on statistical analyses. That said, some quantitative data may also be included in the write-up of a case study. For instance, an individual’s depression score may be compared to normative scores, or their scores before and after treatment may be compared. As with other qualitative methods, a variety of different methods and tools can be used to collect information on the case. For instance, interviews, naturalistic observation, structured observation, psychological testing (e.g., IQ test), and/or physiological measurements (e.g., brain scans) may be used to collect information on the individual.

HM is one of the most famous case studies in psychology. HM suffered from intractable and very severe epilepsy. A surgeon localized HM’s epilepsy to his medial temporal lobe and in 1953 removed large sections of HM’s hippocampus in an attempt to stop the seizures. The treatment was a success in that it resolved his epilepsy, and his IQ and personality were unaffected. However, the doctors soon realized that HM exhibited a strange form of amnesia, called anterograde amnesia. HM was able to carry on a conversation and he could remember short strings of letters, digits, and words. Basically, his short-term memory was preserved. However, HM could not commit new events to memory. He lost the ability to transfer information from his short-term memory to his long-term memory, something memory researchers call consolidation. So while he could carry on a conversation with someone, he would completely forget the conversation after it ended. This was an extremely important case study for memory researchers because it suggested that there is a dissociation between short-term memory and long-term memory: the two appear to be different abilities subserved by different areas of the brain. It also suggested that the temporal lobes are particularly important for consolidating new information (i.e., for transferring information from short-term memory to long-term memory).

The history of psychology is filled with influential case studies, such as Sigmund Freud’s description of “Anna O.” (see Note 6.1 “The Case of “Anna O.””) and John Watson and Rosalie Rayner’s description of Little Albert (Watson & Rayner, 1920) [7], who allegedly learned to fear a white rat, along with other furry objects, when the researchers repeatedly made a loud noise every time the rat approached him.

The Case of “Anna O.”

Sigmund Freud used the case of a young woman he called “Anna O.” to illustrate many principles of his theory of psychoanalysis (Freud, 1961) [8] . (Her real name was Bertha Pappenheim, and she was an early feminist who went on to make important contributions to the field of social work.) Anna had come to Freud’s colleague Josef Breuer around 1880 with a variety of odd physical and psychological symptoms. One of them was that for several weeks she was unable to drink any fluids. According to Freud,

She would take up the glass of water that she longed for, but as soon as it touched her lips she would push it away like someone suffering from hydrophobia.…She lived only on fruit, such as melons, etc., so as to lessen her tormenting thirst. (p. 9)

But according to Freud, a breakthrough came one day while Anna was under hypnosis.

[S]he grumbled about her English “lady-companion,” whom she did not care for, and went on to describe, with every sign of disgust, how she had once gone into this lady’s room and how her little dog—horrid creature!—had drunk out of a glass there. The patient had said nothing, as she had wanted to be polite. After giving further energetic expression to the anger she had held back, she asked for something to drink, drank a large quantity of water without any difficulty, and awoke from her hypnosis with the glass at her lips; and thereupon the disturbance vanished, never to return. (p.9)

Freud’s interpretation was that Anna had repressed the memory of this incident along with the emotion that it triggered and that this was what had caused her inability to drink. Furthermore, he believed that her recollection of the incident, along with her expression of the emotion she had repressed, caused the symptom to go away.

As an illustration of Freud’s theory, the case study of Anna O. is quite effective. As evidence for the theory, however, it is essentially worthless. The description provides no way of knowing whether Anna had really repressed the memory of the dog drinking from the glass, whether this repression had caused her inability to drink, or whether recalling this “trauma” relieved the symptom. It is also unclear from this case study how typical or atypical Anna’s experience was.

Case studies are useful because they provide a level of detailed analysis not found in many other research methods, and greater insights may be gained from this more detailed analysis. As a result of the case study, the researcher may gain a sharpened understanding of what might become important to look at more extensively in future, more controlled research. Case studies are also often the only way to study rare conditions because it may be impossible to find a large enough sample of individuals with the condition to use quantitative methods. Although at first glance a case study of a rare individual might seem to tell us little about ourselves, such studies often do provide insights into normal behavior. The case of HM, for example, provided important insights into the role of the hippocampus in memory consolidation.

However, it is important to note that while case studies can provide insights into certain areas and variables to study, and can be useful in helping develop theories, they should never be used as evidence for theories. In other words, case studies can be used as inspiration to formulate theories and hypotheses, but those hypotheses and theories then need to be formally tested using more rigorous quantitative methods. The reason case studies shouldn’t be used to provide support for theories is that they suffer from problems with both internal and external validity. Case studies lack the proper controls that true experiments contain. As such, they suffer from problems with internal validity, so they cannot be used to determine causation. For instance, during HM’s surgery, the surgeon may have accidentally lesioned another area of HM’s brain (a possibility suggested by the dissection of HM’s brain following his death) and that lesion may have contributed to his inability to consolidate new information. The fact is, with case studies we cannot rule out these sorts of alternative explanations. So, as with all observational methods, case studies do not permit determination of causation. In addition, because case studies are often of a single individual, and typically an abnormal individual, researchers cannot generalize their conclusions to other individuals. Recall that with most research designs there is a trade-off between internal and external validity. With case studies, however, there are problems with both internal validity and external validity. So there are limits both to the ability to determine causation and to generalize the results. A final limitation of case studies is that ample opportunity exists for the theoretical biases of the researcher to color or bias the case description. 
Indeed, there have been accusations that a researcher who studied HM destroyed a large amount of unpublished data, and she has been criticized for allegedly destroying data that contradicted her theory about how memories are consolidated. A fascinating New York Times article describing some of the controversies that ensued after HM’s death and the analysis of his brain can be found at: https://www.nytimes.com/2016/08/07/magazine/the-brain-that-couldnt-remember.html?_r=0

Archival Research

Another approach that is often considered observational research involves analyzing archival data that have already been collected for some other purpose. An example is a study by Brett Pelham and his colleagues on “implicit egotism”—the tendency for people to prefer people, places, and things that are similar to themselves (Pelham, Carvallo, & Jones, 2005) [9] . In one study, they examined Social Security records to show that women with the names Virginia, Georgia, Louise, and Florence were especially likely to have moved to the states of Virginia, Georgia, Louisiana, and Florida, respectively.

As with naturalistic observation, measurement can be more or less straightforward when working with archival data. For example, counting the number of people named Virginia who live in various states based on Social Security records is relatively straightforward. But consider a study by Christopher Peterson and his colleagues on the relationship between optimism and health using data that had been collected many years before for a study on adult development (Peterson, Seligman, & Vaillant, 1988) [10]. In the 1940s, healthy male college students had completed an open-ended questionnaire about difficult wartime experiences. In the late 1980s, Peterson and his colleagues reviewed the men’s questionnaire responses to obtain a measure of explanatory style: their habitual ways of explaining bad events that happen to them. More pessimistic people tend to blame themselves and expect long-term negative consequences that affect many aspects of their lives, while more optimistic people tend to blame outside forces and expect limited negative consequences. To obtain a measure of explanatory style for each participant, the researchers used a procedure in which all negative events mentioned in the questionnaire responses, and any causal explanations for them, were identified and written on index cards. These were given to a separate group of raters who rated each explanation in terms of three separate dimensions of optimism-pessimism. These ratings were then averaged to produce an explanatory style score for each participant. The researchers then assessed the statistical relationship between the men’s explanatory style as undergraduate students and archival measures of their health at approximately 60 years of age. The primary result was that the more optimistic the men were as undergraduate students, the healthier they were as older men. Pearson’s r was +.25.
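The scoring and correlation steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' procedure: the participant IDs, ratings, and health scores are invented, and `pearson_r` is a hand-written helper rather than a function from any particular statistics package.

```python
import math

def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(ss_x * ss_y)

# Hypothetical rater scores for each participant's explanations
# (higher = more optimistic). Invented for illustration only.
ratings = {"p1": [2, 3, 4], "p2": [5, 5, 6], "p3": [1, 2, 2], "p4": [4, 4, 5]}
# Hypothetical archival health scores for the same participants.
health = {"p1": 3.0, "p2": 4.5, "p3": 2.0, "p4": 3.5}

# Average the ratings to get one explanatory style score per participant.
style_scores = {pid: mean(scores) for pid, scores in ratings.items()}

participants = sorted(ratings)
r = pearson_r([style_scores[p] for p in participants],
              [health[p] for p in participants])
print(f"Pearson's r = {r:+.2f}")
```

With real data, the value of r would be interpreted alongside the sample size and a significance test, which this sketch leaves out.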

This method is an example of content analysis —a family of systematic approaches to measurement using complex archival data. Just as structured observation requires specifying the behaviors of interest and then noting them as they occur, content analysis requires specifying keywords, phrases, or ideas and then finding all occurrences of them in the data. These occurrences can then be counted, timed (e.g., the amount of time devoted to entertainment topics on the nightly news show), or analyzed in a variety of other ways.
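The counting step of content analysis can be illustrated with a short Python sketch. The codebook categories, phrases, and sample response below are hypothetical, and real content analyses usually rely on trained human raters or far richer coding rules than simple phrase matching.

```python
import re
from collections import Counter

def count_codes(text, codebook):
    """Count how often each category's keywords or phrases occur in text."""
    counts = Counter()
    lowered = text.lower()
    for category, phrases in codebook.items():
        for phrase in phrases:
            # Word boundaries stop a phrase from matching inside a longer word.
            pattern = r"\b" + re.escape(phrase.lower()) + r"\b"
            counts[category] += len(re.findall(pattern, lowered))
    return counts

# Hypothetical codebook and questionnaire response, invented for illustration.
codebook = {
    "self_blame": ["my fault", "i failed"],
    "external_blame": ["bad luck", "the weather"],
}
response = ("It was my fault. I failed to plan, although bad luck "
            "played a part. My fault again.")

counts = count_codes(response, codebook)
print(dict(counts))
```

Occurrences counted this way could then be converted to rates or proportions, depending on the research question.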

Media Attributions

- What happens when you remove the hippocampus? – Sam Kean by TED-Ed licensed under a standard YouTube License

- Pappenheim 1882 by unknown is in the Public Domain .

1. Festinger, L., Riecken, H., & Schachter, S. (1956). When prophecy fails: A social and psychological study of a modern group that predicted the destruction of the world. University of Minnesota Press.

2. Rosenhan, D. L. (1973). On being sane in insane places. Science, 179, 250–258.

3. Wilkins, A. (2008). “Happier than Non-Christians”: Collective emotions and symbolic boundaries among evangelical Christians. Social Psychology Quarterly, 71, 281–301.

4. Levine, R. V., & Norenzayan, A. (1999). The pace of life in 31 countries. Journal of Cross-Cultural Psychology, 30, 178–205.

5. Kraut, R. E., & Johnston, R. E. (1979). Social and emotional messages of smiling: An ethological approach. Journal of Personality and Social Psychology, 37, 1539–1553.

6. Cohen, D., Nisbett, R. E., Bowdle, B. F., & Schwarz, N. (1996). Insult, aggression, and the southern culture of honor: An "experimental ethnography." Journal of Personality and Social Psychology, 70(5), 945–960.

7. Watson, J. B., & Rayner, R. (1920). Conditioned emotional reactions. Journal of Experimental Psychology, 3, 1–14.

8. Freud, S. (1961). Five lectures on psycho-analysis. New York, NY: Norton.

9. Pelham, B. W., Carvallo, M., & Jones, J. T. (2005). Implicit egotism. Current Directions in Psychological Science, 14, 106–110.

10. Peterson, C., Seligman, M. E. P., & Vaillant, G. E. (1988). Pessimistic explanatory style is a risk factor for physical illness: A thirty-five year longitudinal study. Journal of Personality and Social Psychology, 55, 23–27.

Observational research: Research that is non-experimental because it focuses on recording systematic observations of behavior in a natural or laboratory setting without manipulating anything.

Naturalistic observation: An observational method that involves observing people’s behavior in the environment in which it typically occurs.

Disguised naturalistic observation: Naturalistic observation in which researchers make their observations as unobtrusively as possible so that participants are not aware that they are being studied.

Undisguised naturalistic observation: Naturalistic observation in which participants are made aware of the researcher’s presence and the monitoring of their behavior.

Reactivity: When a measure changes participants’ behavior.

Hawthorne effect: In undisguised naturalistic observation, a type of reactivity in which people who know they are being observed and studied act differently than they normally would.

Participant observation: Researchers become active participants in the group or situation they are studying.

Disguised participant observation: Researchers pretend to be members of the social group they are observing and conceal their true identity as researchers.

Undisguised participant observation: Researchers become a part of the group they are studying and disclose their true identity as researchers to the group under investigation.

Structured observation: When a researcher makes careful observations of one or more specific behaviors in a particular setting that is more structured than the settings used in naturalistic or participant observation.

Coding: A part of structured observation whereby the observers use a clearly defined set of guidelines to "code" behaviors (assigning the specific behaviors they observe to a category) and count the number of times or the duration that the behavior occurs.

Case study: An in-depth examination of an individual.

Content analysis: A family of systematic approaches to measurement using qualitative methods to analyze complex archival data.

Research Methods in Psychology Copyright © 2019 by Rajiv S. Jhangiani, I-Chant A. Chiang, Carrie Cuttler, & Dana C. Leighton is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Observation Method in Psychology: Naturalistic, Participant and Controlled

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

The observation method in psychology involves directly and systematically witnessing and recording measurable behaviors, actions, and responses in natural or contrived settings without attempting to intervene or manipulate what is being observed.

Used to describe phenomena, generate hypotheses, or validate self-reports, psychological observation can be either controlled or naturalistic with varying degrees of structure imposed by the researcher.

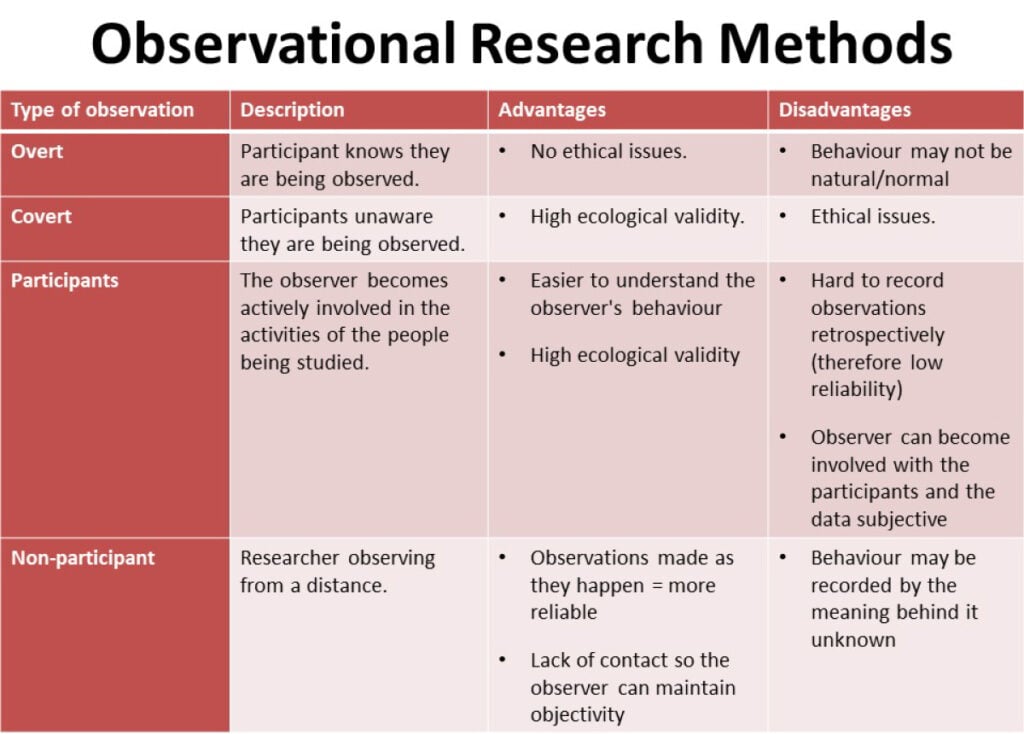

There are different types of observational methods, and distinctions need to be made between:

1. Controlled Observations

2. Naturalistic Observations

3. Participant Observations

In addition to the above categories, observations can also be either overt/disclosed (the participants know they are being studied) or covert/undisclosed (the researcher keeps their real identity a secret from the research subjects, acting as a genuine member of the group).

In general, conducting observational research is relatively inexpensive, but it remains highly time-consuming and resource-intensive in data processing and analysis.

The considerable investments needed in terms of coder time commitments for training, maintaining reliability, preventing drift, and coding complex dynamic interactions place practical barriers on observers with limited resources.

Controlled Observation

Controlled observation is a research method for studying behavior in a carefully controlled and structured environment.

The researcher sets specific conditions, variables, and procedures to systematically observe and measure behavior, allowing for greater control and comparison of different conditions or groups.

The researcher decides where the observation will occur, at what time, with which participants, and in what circumstances, and uses a standardized procedure. Participants are randomly allocated to each independent variable group.

Rather than writing a detailed description of all behavior observed, it is often easier to code behavior according to a previously agreed scale using a behavior schedule (i.e., conducting a structured observation).

The researcher systematically classifies the behavior they observe into distinct categories. Coding might involve numbers or letters to describe a characteristic or the use of a scale to measure behavior intensity.

The categories on the schedule are coded so that the data collected can be easily counted and turned into statistics.

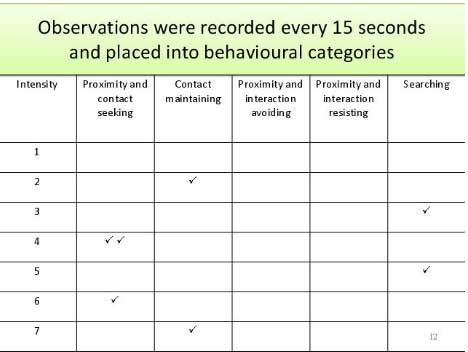

For example, Mary Ainsworth used a behavior schedule to study how infants responded to brief periods of separation from their mothers. During the Strange Situation procedure, the infant’s interaction behaviors directed toward the mother were measured, e.g.,

- Proximity and contact-seeking

- Contact maintaining

- Avoidance of proximity and contact

- Resistance to contact and comforting

The observer noted down the behavior displayed during 15-second intervals and scored the behavior for intensity on a scale of 1 to 7.

Sometimes participants’ behavior is observed through a two-way mirror, or they are secretly filmed. Albert Bandura used this method to study aggression in children (the Bobo doll studies).

A lot of research has been carried out in sleep laboratories as well. Here, electrodes are attached to the scalp of participants, and what is observed are the changes in electrical activity in the brain during sleep (recorded with an electroencephalograph, or EEG).

Controlled observations are usually overt as the researcher explains the research aim to the group so the participants know they are being observed.

Controlled observations are also usually non-participant as the researcher avoids direct contact with the group and keeps a distance (e.g., observing behind a two-way mirror).

Strengths

- Controlled observations can be easily replicated by other researchers using the same observation schedule. This means it is easy to test for reliability.

- The data obtained from structured observations are easier and quicker to analyze because they are quantitative (i.e., numerical), making this a less time-consuming method than naturalistic observation.

- Controlled observations are fairly quick to conduct, which means that many observations can take place within a short amount of time. A large sample can therefore be obtained, making the findings more likely to be representative and generalizable to a larger population.

Limitations

- Controlled observations can lack validity due to the Hawthorne effect/demand characteristics. When participants know they are being watched, they may act differently.

Naturalistic Observation

Naturalistic observation is a research method in which the researcher studies behavior in its natural setting without intervention or manipulation.

It involves observing and recording behavior as it naturally occurs, providing insights into real-life behaviors and interactions in their natural context.

Naturalistic observation is a research method commonly used by psychologists and other social scientists.

This technique involves observing and studying the spontaneous behavior of participants in natural surroundings. The researcher simply records what they see in whatever way they can.

In unstructured observations, the researcher records all relevant behavior without a coding system. There may be too much to record, and the behaviors recorded may not necessarily be the most important, so the approach is usually used as a pilot study to see what types of behavior should be recorded.

Compared with controlled observations, it is like the difference between studying wild animals in a zoo and studying them in their natural habitat.

With regard to human subjects, Margaret Mead used this method to research the way of life of different tribes living on islands in the South Pacific. Kathy Sylva used it to study children at play by observing their behavior in a playgroup in Oxfordshire.

Collecting Naturalistic Behavioral Data

Technological advances are enabling new, unobtrusive ways of collecting naturalistic behavioral data.

The Electronically Activated Recorder (EAR) is a digital recording device participants can wear to periodically sample ambient sounds, allowing representative sampling of daily experiences (Mehl et al., 2012).

Studies program EARs to record 30-50 second sound snippets multiple times per hour. Although coding the recordings requires extensive resources, EARs can capture spontaneous behaviors like arguments or laughter.

EARs minimize participant reactivity since sampling occurs outside of awareness. This reduces the Hawthorne effect, where people change behavior when observed.

The SenseCam is another wearable device that passively captures images documenting daily activities. Though primarily used in memory research currently (Smith et al., 2014), systematic sampling of environments and behaviors via the SenseCam could enable innovative psychological studies in the future.

Strengths

- By being able to observe the flow of behavior in its own setting, studies have greater ecological validity.

- Like case studies, naturalistic observation is often used to generate new ideas. Because it gives the researcher the opportunity to study the total situation, it often suggests avenues of inquiry not thought of before.

- Naturalistic observation can capture actual behaviors as they unfold in real time, analyze sequential patterns of interactions, measure base rates of behaviors, and examine socially undesirable or complex behaviors that people may not self-report accurately.

Limitations

- These observations are often conducted on a micro (small) scale and may lack a representative sample (biased in relation to age, gender, social class, or ethnicity). This may result in the findings lacking the ability to generalize to wider society.

- Natural observations are less reliable as other variables cannot be controlled. This makes it difficult for another researcher to repeat the study in exactly the same way.

- The data coding phase is highly time-consuming and resource-intensive (e.g., training coders, maintaining inter-rater reliability, preventing judgment drift).

- With observations, we do not have manipulations of variables (or control over extraneous variables), meaning cause-and-effect relationships cannot be established.

Participant Observation

Participant observation is a variant of naturalistic observation in which the researcher joins in and becomes part of the group they are studying to get a deeper insight into their lives.

If it were research on animals, we would now not only be studying them in their natural habitat but be living alongside them as well!

Leon Festinger used this approach in a famous study into a religious cult that believed that the end of the world was about to occur. He joined the cult and studied how they reacted when the prophecy did not come true.

Participant observations can be either covert or overt. Covert is where the study is carried out “undercover.” The researcher’s real identity and purpose are kept concealed from the group being studied.

The researcher takes a false identity and role, usually posing as a genuine member of the group.

On the other hand, overt is where the researcher reveals his or her true identity and purpose to the group and asks permission to observe.

Limitations

- It can be difficult to get time/privacy for recording. For example, researchers can’t take notes openly with covert observations as this would blow their cover. This means they must wait until they are alone and rely on their memory. This is a problem as they may forget details and are unlikely to remember direct quotations.

- If the researcher becomes too involved, they may lose objectivity and become biased. There is always the danger that we will “see” what we expect (or want) to see. Researchers may then selectively report information instead of noting everything they observe, which reduces the validity of their data.

Recording of Data

With controlled/structured observation studies, an important decision the researcher has to make is how to classify and record the data. Usually, this will involve a method of sampling.

In most coding systems, codes or ratings are made either per behavioral event or per specified time interval (Bakeman & Quera, 2011).

The three main sampling methods are:

- Event sampling (event-based coding) involves identifying and segmenting interactions into meaningful events rather than timed units. For example, parent-child interactions may be segmented into control or teaching events to code. Event recording allows counting event frequency and sequencing, and it can also capture event duration through timed-event recording, which provides information on time spent on behaviors.

- Interval recording involves dividing interactions into fixed time intervals (e.g., 6-15 seconds) and coding behaviors within each interval (Bakeman & Quera, 2011). It is common in microanalytic coding, where discrete behaviors are sampled in brief time samples across an interaction. The time unit can range from seconds to minutes to whole interactions, and interactions are segmented by timing rather than by events.

- Instantaneous sampling provides snapshot coding at certain moments rather than summarizing behavior within full intervals. This allows quicker coding but may miss behaviors that occur between target times.
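To make the contrast between these sampling methods concrete, the following Python sketch applies all three to the same record. It is a minimal illustration under stated assumptions: the timed-event record, the code names, and the 10-second unit are hypothetical, and the interval function implements only one variant (a code counts if it appears at any point in the interval).

```python
from collections import Counter

# Hypothetical timed-event record: (code, onset_s, offset_s).
events = [("teach", 0, 8), ("control", 8, 12), ("teach", 12, 27), ("play", 27, 30)]

def event_counts(events):
    """Event sampling: how many times each coded event occurred."""
    return Counter(code for code, _, _ in events)

def interval_record(events, session_length, width):
    """Interval recording: the set of codes seen at any point in each interval."""
    intervals = []
    for start in range(0, session_length, width):
        end = min(start + width, session_length)
        present = {code for code, on, off in events if on < end and off > start}
        intervals.append(present)
    return intervals

def instantaneous_sample(events, session_length, step):
    """Instantaneous sampling: the code active at each sampled moment."""
    samples = []
    for t in range(0, session_length, step):
        active = [code for code, on, off in events if on <= t < off]
        samples.append(active[0] if active else None)
    return samples

print(event_counts(events))
print(interval_record(events, 30, 10))
print(instantaneous_sample(events, 30, 10))
```

In this made-up record, the brief "play" episode falls between the snapshot times, so instantaneous sampling never registers it, whereas the event and interval records do.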

Coding Systems

The coding system should focus on behaviors, patterns, individual characteristics, or relationship qualities that are relevant to the theory guiding the study (Wampler & Harper, 2014).

Codes vary in how much inference is required, from concrete observable behaviors like frequency of eye contact to more abstract concepts like degree of rapport between a therapist and client (Hill & Lambert, 2004). More inference may reduce reliability.

Coding schemes can vary in their level of detail or granularity. Micro-level schemes capture fine-grained behaviors, such as specific facial movements, while macro-level schemes might code broader behavioral states or interactions. The appropriate level of granularity depends on the research questions and the practical constraints of the study.

Another important consideration is the concreteness of the codes. Some schemes use physically based codes that are directly observable (e.g., “eyes closed”), while others use more socially based codes that require some level of inference (e.g., “showing empathy”). While physically based codes may be easier to apply consistently, socially based codes often capture more meaningful behavioral constructs.

Most coding schemes strive to create sets of codes that are mutually exclusive and exhaustive (ME&E). This means that for any given set of codes, only one code can apply at a time (mutual exclusivity), and there is always an applicable code (exhaustiveness). This property simplifies both the coding process and subsequent data analysis.

For example, a simple ME&E set for coding infant state might include: 1) Quiet alert, 2) Crying, 3) Fussy, 4) REM sleep, and 5) Deep sleep. At any given moment, an infant would be in one and only one of these states.
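One way to check whether a coded record actually satisfies the ME&E property is to look for moments with no code (a gap) or with more than one code (an overlap). The sketch below assumes a hypothetical record of `(code, onset, offset)` segments in seconds; the function name and data are illustrative only.

```python
def check_me_and_e(segments, session_start, session_end):
    """Return a list of problems; an empty list means the record is ME&E."""
    problems = []
    ordered = sorted(segments, key=lambda seg: seg[1])  # sort by onset
    cursor = session_start  # latest time covered so far
    for code, onset, offset in ordered:
        if onset > cursor:
            problems.append(f"gap {cursor}-{onset}: no code applies")
        elif onset < cursor:
            problems.append(
                f"overlap {onset}-{min(cursor, offset)}: more than one code applies"
            )
        cursor = max(cursor, offset)
    if cursor < session_end:
        problems.append(f"gap {cursor}-{session_end}: no code applies")
    return problems

# Hypothetical infant-state records for a 90-second session.
clean = [("quiet alert", 0, 40), ("fussy", 40, 55), ("crying", 55, 90)]
flawed = [("quiet alert", 0, 40), ("fussy", 35, 55), ("crying", 60, 90)]

print(check_me_and_e(clean, 0, 90))
print(check_me_and_e(flawed, 0, 90))
```

A check like this could be run on each coder's output before analysis, since many sequential statistics assume exactly one code per moment.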

Macroanalytic coding systems

Macroanalytic coding systems involve rating or summarizing behaviors using larger coding units and broader categories that reflect patterns across longer periods of interaction rather than coding small or discrete behavioral acts.

Macroanalytic coding systems focus on capturing overarching themes, global qualities, or general patterns of behavior rather than specific, discrete actions.

For example, a macroanalytic coding system may rate the overall degree of therapist warmth or level of client engagement globally for an entire therapy session, requiring the coders to summarize and infer these constructs across the interaction rather than coding smaller behavioral units.

These systems require observers to make more inferences (more time-consuming) but can better capture contextual factors, stability over time, and the interdependent nature of behaviors (Carlson & Grotevant, 1987).

Examples of Macroanalytic Coding Systems:

- Emotional Availability Scales (EAS) : This system assesses the quality of emotional connection between caregivers and children across dimensions like sensitivity, structuring, non-intrusiveness, and non-hostility.

- Classroom Assessment Scoring System (CLASS) : Evaluates the quality of teacher-student interactions in classrooms across domains like emotional support, classroom organization, and instructional support.

Microanalytic coding systems

Microanalytic coding systems involve rating behaviors using smaller, more discrete coding units and categories.

These systems focus on capturing specific, discrete behaviors or events as they occur moment-to-moment. Behaviors are often coded second-by-second or in very short time intervals.

For example, a microanalytic system may code each instance of eye contact or head nodding during a therapy session. These systems code specific, molecular behaviors as they occur moment-to-moment rather than summarizing actions over longer periods.

Microanalytic systems require less inference from coders and allow for analysis of behavioral contingencies and sequential interactions between therapist and client. However, they are more time-consuming and expensive to implement than macroanalytic approaches.

Examples of Microanalytic Coding Systems:

- Facial Action Coding System (FACS) : Codes minute facial muscle movements to analyze emotional expressions.

- Specific Affect Coding System (SPAFF) : Used in marital interaction research to code specific emotional behaviors.

- Noldus Observer XT : A software system that allows for detailed coding of behaviors in real-time or from video recordings.

Mesoanalytic coding systems

Mesoanalytic coding systems attempt to balance macro- and micro-analytic approaches.

In contrast to macroanalytic systems that summarize behaviors in larger chunks, mesoanalytic systems use medium-sized coding units that target more specific behaviors or interaction sequences (Bakeman & Quera, 2017).

For example, a mesoanalytic system may code each instance of a particular type of therapist statement or client emotional expression. However, mesoanalytic systems still use larger units than microanalytic approaches that code every speech onset and offset.

The goal of balancing specificity and feasibility makes mesoanalytic systems well-suited for many research questions (Morris et al., 2014). Mesoanalytic codes can preserve some sequential information while remaining efficient enough for studies with adequate but limited resources.

For instance, a mesoanalytic couple interaction coding system could target key behavior patterns like validation sequences without coding turn-by-turn speech.

In this way, mesoanalytic coding allows reasonable reliability and specificity without requiring extensive training or observation. The mid-level focus offers a pragmatic compromise between depth and breadth in analyzing interactions.

Examples of Mesoanalytic Coding Systems:

- Feeding Scale for Mother-Infant Interaction : Assesses feeding interactions in 5-minute episodes, coding specific behaviors and overall qualities.

- Couples Interaction Rating System (CIRS): Codes specific behaviors and rates overall qualities in segments of couple interactions.

- Teaching Styles Rating Scale : Combines frequency counts of specific teacher behaviors with global ratings of teaching style in classroom segments.

Preventing Coder Drift

Coder drift is measurement error caused by gradual shifts in how coders apply operational definitions when rating observations, especially when behavioral codes are not clearly specified.

This type of error creeps in when coders fail to regularly review what precise observations constitute or do not constitute the behaviors being measured.

Preventing drift refers to taking active steps to maintain consistency and minimize changes or deviations in how coders rate or evaluate behaviors over time. Specifically, some key ways to prevent coder drift include:

- Operationalize codes : It is essential that code definitions unambiguously distinguish what interactions represent instances of each coded behavior.

- Ongoing training : Returning to those operational definitions through ongoing training serves to recalibrate coder interpretations and reinforce accurate recognition. Having regular “check-in” sessions where coders practice coding the same interactions allows monitoring that they continue applying codes reliably without gradual shifts in interpretation.

- Using reference videos : Having coders periodically code the same “gold standard” reference videos anchors their judgments and calibrates them against the original training. Without periodic anchoring to original specifications, coder decisions tend to drift from initial measurement reliability.

- Assessing inter-rater reliability : Statistically tracking whether coders maintain high levels of agreement over the course of a study, not just at the start, flags any declines that indicate drift. Sustaining inter-rater agreement requires mitigating the common tendency for observer judgment to change during intensive, long-term coding tasks.

- Recalibrating through discussion : Meetings in which coders openly discuss disagreements help identify why judgments may be shifting over time and restore consensus on how codes are applied.

- Adjusting unclear codes : If reliability issues persist, revisiting and refining ambiguous code definitions or anchors can eliminate inconsistencies arising from coder confusion.

Essentially, the goal of preventing coder drift is maintaining standardization and minimizing unintentional biases that may slowly alter how observational data gets rated over periods of extensive coding.

Through the upkeep of skills, continuing calibration to benchmarks, and monitoring consistency, researchers can notice and correct for any creeping changes in coder decision-making over time.
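Monitoring consistency against a benchmark can be as simple as recomputing agreement with a reference coding at regular check-ins. The following Python sketch is a minimal illustration: the reference codes, check-in labels, and coder data are hypothetical, and the 0.80 threshold is an assumption that mirrors the 80% agreement criterion mentioned for coder training in this article.

```python
def percent_agreement(coder, reference):
    """Proportion of units on which a coder matches the reference coding."""
    if len(coder) != len(reference) or not reference:
        raise ValueError("code lists must be non-empty and the same length")
    matches = sum(c == r for c, r in zip(coder, reference))
    return matches / len(reference)

def flag_drift(check_ins, reference, threshold=0.80):
    """Return labels of check-ins where agreement fell below the threshold."""
    return [
        label
        for label, codes in check_ins
        if percent_agreement(codes, reference) < threshold
    ]

# Hypothetical "gold standard" codes for ten units of a reference video.
reference = ["A", "B", "A", "C", "B", "A", "C", "C", "B", "A"]

# The same coder re-codes the reference video at three points in the study.
check_ins = [
    ("week 1", ["A", "B", "A", "C", "B", "A", "C", "C", "B", "A"]),
    ("week 6", ["A", "B", "A", "C", "B", "A", "C", "B", "B", "A"]),
    ("week 12", ["A", "B", "B", "C", "B", "A", "B", "B", "B", "A"]),
]

for label, codes in check_ins:
    print(label, percent_agreement(codes, reference))
print("Recalibrate after:", flag_drift(check_ins, reference))
```

Percent agreement does not correct for chance, so a chance-corrected index such as Cohen's kappa is often reported alongside it.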

Reducing Observer Bias

Observational research is prone to observer biases resulting from coders’ subjective perspectives shaping the interpretation of complex interactions (Burghardt et al., 2012). When coding, personal expectations may unconsciously influence judgments. However, rigorous methods exist to reduce such bias.

Coding Manual

A detailed coding manual minimizes subjectivity by clearly defining what behaviors and interaction dynamics observers should code (Bakeman & Quera, 2011).

High-quality manuals have strong theoretical and empirical grounding, laying out explicit coding procedures and providing rich behavioral examples to anchor code definitions (Lindahl, 2001).

Clear delineation of the frequency, intensity, duration, and type of behaviors constituting each code facilitates reliable judgments and reduces ambiguity for coders. Without clarity on how codes translate to observable interaction, raters risk applying them inconsistently.

Coder Training

Competent coders require both interpersonal perceptiveness and scientific rigor (Wampler & Harper, 2014). Training thoroughly reviews the theoretical basis for coded constructs and teaches the coding system itself.

Multiple “gold standard” criterion videos demonstrate code ranges that trainees independently apply. Coders then meet weekly to establish reliability of 80% or higher agreement both among themselves and with master criterion coding (Hill & Lambert, 2004).

Ongoing training manages coder drift over time. Revisions to unclear codes may also improve reliability. Both careful selection and investment in rigorous training increase quality control.

Blind Methods

To prevent bias, coders should remain unaware of specific study predictions, study details, and participant identities that could influence their coding (Burghardt et al., 2012). Using separate teams for data collection and data coding helps maintain blinding.

In addition, scheduling procedures can prevent coders from rating data collected directly from participants with whom they have had personal contact. Maintaining coder independence and blinding enhances objectivity.

Data Analysis Approaches

Data analysis in behavioral observation aims to transform raw observational data into quantifiable measures that can be statistically analyzed.

It’s important to note that the choice of analysis approach is not arbitrary but should be guided by the research questions, study design, and nature of the data collected.

Interval data (where behavior is recorded at fixed time points), event data (where the occurrence of behaviors is noted as they happen), and timed-event data (where both the occurrence and duration of behaviors are recorded) may require different analytical approaches.

Similarly, the level of measurement (categorical, ordinal, or continuous) will influence the choice of statistical tests.

Researchers typically start with simple descriptive statistics to get a feel for their data before moving on to more complex analyses. This stepwise approach allows for a thorough understanding of the data and can often reveal unexpected patterns or relationships that merit further investigation.

Simple descriptive statistics

Descriptive statistics give an overall picture of behavior patterns and are often the first step in analysis.

- Frequency counts tell us how often a particular behavior occurs, while rates express this frequency in relation to time (e.g., occurrences per minute).

- Duration measures how long behaviors last, offering insight into their persistence or intensity.

- Probability calculations indicate the likelihood of a behavior occurring under certain conditions, and relative frequency or duration statistics show the proportional occurrence of different behaviors within a session or across the study.

These simple statistics form the foundation of behavioral analysis, providing researchers with a broad picture of behavioral patterns.

They can reveal which behaviors are most common, how long they typically last, and how they might vary across different conditions or subjects.

For instance, in a study of classroom behavior, these statistics might show how often students raise their hands, how long they typically stay focused on a task, or what proportion of time is spent on different activities.
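These descriptive measures can be computed directly from a timed-event record. The sketch below is an illustration under stated assumptions: the classroom codes, onset and offset times, and the 120-second session length are hypothetical.

```python
from collections import defaultdict

def describe(events, session_seconds):
    """Frequency, rate, duration, and relative duration for each code."""
    totals = defaultdict(lambda: {"count": 0, "duration": 0.0})
    for code, onset, offset in events:
        totals[code]["count"] += 1
        totals[code]["duration"] += offset - onset
    summary = {}
    for code, t in totals.items():
        summary[code] = {
            "frequency": t["count"],
            "rate_per_min": t["count"] / (session_seconds / 60),
            "total_duration_s": t["duration"],
            "mean_duration_s": t["duration"] / t["count"],
            "proportion_of_session": t["duration"] / session_seconds,
        }
    return summary

# Hypothetical record for one student: (code, onset_s, offset_s).
events = [
    ("on_task", 0, 50),
    ("hand_raised", 50, 60),
    ("on_task", 60, 100),
    ("off_task", 100, 120),
]

summary = describe(events, session_seconds=120)
for code, stats in summary.items():
    print(code, stats)
```

Summaries like these are usually computed per session or per participant first and then compared across conditions.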

Contingency analyses

Contingency analyses help identify if certain behaviors tend to occur together or in sequence.

- Contingency tables , also known as cross-tabulations, display the co-occurrence of two or more behaviors, allowing researchers to see if certain behaviors tend to happen together.

- Odds ratios provide a measure of the strength of association between behaviors, indicating how much more likely one behavior is to occur in the presence of another.

- Adjusted residuals in these tables can reveal whether the observed co-occurrences are significantly different from what would be expected by chance.

For example, in a study of parent-child interactions, contingency analyses might reveal whether a parent’s praise is more likely to follow a child’s successful completion of a task, or whether a child’s tantrum is more likely to occur after a parent’s refusal of a request.

These analyses can uncover important patterns in social interactions, learning processes, or behavioral chains.
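As a rough sketch of how these quantities relate, the following Python computes an odds ratio and adjusted residuals for a 2×2 table. The counts are invented for illustration (rows: the parent's preceding behavior was a refusal or something else; columns: the child's next behavior was a tantrum or something else), and the function names are hypothetical. The adjusted residual used here is the usual standardized form, (observed − expected) / sqrt(expected × (1 − row share) × (1 − column share)).

```python
import math

# Hypothetical 2x2 contingency table of counts.
# Rows: preceding parent behavior (refusal, other).
# Columns: child's next behavior (tantrum, other).
table = [
    [30, 20],
    [10, 40],
]


def odds_ratio(table):
    """Odds ratio for a 2x2 table; undefined if an off-diagonal cell is 0."""
    (a, b), (c, d) = table
    return (a * d) / (b * c)


def adjusted_residuals(table):
    """Adjusted (standardized) residual for every cell of a contingency table."""
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    result = []
    for i, row in enumerate(table):
        out_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            variance = (
                expected
                * (1 - row_totals[i] / n)
                * (1 - col_totals[j] / n)
            )
            out_row.append((observed - expected) / math.sqrt(variance))
        result.append(out_row)
    return result


ratio = odds_ratio(table)
residuals = adjusted_residuals(table)
print("odds ratio:", ratio)
print("adjusted residuals:", residuals)
```

Adjusted residuals are commonly read against a standard normal distribution, so absolute values well above about 2 suggest a cell count that differs from chance expectation. That reading assumes reasonably large expected counts.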

Sequential analyses

Sequential analyses are crucial for understanding processes and temporal relationships between behaviors.

- Lag sequential analysis looks at the likelihood of one behavior following another within a specified number of events or time units.

- Time-window sequential analysis examines whether a target behavior occurs within a defined time frame after a given behavior.

These methods are particularly valuable for understanding processes that unfold over time, such as conversation patterns, problem-solving strategies, or the development of social skills.
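The two ideas can be illustrated with a short Python sketch. Both functions are simplified, hypothetical helpers rather than the procedures implemented in dedicated software: the first estimates transitional probabilities at a given event lag, and the second reports the proportion of "given" events followed by a target onset within a time window. The codes and timestamps are invented.

```python
from collections import Counter


def lag_transition_probabilities(sequence, lag=1):
    """Estimate P(target at position t + lag | given at position t)."""
    if lag < 1:
        raise ValueError("lag must be a positive integer")
    pair_counts = Counter(zip(sequence, sequence[lag:]))
    # Only positions that have a successor at this lag can act as "given".
    given_counts = Counter(sequence[:-lag])
    return {
        (given, target): count / given_counts[given]
        for (given, target), count in pair_counts.items()
    }


def proportion_followed_within(timed_events, given, target, window_s):
    """Share of `given` onsets followed by a `target` onset within window_s."""
    given_times = [t for code, t in timed_events if code == given]
    target_times = [t for code, t in timed_events if code == target]
    if not given_times:
        return None
    hits = sum(
        any(0 < tt - gt <= window_s for tt in target_times)
        for gt in given_times
    )
    return hits / len(given_times)


# Hypothetical event sequence from a classroom exchange.
sequence = ["question", "answer", "question", "answer",
            "praise", "question", "answer", "praise"]
probs = lag_transition_probabilities(sequence, lag=1)

# Hypothetical timed onsets (code, seconds from session start).
timed_events = [
    ("refusal", 5.0), ("tantrum", 8.0),
    ("refusal", 40.0), ("tantrum", 70.0),
    ("refusal", 100.0), ("tantrum", 103.0),
]
proportion = proportion_followed_within(
    timed_events, "refusal", "tantrum", window_s=10.0
)

print(probs)
print(proportion)
```

In practice these raw proportions would be compared with what chance alone would produce, for example through the contingency statistics described earlier.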

Observer agreement

Since human observers often code behaviors, it’s important to check reliability. This is typically done through measures of observer agreement.

- Cohen’s kappa is commonly used for categorical data, providing a measure of agreement between observers that accounts for chance agreement.

- Intraclass correlation coefficient (ICC) is used for continuous data or ratings.

Good observer agreement is crucial for the validity of the study, as it demonstrates that the observed behaviors are consistently identified and coded across different observers or time points.
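To make the chance correction concrete, here is a minimal Python sketch of Cohen's kappa for two observers who coded the same intervals. The codes are invented, and `cohens_kappa` is an illustrative helper rather than a reference implementation. Kappa is (observed agreement − expected agreement) / (1 − expected agreement), where expected agreement comes from each observer's marginal code frequencies.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two equally long lists of categorical codes."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both observers must code the same non-empty units")
    n = len(codes_a)
    # Proportion of units on which the two observers agree.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Agreement expected by chance from each observer's marginal frequencies.
    freq_a = Counter(codes_a)
    freq_b = Counter(codes_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return float("nan")  # undefined when only one code was ever used
    return (observed - expected) / (1 - expected)


# Hypothetical interval codes from two observers.
observer_1 = ["on", "on", "off", "on", "off", "off", "on", "on", "off", "on"]
observer_2 = ["on", "on", "off", "off", "off", "off", "on", "on", "on", "on"]

kappa = cohens_kappa(observer_1, observer_2)
print(round(kappa, 3))
```

Here the observers agree on 8 of 10 intervals (0.80), but chance agreement is 0.52, so kappa is noticeably lower than the raw agreement rate.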

Advanced statistical approaches

As researchers delve deeper into their data, they often employ more advanced statistical techniques.

- Analysis of variance (ANOVA) compares group means. For instance, an ANOVA might reveal differences in the frequency of aggressive behaviors between children from different socioeconomic backgrounds or in different school settings.

- Multilevel modeling allows researchers to account for dependencies in the data and to examine how behaviors might be influenced by factors at different levels (e.g., individual characteristics, group dynamics, and situational factors).

- Time series analysis can reveal trends, cycles, or patterns in behavior over time, which might not be apparent from simpler analyses. For instance, in a study of animal behavior, time series analysis might uncover daily or seasonal patterns in feeding, mating, or territorial behaviors.
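As a small worked example of the ANOVA case, the sketch below computes a one-way F statistic by hand for three groups. The counts of aggressive behaviors per child are invented, the group labels are assumptions, and `one_way_anova_f` is a hypothetical helper. It stops at the F statistic and its degrees of freedom, since obtaining a p-value requires the F distribution from a statistics library.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means)
    )
    # Variation of observations around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical counts of aggressive behaviors per child in three settings.
setting_a = [2, 3, 4]
setting_b = [5, 6, 7]
setting_c = [8, 9, 10]

f_stat, df_between, df_within = one_way_anova_f(
    [setting_a, setting_b, setting_c]
)
print(f_stat, df_between, df_within)
```

Note that count data from repeated observations of the same children would violate the independence assumption of this simple test, which is one reason multilevel approaches are often preferred.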

Representation techniques

Representation techniques help organize and visualize data:

- Many researchers use a code-unit grid, which represents the data as a matrix with behaviors as rows and time units as columns. This format facilitates many types of analyses and allows for easy visualization of behavioral patterns.

- Standardized formats like the Sequential Data Interchange Standard (SDIS) help ensure consistency in data representation across studies and facilitate the use of specialized analysis software.

The complexity of behavioral observation data often necessitates the use of specialized software tools. Programs like GSEQ, Observer, and INTERACT are designed specifically for the analysis of observational data and can perform many of the analyses described above efficiently and accurately.
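The code-unit grid mentioned above can be sketched as a small Python structure. This is an illustrative layout only, not the SDIS format or the internal representation of any of the named programs: the behavior codes, onset and offset times, and the one-second unit are assumptions, and `build_code_unit_grid` is a hypothetical helper.

```python
import math


def build_code_unit_grid(events, codes, session_length_s, unit_s=1.0):
    """Build a grid with one row per code and one column per time unit.

    A cell is 1 if the behavior was ongoing during any part of that unit.
    """
    n_units = int(math.ceil(session_length_s / unit_s))
    grid = {code: [0] * n_units for code in codes}
    for code, onset, offset in events:
        first = int(onset // unit_s)
        last = min(n_units, int(math.ceil(offset / unit_s)))
        for unit in range(first, last):
            grid[code][unit] = 1
    return grid


# Hypothetical timed events: (code, onset in seconds, offset in seconds).
events = [
    ("talk", 0, 3),
    ("gesture", 2, 5),
    ("talk", 6, 8),
]
grid = build_code_unit_grid(
    events, codes=["talk", "gesture"], session_length_s=10
)

# Print behaviors as rows and time units as columns.
for code, row in grid.items():
    print(f"{code:8s}", "".join(str(cell) for cell in row))

# Units in which both behaviors were coded (co-occurrence).
overlap_units = sum(t and g for t, g in zip(grid["talk"], grid["gesture"]))
print("co-occurring units:", overlap_units)
```

Once the data are in this form, frequencies, durations, and co-occurrences reduce to simple row and column operations.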

Bakeman, R., & Quera, V. (2017). Sequential analysis and observational methods for the behavioral sciences. Cambridge University Press.

Burghardt, G. M., Bartmess-LeVasseur, J. N., Browning, S. A., Morrison, K. E., Stec, C. L., Zachau, C. E., & Freeberg, T. M. (2012). Minimizing observer bias in behavioral studies: A review and recommendations. Ethology, 118 (6), 511-517.

Hill, C. E., & Lambert, M. J. (2004). Methodological issues in studying psychotherapy processes and outcomes. In M. J. Lambert (Ed.), Bergin and Garfield’s handbook of psychotherapy and behavior change (5th ed., pp. 84–135). Wiley.

Lindahl, K. M. (2001). Methodological issues in family observational research. In P. K. Kerig & K. M. Lindahl (Eds.), Family observational coding systems: Resources for systemic research (pp. 23–32). Lawrence Erlbaum Associates.

Mehl, M. R., Robbins, M. L., & Deters, F. G. (2012). Naturalistic observation of health-relevant social processes: The electronically activated recorder methodology in psychosomatics. Psychosomatic Medicine, 74 (4), 410–417.

Morris, A. S., Robinson, L. R., & Eisenberg, N. (2014). Applying a multimethod perspective to the study of developmental psychology. In H. T. Reis & C. M. Judd (Eds.), Handbook of research methods in social and personality psychology (2nd ed., pp. 103–123). Cambridge University Press.

Smith, J. A., Maxwell, S. D., & Johnson, G. (2014). The microstructure of everyday life: Analyzing the complex choreography of daily routines through the automatic capture and processing of wearable sensor data. In B. K. Wiederhold & G. Riva (Eds.), Annual Review of Cybertherapy and Telemedicine 2014: Positive Change with Technology (Vol. 199, pp. 62-64). IOS Press.

Traniello, J. F., & Bakker, T. C. (2015). The integrative study of behavioral interactions across the sciences. In T. K. Shackelford & R. D. Hansen (Eds.), The evolution of sexuality (pp. 119-147). Springer.

Wampler, K. S., & Harper, A. (2014). Observational methods in couple and family assessment. In H. T. Reis & C. M. Judd (Eds.), Handbook of research methods in social and personality psychology (2nd ed., pp. 490–502). Cambridge University Press.

Case study: Methods and observations of overwintering Eptesicus fuscus with White-Nose Syndrome in Ohio, USA

Research output: Contribution to journal › Article › peer-review

| Original language | English |
|---|---|
| Pages (from-to) | 11-16 |
| Number of pages | 6 |
| Journal | Journal of Wildlife Rehabilitation |
| Volume | 38 |
| Issue number | 3 |
| State | Published - 2018 |
| Externally published | Yes |

ASJC Scopus Subject Areas

- Animal Science and Zoology

- General Veterinary

Keywords

- Eptesicus fuscus

- Pseudogymnoascus destructans

- White-Nose Syndrome

- Wildlife disease

- Wildlife rehabilitation


T1 - Case study

T2 - Methods and observations of overwintering Eptesicus fuscus with White-Nose Syndrome in Ohio, USA

AU - Simonis, Molly C.

AU - Crow, Rebecca A.

AU - Rúa, Megan A.

N1 - Publisher Copyright: © 2018 International Wildlife Rehabilitation Council. All rights reserved.

KW - Eptesicus fuscus

KW - Pseudogymnoascus destructans

KW - White-Nose Syndrome

KW - Wildlife disease

KW - Wildlife rehabilitation

UR - http://www.scopus.com/inward/record.url?scp=85060529383&partnerID=8YFLogxK

UR - http://www.scopus.com/inward/citedby.url?scp=85060529383&partnerID=8YFLogxK

M3 - Article

AN - SCOPUS:85060529383

SN - 1071-2232

JO - Journal of Wildlife Rehabilitation

JF - Journal of Wildlife Rehabilitation

Comparative case study on NAMs: towards enhancing specific target organ toxicity analysis

- Regulatory Toxicology

- Open access

- Published: 29 August 2024


- Kristina Jochum (1)

- Andrea Miccoli (1, 2, 5)

- Cornelia Sommersdorf (3)

- Oliver Poetz (3, 4)

- Albert Braeuning (5)

- Tewes Tralau (1)

- Philip Marx-Stoelting (1), ORCID: orcid.org/0000-0002-6487-2153

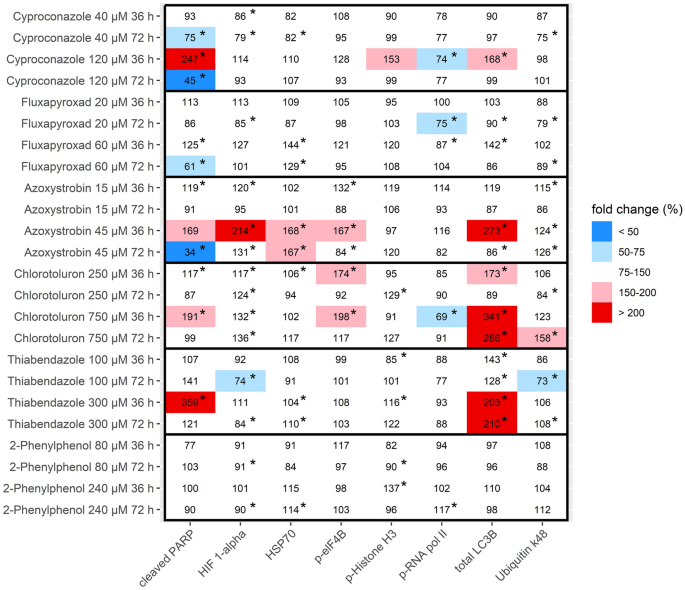

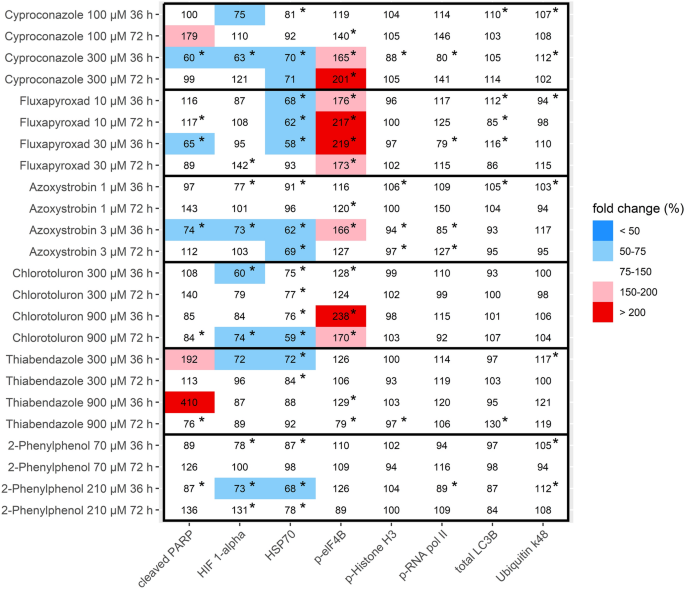

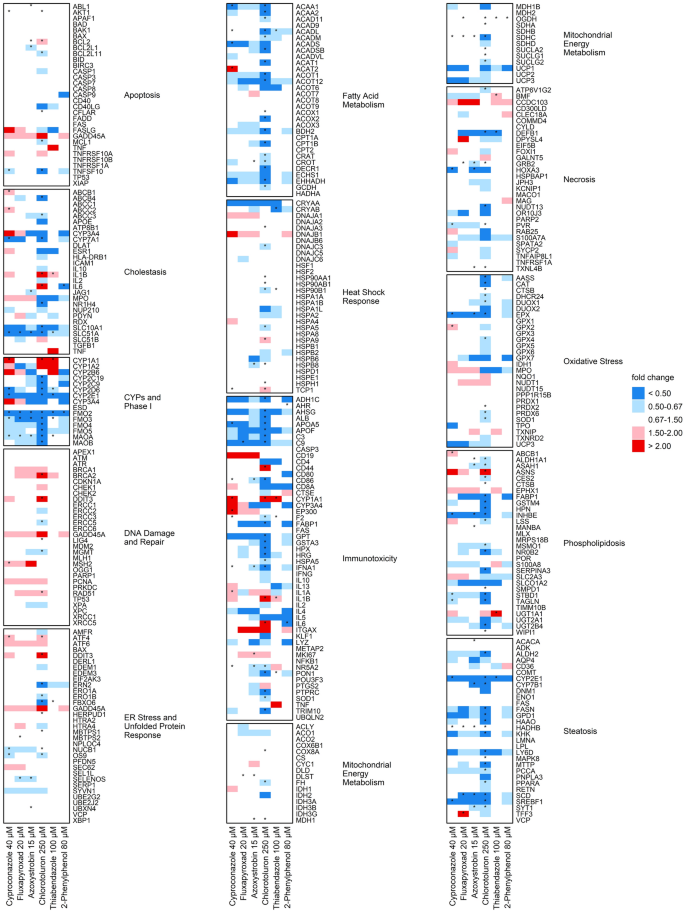

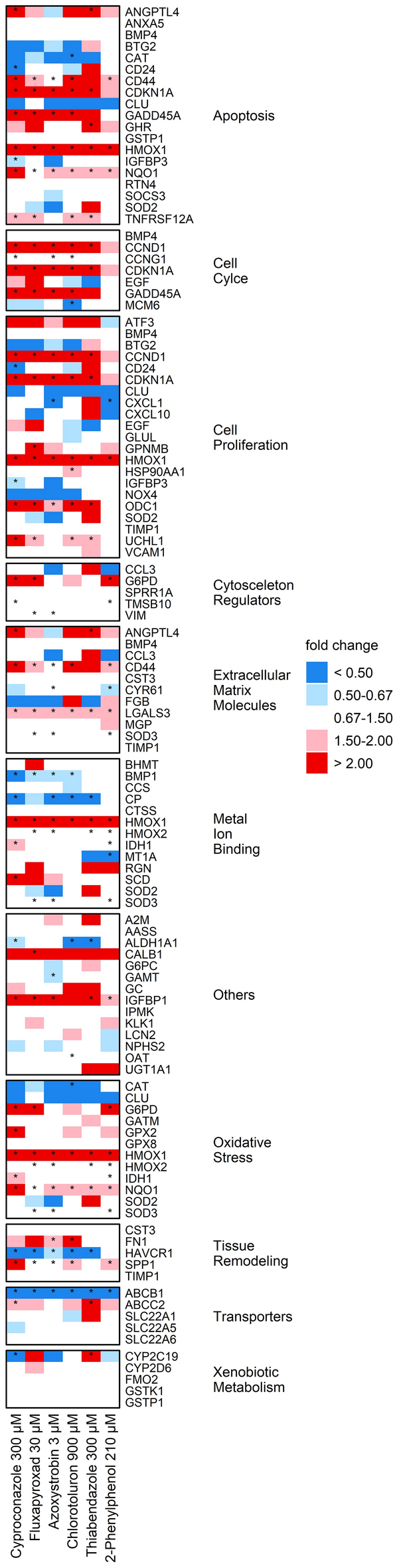

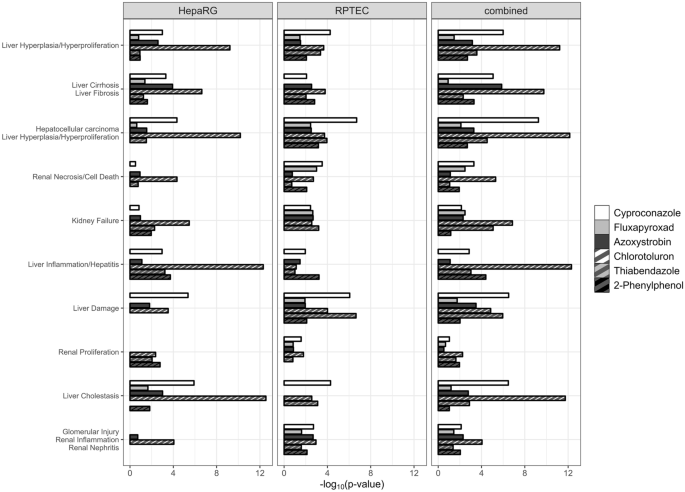

Traditional risk assessment methodologies in toxicology have relied upon animal testing, despite concerns regarding interspecies consistency, reproducibility, costs, and ethics. New Approach Methodologies (NAMs), including cell culture and multi-level omics analyses, hold promise by providing mechanistic information rather than assessing organ pathology. However, NAMs face limitations, such as the lack of a whole organism and restricted toxicokinetic interactions. This is an inherent challenge when it comes to the use of omics data from in vitro studies for the prediction of organ toxicity in vivo. One solution in this context is comparative in vitro–in vivo studies, which allow for a more detailed assessment of the transferability of the respective NAM data. Hence, hepatotoxic and nephrotoxic pesticide active substances were tested in human cell lines and the results subsequently related to the biology underlying established effects in vivo. To this end, substances were tested in HepaRG and RPTEC/tERT1 cells at non-cytotoxic concentrations and analyzed for effects on the transcriptome and parts of the proteome using quantitative real-time PCR arrays and multiplexed microsphere-based sandwich immunoassays, respectively. Transcriptomics data were analyzed using three bioinformatics tools. Where possible, in vitro endpoints were connected to in vivo observations. Targeted protein analysis revealed various affected pathways, with generally fewer effects present in RPTEC/tERT1. The strongest transcriptional impact was observed for Chlorotoluron in HepaRG cells (increased CYP1A1 and CYP1A2 expression). A comprehensive comparison of early cellular responses with data from in vivo studies revealed that transcriptomics outperformed targeted protein analysis, correctly predicting up to 50% of in vivo effects.


Introduction

Given the at times heated discussions about regulatory toxicology in the political and public domain, the quite remarkable track record of toxicological health protection sometimes tends to go unnoticed. Not only are chemical scares such as the chemically induced massive acute health impacts in the 1950s, 60s and 70s a thing of the past (Herzler et al. 2021), but in many parts of the world, there are now regulatory frameworks in place which aim at the early identification of potential health risks from chemicals. Within Europe, the most notable in terms of impact are probably REACH (EC 2006) and the regulations on pesticides (EC 2009), both of which still overwhelmingly rely on animal data for their risk assessments. There are manifold reasons for this, one being the historical reliability of animal-based systems for the prediction of adversity in humans. However, there are a number of challenges to this traditional approach. These comprise capacity issues when it comes to the testing of thousands of new or hitherto untested substances, the testing of mixtures, the ever-daunting question of species specificity, and the limitation of current in vivo studies regarding less accessible endpoints such as immunotoxicity or developmental neurotoxicity.

Over recent years, so-called New Approach Methodologies (NAMs) have thus attracted increased attention and importance for regulatory toxicology. The United States Environmental Protection Agency (US EPA 2018) defines NAM as ‘…a broadly descriptive reference to any technology, methodology, approach, or combination thereof that can be used to provide information on chemical hazard and risk assessment that avoids the use of intact animals…’. One instance of an attempt to replace an animal test with an in vitro test system is the embryonic stem cell test in the area of developmental toxicology (Buesen et al. 2004; Seiler et al. 2006). This stand-alone test was first evaluated for assessing the embryotoxic potential of chemicals as early as 2004 (Genschow et al. 2004). While its establishment as a regulatory prediction model took several more years, one major outcome was the realization that the use of NAMs in general is greatly improved when used as part of a biologically and toxicologically meaningful testing battery (Marx-Stoelting et al. 2009; Schenk et al. 2010). It should be noted that despite all the potential of such testing batteries, a tentative one-to-one replacement of animal studies is neither practical nor straightforward. The reason is not only the complexity of the endpoints in question but also practical constraints. This was recently exemplified by Landsiedel et al., who pointed out that with the number of different organs and tissues tested during one sub-chronic rodent study, and assuming that five NAMs are needed to address the adverse outcomes in any of those organs, it would take decades just to replace this one study. Any regulatory use of NAMs should hence preferably rely on their direct use (Landsiedel et al. 2022).

An example from the field of hepatotoxicity testing is the in vitro toolbox for steatosis that was developed by Luckert et al. (2018) based on the adverse outcome pathway (AOP) concept by Vinken (2015). The authors employed five assays covering relevant key events from the AOP in HepaRG cells after incubation with the test substance Cyproconazole. Concomitantly, transcript and protein marker patterns for the identification of steatotic compounds were established in HepaRG cells (Lichtenstein et al. 2020). The findings were subsequently brought together in a proposed protocol for AOP-based analysis of liver steatosis in vitro (Karaca et al. 2023a).

One promising use for such cell-based systems is their combination with multi-level omics. In conjunction with sufficient biological and mechanistic knowledge, the wealth of information provided by multi-omics data should potentially allow some prediction of substance-induced adversity. That said, any such prediction can of course only be reliable within the established limits of such systems, such as the lack of a whole organism, incomplete toxicokinetics, and restrictions on adequately capturing the effects of long-term exposure (Schmeisser et al. 2023). Regulatory use of and trust in cell-based systems will, therefore, strongly rely on how they compare to the outcome of studies based on systemic data (Schmeisser et al. 2023).