Type I & Type II Errors | Differences, Examples, Visualizations

Published on January 18, 2021 by Pritha Bhandari . Revised on June 22, 2023.

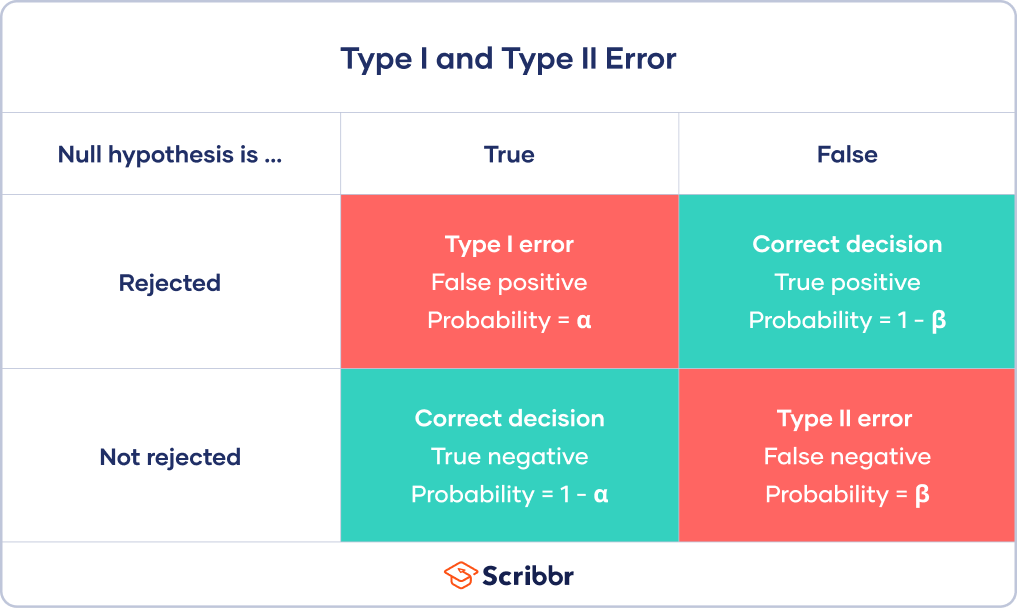

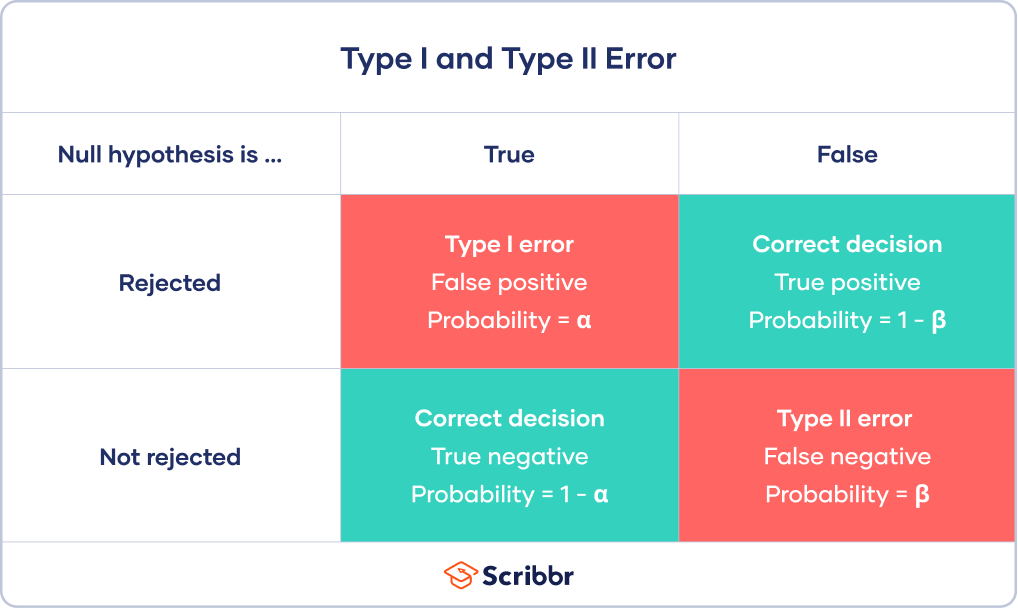

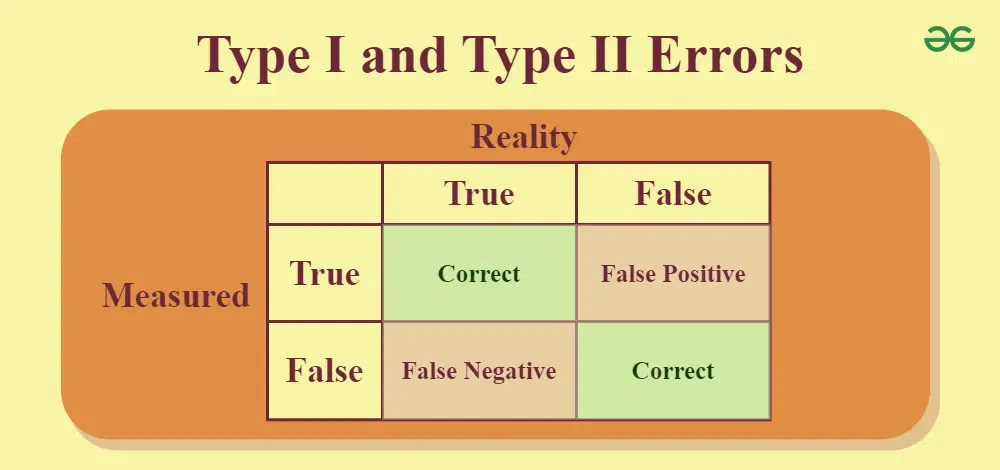

In statistics, a Type I error is a false positive conclusion, while a Type II error is a false negative conclusion.

Making a statistical decision always involves uncertainties, so the risks of making these errors are unavoidable in hypothesis testing.

The probability of making a Type I error is the significance level, or alpha (α), while the probability of making a Type II error is beta (β). These risks can be minimized through careful planning in your study design.

- Type I error (false positive): the test result says you have coronavirus, but you actually don’t.

- Type II error (false negative): the test result says you don’t have coronavirus, but you actually do.


Using hypothesis testing, you can make decisions about whether your data support or refute your research predictions with null and alternative hypotheses.

Hypothesis testing starts with the assumption of no difference between groups or no relationship between variables in the population. This is the null hypothesis. It’s always paired with an alternative hypothesis, which is your research prediction of an actual difference between groups or a true relationship between variables.

For example, suppose you are testing whether a new drug relieves symptoms of a disease. In this case:

- The null hypothesis (H0) is that the new drug has no effect on symptoms of the disease.

- The alternative hypothesis (H1) is that the drug is effective for alleviating symptoms of the disease.

Then, you decide whether the null hypothesis can be rejected based on your data and the results of a statistical test. Since these decisions are based on probabilities, there is always a risk of making the wrong conclusion.

- If your results show statistical significance, that means they are very unlikely to occur if the null hypothesis is true. In this case, you would reject your null hypothesis. But sometimes, this may actually be a Type I error.

- If your findings do not show statistical significance, they are not unlikely enough under the null hypothesis to count as evidence against it. Therefore, you fail to reject your null hypothesis. But sometimes, this may be a Type II error.


A Type I error means rejecting the null hypothesis when it’s actually true. It means concluding that results are statistically significant when, in reality, they came about purely by chance or because of unrelated factors.

The risk of committing this error is the significance level (alpha or α) you choose. That’s a threshold that you set at the beginning of your study and compare against the p value, the probability of obtaining results at least as extreme as yours if the null hypothesis is true.

The significance level is usually set at 0.05 or 5%. This means that you reject the null hypothesis only when results at least as extreme as yours have a 5% chance or less of occurring if the null hypothesis is actually true.

If the p value of your test is lower than the significance level, it means your results are statistically significant and consistent with the alternative hypothesis. If your p value is higher than the significance level, then your results are considered statistically non-significant.

To reduce the Type I error probability, you can simply set a lower significance level.
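A small simulation can make the link between the significance level and the Type I error rate concrete. The sketch below is only an illustration under stated assumptions: it uses a one-sided z-test with a known standard deviation, draws every sample from a population where the null hypothesis is true, and the function names, sample size, and number of simulated studies are all hypothetical choices.

```python
import random
from statistics import NormalDist, fmean


def one_sided_p_value(sample, mu0=0.0, sigma=1.0):
    """p value of a one-sided z-test (H1: mean > mu0) with known sigma."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 1.0 - NormalDist().cdf(z)


def simulated_type_i_rate(alpha, n=20, n_studies=10_000, seed=1):
    """Share of simulated studies that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_studies):
        # The null hypothesis is true here: the population mean really is 0.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if one_sided_p_value(sample) < alpha:
            rejections += 1
    return rejections / n_studies


rate_05 = simulated_type_i_rate(0.05)
rate_01 = simulated_type_i_rate(0.01)
print(f"alpha = 0.05 -> false positive rate {rate_05:.3f}")
print(f"alpha = 0.01 -> false positive rate {rate_01:.3f}")
```

In this setup the share of false positives should land close to whichever alpha is chosen, which is why lowering alpha lowers the Type I error probability.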

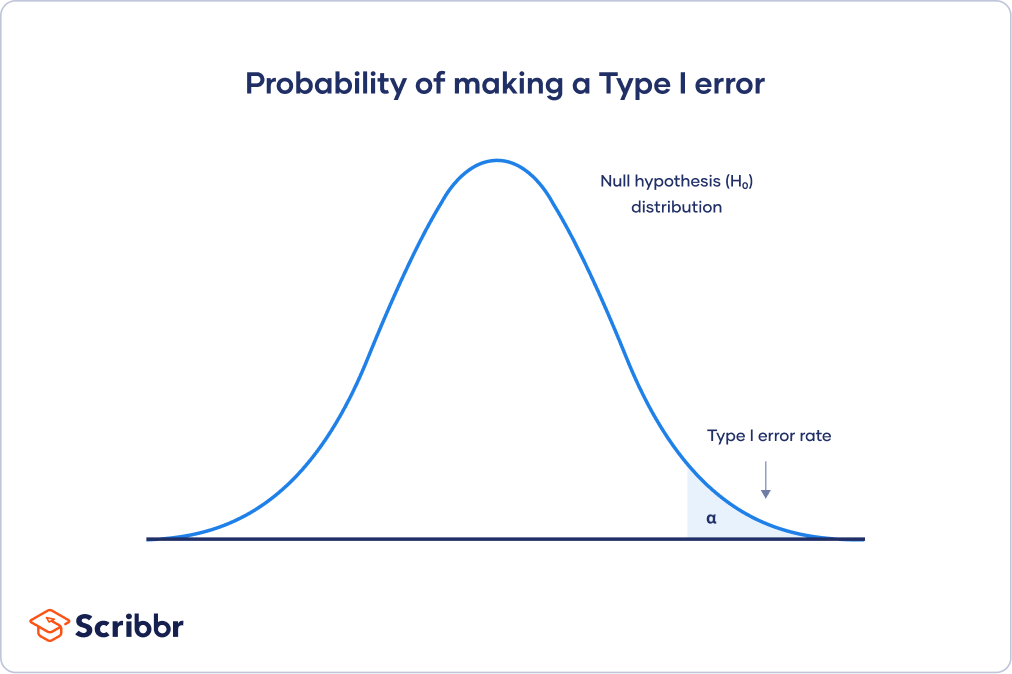

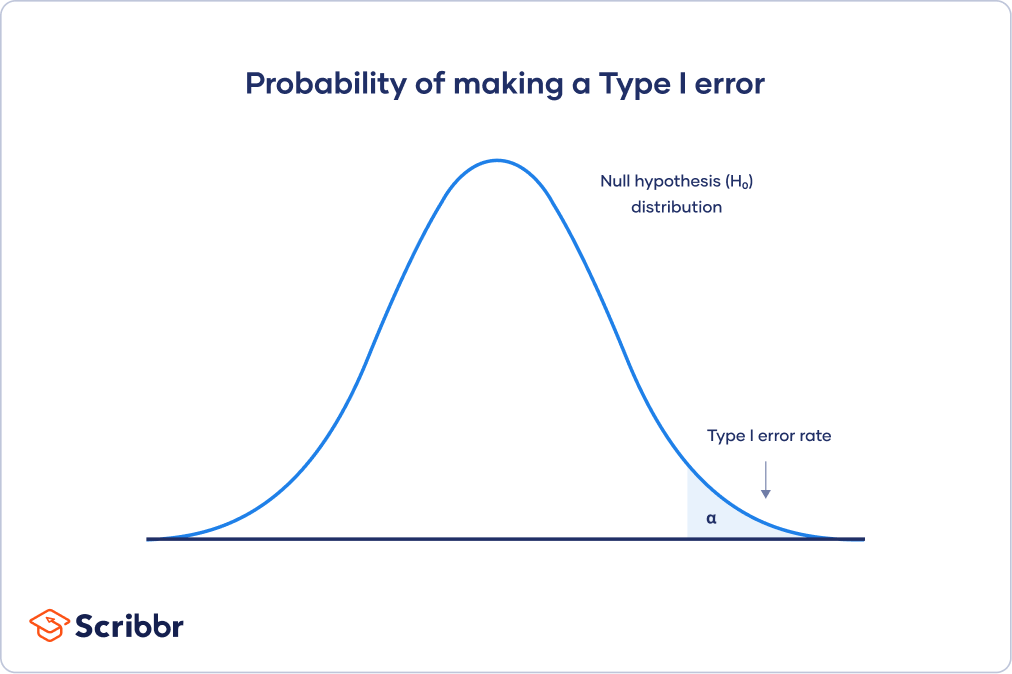

Type I error rate

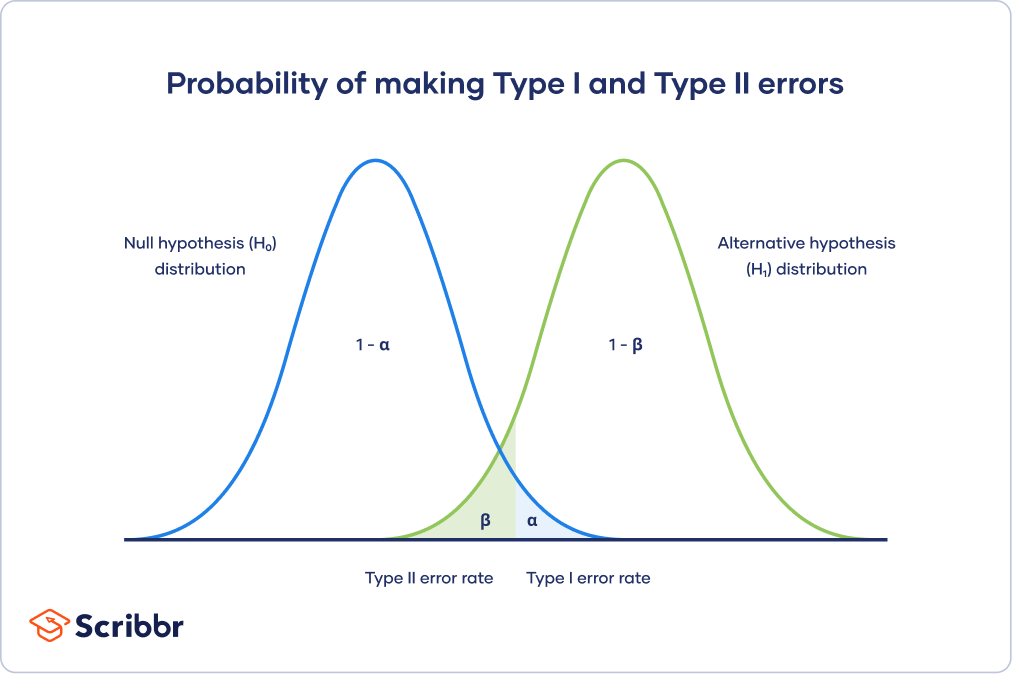

The null hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the null hypothesis were true in the population.

At the tail end, the shaded area represents alpha. It’s also called a critical region in statistics.

If your results fall in the critical region of this curve, they are considered statistically significant and the null hypothesis is rejected. However, this is a false positive conclusion, because the null hypothesis is actually true in this case!
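As a rough illustration of the critical region, the sketch below computes where it starts for a one-sided z-test. The one-sided, upper-tail setup and the function names are assumptions made for the example; a two-sided test would split alpha across both tails.

```python
from statistics import NormalDist


def critical_z(alpha):
    """z value that cuts off an upper tail of area alpha under the null curve."""
    return NormalDist().inv_cdf(1.0 - alpha)


def in_critical_region(z_statistic, alpha=0.05):
    """True if the observed z statistic falls in the shaded tail."""
    return z_statistic > critical_z(alpha)


print(round(critical_z(0.05), 3))   # boundary of the critical region for alpha = 0.05
print(in_critical_region(2.0))      # a z statistic of 2.0 is beyond the boundary
print(in_critical_region(1.0))      # a z statistic of 1.0 is not
```

Lowering alpha pushes the boundary further into the tail, so fewer results count as statistically significant.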

A Type II error means not rejecting the null hypothesis when it’s actually false. This is not quite the same as “accepting” the null hypothesis, because hypothesis testing can only tell you whether to reject the null hypothesis.

Instead, a Type II error means failing to conclude there was an effect when there actually was. In reality, your study may not have had enough statistical power to detect an effect of a certain size.

Power is the extent to which a test can correctly detect a real effect when there is one. A power level of 80% or higher is usually considered acceptable.

The risk of a Type II error is inversely related to the statistical power of a study. The higher the statistical power, the lower the probability of making a Type II error.

Statistical power is determined by:

- Size of the effect : Larger effects are more easily detected.

- Measurement error : Systematic and random errors in recorded data reduce power.

- Sample size : Larger samples reduce sampling error and increase power.

- Significance level : Increasing the significance level increases power.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level.
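The effect of sample size and significance level on power can be sketched numerically. The example assumes a one-sided, one-sample z-test with a known standard deviation, and expresses the effect as a standardized effect size (true mean difference divided by the standard deviation); the function name and the example values are hypothetical.

```python
from statistics import NormalDist


def power_one_sided_z(effect_size, n, alpha=0.05):
    """Power of a one-sided z-test for a standardized effect size and sample size n."""
    z_crit = NormalDist().inv_cdf(1.0 - alpha)
    # Under the alternative, the z statistic is centered at effect_size * sqrt(n).
    return 1.0 - NormalDist().cdf(z_crit - effect_size * n ** 0.5)


baseline = power_one_sided_z(0.5, n=25)
larger_sample = power_one_sided_z(0.5, n=50)
higher_alpha = power_one_sided_z(0.5, n=25, alpha=0.10)
print(f"n=25, alpha=0.05: power = {baseline:.3f}")
print(f"n=50, alpha=0.05: power = {larger_sample:.3f}")
print(f"n=25, alpha=0.10: power = {higher_alpha:.3f}")
```

Both changes raise power in this sketch, but only the larger sample does so without also raising the Type I error rate.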

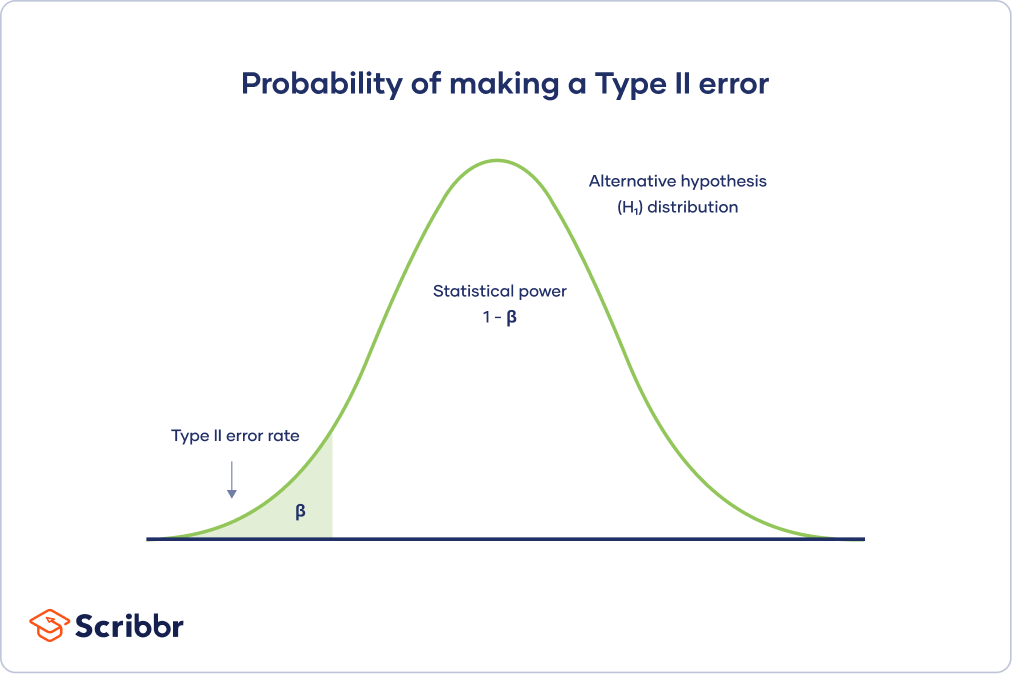

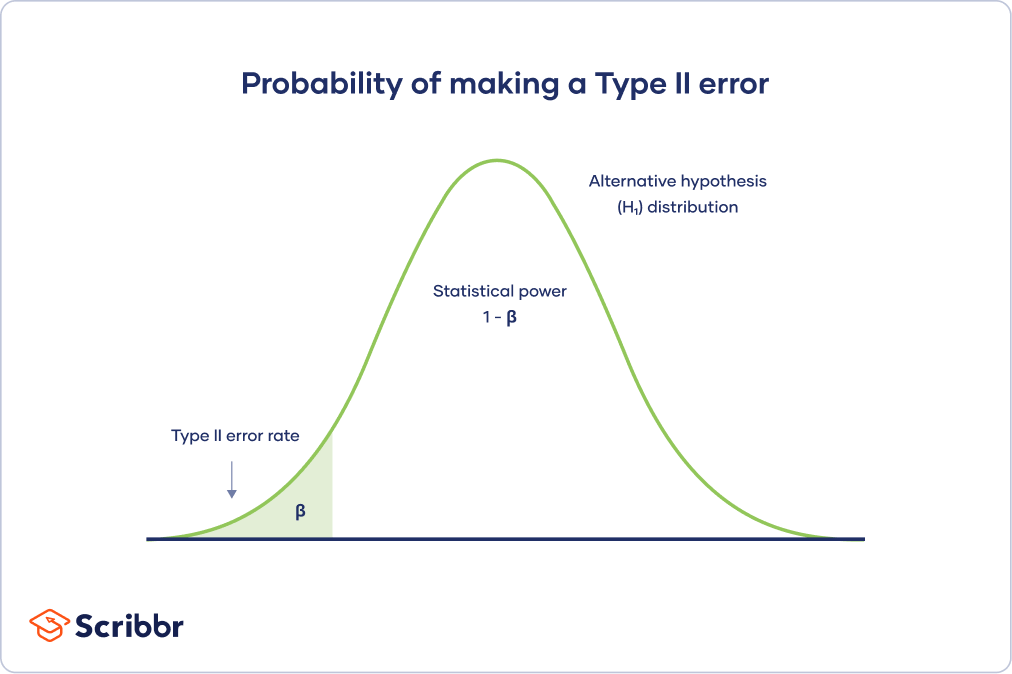

Type II error rate

The alternative hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the alternative hypothesis were true in the population.

The Type II error rate is beta (β), represented by the shaded area on the left side. The remaining area under the curve represents statistical power, which is 1 – β.

Increasing the statistical power of your test directly decreases the risk of making a Type II error.

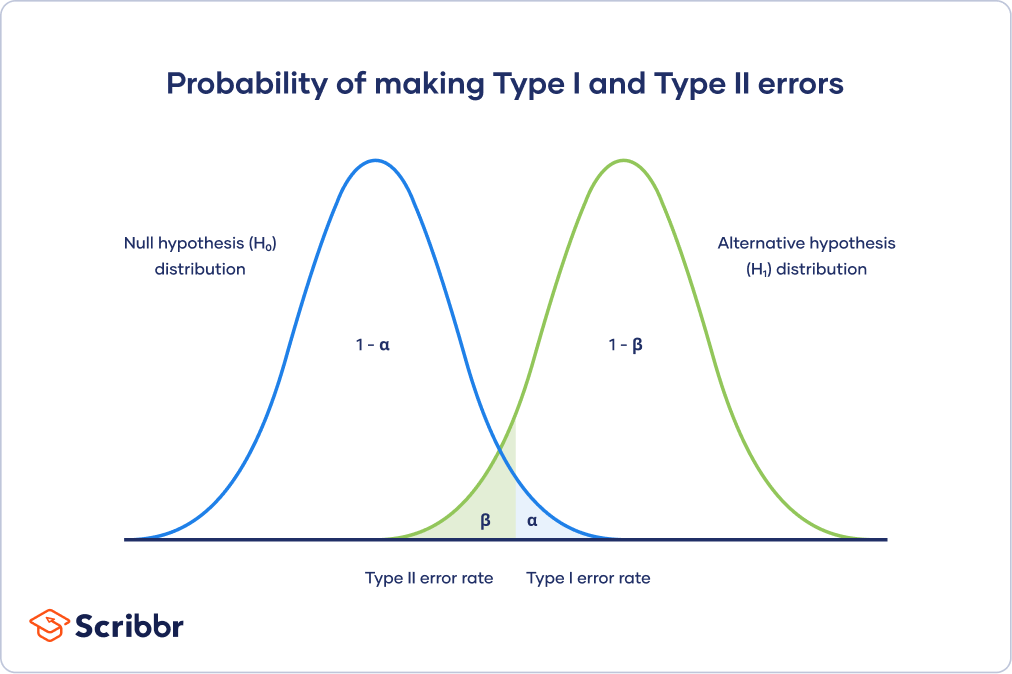

The Type I and Type II error rates influence each other. That’s because the significance level (the Type I error rate) affects statistical power, which is inversely related to the Type II error rate.

This means there’s an important tradeoff between Type I and Type II errors:

- Setting a lower significance level decreases the Type I error risk, but increases the Type II error risk.

- Increasing the power of a test by raising the significance level decreases the Type II error risk, but increases the Type I error risk.

This trade-off is visualized in the graph below. It shows two curves:

- The null hypothesis distribution shows all possible results you’d obtain if the null hypothesis is true. The correct conclusion for any point on this distribution means not rejecting the null hypothesis.

- The alternative hypothesis distribution shows all possible results you’d obtain if the alternative hypothesis is true. The correct conclusion for any point on this distribution means rejecting the null hypothesis.

Type I and Type II errors occur where these two distributions overlap. The blue shaded area represents alpha, the Type I error rate, and the green shaded area represents beta, the Type II error rate.

By setting the Type I error rate, you indirectly influence the size of the Type II error rate as well.

It’s important to strike a balance between the risks of making Type I and Type II errors. For a fixed sample size and effect size, reducing alpha always comes at the cost of increasing beta, and vice versa.
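To see the trade-off in numbers, the sketch below tabulates beta for several alpha values while holding the sample size and effect size fixed. It assumes a one-sided z-test with a known standard deviation; the standardized effect size of 0.5 and the sample size of 25 are hypothetical values chosen for illustration.

```python
from statistics import NormalDist


def beta_one_sided_z(alpha, effect_size=0.5, n=25):
    """Type II error rate of a one-sided z-test at a given significance level."""
    z_crit = NormalDist().inv_cdf(1.0 - alpha)
    return NormalDist().cdf(z_crit - effect_size * n ** 0.5)


alphas = (0.10, 0.05, 0.01, 0.001)
betas = [beta_one_sided_z(a) for a in alphas]
for a, b in zip(alphas, betas):
    print(f"alpha = {a:<5}  beta = {b:.3f}")
```

Each step down in alpha pushes beta up. Collecting a larger sample is the usual way to lower beta without giving ground on alpha.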


For statisticians, a Type I error is usually worse. In practical terms, however, either type of error could be worse depending on your research context.

A Type I error means mistakenly rejecting a null hypothesis that is actually true. This may lead to new policies, practices or treatments that are inadequate or a waste of resources.

In contrast, a Type II error means failing to reject a null hypothesis that is actually false. It may only result in missed opportunities to innovate, but these can also have important practical consequences.


In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a threshold that you set at the beginning of your study and compare against the p value, the probability of obtaining results at least as extreme as yours if the null hypothesis is true.

To reduce the Type I error probability, you can set a lower significance level.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary: it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that data at least this extreme would occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is more likely to reject a false null hypothesis, and so is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.


Bhandari, P. (2023, June 22). Type I & Type II Errors | Differences, Examples, Visualizations. Scribbr. Retrieved August 22, 2024, from https://www.scribbr.com/statistics/type-i-and-type-ii-errors/


Type 1 and Type 2 Errors in Statistics

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


A statistically significant result cannot prove that a research hypothesis is correct (which implies 100% certainty). Because a p-value is based on probabilities, there is always a chance of making an incorrect conclusion when rejecting or failing to reject the null hypothesis (H0).

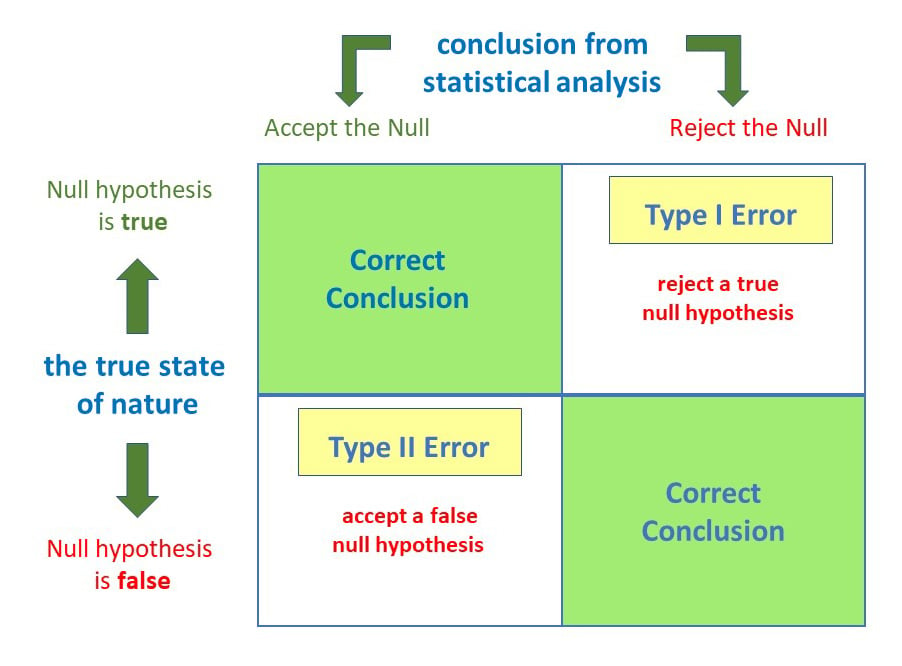

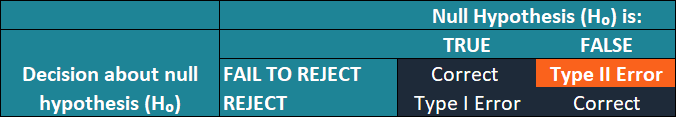

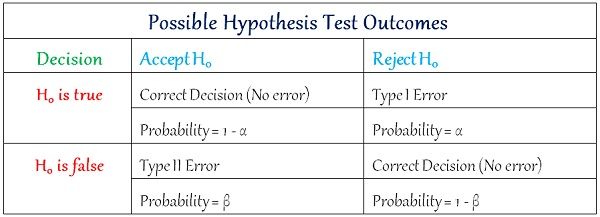

Anytime we make a decision using statistics, there are four possible outcomes, with two representing correct decisions and two representing errors.

The chances of committing these two types of errors are inversely related: holding the sample size and effect size constant, decreasing the Type I error rate increases the Type II error rate, and vice versa.

As the significance level (α) increases, it becomes easier to reject the null hypothesis, decreasing the chance of missing a real effect (Type II error, β). If the significance level (α) goes down, it becomes harder to reject the null hypothesis, increasing the chance of missing an effect while reducing the risk of falsely finding one (Type I error).

Type I error

A type 1 error is also known as a false positive and occurs when a researcher incorrectly rejects a true null hypothesis. Simply put, it’s a false alarm.

This means that you report that your findings are significant when they have occurred by chance.

The probability of making a type 1 error is represented by your alpha level (α), the threshold below which a p-value leads you to reject the null hypothesis.

An alpha level of 0.05 indicates that you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true.

You can reduce your risk of committing a type 1 error by setting a lower alpha level (like α = 0.01). For example, an alpha level of 0.01 would mean there is a 1% chance of committing a Type I error when the null hypothesis is true.

However, using a lower value for alpha means that you will be less likely to detect a true difference if one really exists (thus risking a type II error).

Scenario: Drug Efficacy Study

Imagine a pharmaceutical company is testing a new drug, named “MediCure”, to determine if it’s more effective than a placebo at reducing fever. They run an experiment with two groups: one receives MediCure, and the other receives a placebo.

- Null Hypothesis (H0) : MediCure is no more effective at reducing fever than the placebo.

- Alternative Hypothesis (H1) : MediCure is more effective at reducing fever than the placebo.

After conducting the study and analyzing the results, the researchers found a p-value of 0.04.

If they use an alpha (α) level of 0.05, this p-value is considered statistically significant, leading them to reject the null hypothesis and conclude that MediCure is more effective than the placebo.

However, MediCure has no actual effect, and the observed difference was due to random variation or some other confounding factor. In this case, the researchers have incorrectly rejected a true null hypothesis.

Error : The researchers have made a Type 1 error by concluding that MediCure is more effective when it isn’t.
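The decision rule in this scenario, and the way a decision turns into an error once the truth is known, can be written out as a short sketch. The function names are hypothetical, and in a real study the `null_is_true` argument is exactly the thing you cannot observe.

```python
def decide(p_value, alpha=0.05):
    """Apply the usual rule: reject H0 only when p is below alpha."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


def classify(p_value, null_is_true, alpha=0.05):
    """Label the outcome once the (normally unknown) truth is supplied."""
    rejected = p_value < alpha
    if rejected and null_is_true:
        return "Type I error (false positive)"
    if not rejected and not null_is_true:
        return "Type II error (false negative)"
    return "correct decision"


# The MediCure study: p = 0.04 at alpha = 0.05, but the drug has no real effect.
print(decide(0.04))
print(classify(0.04, null_is_true=True))
```

With a stricter alpha of 0.01, the same p-value of 0.04 would not have led to rejection, and this particular false positive would have been avoided.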

Implications

Resource Allocation : Making a Type I error can lead to wastage of resources. If a business believes a new strategy is effective when it’s not (based on a Type I error), they might allocate significant financial and human resources toward that ineffective strategy.

Unnecessary Interventions : In medical trials, a Type I error might lead to the belief that a new treatment is effective when it isn’t. As a result, patients might undergo unnecessary treatments, risking potential side effects without any benefit.

Reputation and Credibility : For researchers, making repeated Type I errors can harm their professional reputation. If they frequently claim groundbreaking results that are later refuted, their credibility in the scientific community might diminish.

Type II error

A type 2 error (or false negative) happens when you fail to reject the null hypothesis when it should actually be rejected.

Here, a researcher concludes there is not a significant effect when there actually is one.

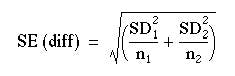

The probability of making a type II error is called Beta (β), which is related to the power of the statistical test (power = 1- β). You can decrease your risk of committing a type II error by ensuring your test has enough power.

You can do this by ensuring your sample size is large enough to detect a practical difference when one truly exists.
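One way to judge whether a sample is "large enough" is to solve for the sample size that reaches a target power. The sketch below does this for a one-sided, one-sample z-test with a known standard deviation, which is an assumption made to keep the arithmetic simple; the function name and the standardized effect sizes are hypothetical, and real studies usually rely on dedicated power-analysis software.

```python
import math
from statistics import NormalDist


def required_sample_size(effect_size, alpha=0.05, power=0.80):
    """Smallest n at which a one-sided z-test reaches the target power."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / effect_size) ** 2)


n_medium = required_sample_size(0.5)   # a moderate standardized effect
n_small = required_sample_size(0.2)    # a small standardized effect
print(n_medium, n_small)
```

Smaller effects need far larger samples to reach the same power, which is why an underpowered study can easily miss a real but modest effect.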

Scenario: Efficacy of a New Teaching Method

Educational psychologists are investigating the potential benefits of a new interactive teaching method, named “EduInteract”, which utilizes virtual reality (VR) technology to teach history to middle school students.

They hypothesize that this method will lead to better retention and understanding compared to the traditional textbook-based approach.

- Null Hypothesis (H0) : The EduInteract VR teaching method does not result in significantly better retention and understanding of history content than the traditional textbook method.

- Alternative Hypothesis (H1) : The EduInteract VR teaching method results in significantly better retention and understanding of history content than the traditional textbook method.

The researchers designed an experiment where one group of students learns a history module using the EduInteract VR method, while a control group learns the same module using a traditional textbook.

After a week, the students’ retention and understanding are tested using a standardized assessment.

Upon analyzing the results, the psychologists found a p-value of 0.06. Using an alpha (α) level of 0.05, this p-value isn’t statistically significant.

Therefore, they fail to reject the null hypothesis and conclude that the EduInteract VR method isn’t more effective than the traditional textbook approach.

However, let’s assume that in the real world, the EduInteract VR truly enhances retention and understanding, but the study failed to detect this benefit due to reasons like small sample size, variability in students’ prior knowledge, or perhaps the assessment wasn’t sensitive enough to detect the nuances of VR-based learning.

Error : By concluding that the EduInteract VR method isn’t more effective than the traditional method when it is, the researchers have made a Type 2 error.

This could prevent schools from adopting a potentially superior teaching method that might benefit students’ learning experiences.

Missed Opportunities : A Type II error can lead to missed opportunities for improvement or innovation. For example, in education, if a more effective teaching method is overlooked because of a Type II error, students might miss out on a better learning experience.

Potential Risks : In healthcare, a Type II error might mean overlooking a harmful side effect of a medication because the research didn’t detect its harmful impacts. As a result, patients might continue using a harmful treatment.

Stagnation : In the business world, making a Type II error can result in continued investment in outdated or less efficient methods. This can lead to stagnation and the inability to compete effectively in the marketplace.

How do Type I and Type II errors relate to psychological research and experiments?

Type I errors are like false alarms, while Type II errors are like missed opportunities. Both errors can impact the validity and reliability of psychological findings, so researchers strive to minimize them to draw accurate conclusions from their studies.

How does sample size influence the likelihood of Type I and Type II errors in psychological research?

Sample size in psychological research influences the likelihood of Type II errors far more than Type I errors. The Type I error rate is set by the significance level (α) that the researcher chooses, so a larger sample does not by itself make a false positive less likely when there is no effect.

A larger sample size does increase the chances of detecting true effects, reducing the likelihood of Type II errors.

Are there any ethical implications associated with Type I and Type II errors in psychological research?

Yes, there are ethical implications associated with Type I and Type II errors in psychological research.

Type I errors may lead to false positive findings, resulting in misleading conclusions and potentially wasting resources on ineffective interventions. This can harm individuals who are falsely diagnosed or receive unnecessary treatments.

Type II errors, on the other hand, may result in missed opportunities to identify important effects or relationships, leading to a lack of appropriate interventions or support. This can also have negative consequences for individuals who genuinely require assistance.

Therefore, minimizing these errors is crucial for ethical research and ensuring the well-being of participants.

Further Information

- Publication manual of the American Psychological Association

- Statistics for Psychology Book Download


Statistics By Jim

Making statistics intuitive

Type I & Type II Errors in Hypothesis Testing

By Jim Frost

In hypothesis testing, a Type I error is a false positive while a Type II error is a false negative. In this blog post, you will learn about these two types of errors, their causes, and how to manage them.

Hypothesis tests use sample data to make inferences about the properties of a population. You gain tremendous benefits by working with random samples because it is usually impossible to measure the entire population.

However, there are tradeoffs when you use samples. The samples we use are typically a minuscule percentage of the entire population. Consequently, they occasionally misrepresent the population severely enough to cause hypothesis tests to make Type I and Type II errors.

Potential Outcomes in Hypothesis Testing

Hypothesis testing is a procedure in inferential statistics that assesses two mutually exclusive theories about the properties of a population. For a generic hypothesis test, the two hypotheses are as follows:

- Null hypothesis : There is no effect

- Alternative hypothesis : There is an effect.

The sample data must provide sufficient evidence to reject the null hypothesis and conclude that the effect exists in the population. Ideally, a hypothesis test fails to reject the null hypothesis when the effect is not present in the population, and it rejects the null hypothesis when the effect exists.

Statisticians define two types of errors in hypothesis testing. Creatively, they call these errors Type I and Type II errors. Both types of error relate to incorrect conclusions about the null hypothesis.

The table summarizes the four possible outcomes for a hypothesis test.

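| | Null hypothesis is true | Null hypothesis is false |
|---|---|---|
| Reject the null | Type I error (false positive) | Correct decision (effect detected) |
| Fail to reject the null | Correct decision (no effect detected) | Type II error (false negative) |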


Fire alarm analogy for the types of errors

Using hypothesis tests correctly improves your chances of drawing trustworthy conclusions. However, errors are bound to occur.

Unlike the fire alarm analogy, there is no sure way to determine whether an error occurred after you perform a hypothesis test. Typically, a clearer picture develops over time as other researchers conduct similar studies and an overall pattern of results appears. Seeing how your results fit in with similar studies is a crucial step in assessing your study’s findings.

Now, let’s take a look at each type of error in more depth.

Type I Error: False Positives

When you see a p-value that is less than your significance level, you get excited because your results are statistically significant. However, it could be a type I error. The supposed effect might not exist in the population. Again, there is usually no warning when this occurs.

Why do these errors occur? It comes down to sample error. Your random sample has overestimated the effect by chance. It was the luck of the draw. This type of error doesn’t indicate that the researchers did anything wrong. The experimental design, data collection, data validity, and statistical analysis can all be correct, and yet this type of error still occurs.

Even though we don’t know for sure which studies have false positive results, we do know their rate of occurrence. The rate of occurrence for Type I errors equals the significance level of the hypothesis test, which is also known as alpha (α).

The significance level is an evidentiary standard that you set to determine whether your sample data are strong enough to reject the null hypothesis. Hypothesis tests define that standard using the probability of rejecting a null hypothesis that is actually true. You set this value based on your willingness to risk a false positive.


Using the significance level to set the Type I error rate

When the significance level is 0.05 and the null hypothesis is true, there is a 5% chance that the test will reject the null hypothesis incorrectly. If you set alpha to 0.01, there is a 1% chance of a false positive. If 5% is good, then 1% seems even better, right? As you’ll see, there is a tradeoff between Type I and Type II errors. If you hold everything else constant, as you reduce the chance for a false positive, you increase the opportunity for a false negative.

Type I errors are relatively straightforward. The math is beyond the scope of this article, but statisticians designed hypothesis tests to incorporate everything that affects this error rate so that you can specify it for your studies. As long as your experimental design is sound, you collect valid data, and the data satisfy the assumptions of the hypothesis test, the Type I error rate equals the significance level that you specify. However, if there is a problem in one of those areas, it can affect the false positive rate.

Warning about a potential misinterpretation of Type I errors and the Significance Level

When the null hypothesis is correct for the population, the probability that a test produces a false positive equals the significance level. However, when you look at a statistically significant test result, you cannot state that there is a 5% chance that it represents a false positive.

Why is that the case? Imagine that we perform 100 studies on a population where the null hypothesis is true. If we use a significance level of 0.05, we’d expect that five of the studies will produce statistically significant results—false positives. Afterward, when we go to look at those significant studies, what is the probability that each one is a false positive? Not 5 percent but 100%!
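The 100-studies thought experiment can be generalized with a little arithmetic. The sketch below is an illustration only: it introduces a hypothetical quantity not discussed above, the share of studied hypotheses for which the null is actually true, and assumes every test has the same alpha and the same power.

```python
def share_of_false_positives(alpha, power, share_null_true):
    """Among significant results, the expected fraction that are false positives."""
    false_positives = alpha * share_null_true
    true_positives = power * (1.0 - share_null_true)
    return false_positives / (false_positives + true_positives)


# Every null is true, as in the thought experiment: all significant results are false.
all_null = share_of_false_positives(alpha=0.05, power=0.80, share_null_true=1.0)

# Hypothetical mix: half of the nulls are true, half are false.
half_null = share_of_false_positives(alpha=0.05, power=0.80, share_null_true=0.5)

print(f"{all_null:.0%} vs {half_null:.1%}")
```

Neither figure equals the 5% significance level, which is the point of the warning: alpha describes how often a true null is rejected, not how often a significant result is wrong.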

That scenario also illustrates a point that I made earlier. The true picture becomes more evident after repeated experimentation. Given the pattern of results that are predominantly not significant, it is unlikely that an effect exists in the population.

Type II Error: False Negatives

When you perform a hypothesis test and your p-value is greater than your significance level, your results are not statistically significant. That’s disappointing because your sample provides insufficient evidence for concluding that the effect you’re studying exists in the population. However, there is a chance that the effect is present in the population even though the test results don’t support it. If that’s the case, you’ve just experienced a Type II error. The probability of making a Type II error is known as beta (β).

What causes Type II errors? Whereas Type I errors are caused by one thing, sample error, there are a host of possible reasons for Type II errors—small effect sizes, small sample sizes, and high data variability. Furthermore, unlike Type I errors, you can’t set the Type II error rate for your analysis. Instead, the best that you can do is estimate it before you begin your study by approximating properties of the alternative hypothesis that you’re studying. When you do this type of estimation, it’s called power analysis.

To estimate the Type II error rate, you create a hypothetical probability distribution that represents the properties of a true alternative hypothesis. However, when you’re performing a hypothesis test, you typically don’t know which hypothesis is true, much less the specific properties of the distribution for the alternative hypothesis. Consequently, the true Type II error rate is usually unknown!

Type II errors and the power of the analysis

The Type II error rate (beta) is the probability of a false negative. Therefore, the complement of the Type II error rate is the probability of correctly detecting an effect. Statisticians refer to this concept as the power of a hypothesis test. Consequently, 1 – β = the statistical power. Analysts typically estimate power rather than beta directly.

If you read my post about power and sample size analysis, you know that the three factors that affect power are sample size, variability in the population, and the effect size. As you design your experiment, you can enter estimates of these three factors into statistical software and it calculates the estimated power for your test.

Suppose you perform a power analysis for an upcoming study and calculate an estimated power of 90%. For this study, the estimated Type II error rate is 10% (1 – 0.9). Keep in mind that variability and effect size are based on estimates and guesses. Consequently, power and the Type II error rate are just estimates rather than something you set directly. These estimates are only as good as the inputs into your power analysis.

Low variability and larger effect sizes decrease the Type II error rate, which increases the statistical power. However, researchers usually have less control over those aspects of a hypothesis test. Typically, researchers have the most control over sample size, making it the critical way to manage your Type II error rate. Holding everything else constant, increasing the sample size reduces the Type II error rate and increases power.


Graphing Type I and Type II Errors

The graph below illustrates the two types of errors using two sampling distributions. The critical region line represents the point at which you reject or fail to reject the null hypothesis. Of course, when you perform the hypothesis test, you don’t know which hypothesis is correct. And, the properties of the distribution for the alternative hypothesis are usually unknown. However, use this graph to understand the general nature of these errors and how they are related.

The distribution on the left represents the null hypothesis. If the null hypothesis is true, you only need to worry about Type I errors, which is the shaded portion of the null hypothesis distribution. The rest of the null distribution represents the correct decision of failing to reject the null.

On the other hand, if the alternative hypothesis is true, you need to worry about Type II errors. The shaded region on the alternative hypothesis distribution represents the Type II error rate. The rest of the alternative distribution represents the probability of correctly detecting an effect—power.

Moving the critical value line is equivalent to changing the significance level. If you move the line to the left, you’re increasing the significance level (e.g., α 0.05 to 0.10). Holding everything else constant, this adjustment increases the Type I error rate while reducing the Type II error rate. Moving the line to the right reduces the significance level (e.g., α 0.05 to 0.01), which decreases the Type I error rate but increases the type II error rate.
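The relationship between the critical value and the two error rates can be written down under some simplifying assumptions. Suppose the test is one-sided, both sampling distributions are normal with the same standard error $SE$, the null distribution is centered at $\mu_0$, and the alternative is centered at $\mu_1 > \mu_0$. These symbols and the critical value $c$ are introduced here for illustration only. With $\Phi$ denoting the standard normal cumulative distribution function:

$$
\alpha = P(\bar{X} > c \mid H_0) = 1 - \Phi\!\left(\frac{c - \mu_0}{SE}\right),
\qquad
\beta = P(\bar{X} \le c \mid H_1) = \Phi\!\left(\frac{c - \mu_1}{SE}\right),
\qquad
\text{power} = 1 - \beta .
$$

Because $\Phi$ is increasing, moving $c$ to the left makes $\alpha$ larger and $\beta$ smaller, and moving it to the right does the opposite.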

Is One Error Worse Than the Other?

As you’ve seen, the nature of the two types of error, their causes, and the certainty of their rates of occurrence are all very different.

A common question is whether one type of error is worse than the other. Statisticians designed hypothesis tests to control Type I errors while Type II errors are much less defined. Consequently, many statisticians state that it is better to fail to detect an effect when it exists than it is to conclude an effect exists when it doesn’t. That is to say, there is a tendency to assume that Type I errors are worse.

However, reality is more complex than that. You should carefully consider the consequences of each type of error for your specific test.

Suppose you are assessing the strength of a new jet engine part that is under consideration. People’s lives are riding on the part’s strength. A false negative in this scenario merely means that the part is strong enough but the test fails to detect it. This situation does not put anyone’s life at risk. On the other hand, Type I errors are worse in this situation because they indicate the part is strong enough when it is not.

Now suppose that the jet engine part is already in use but there are concerns about it failing. In this case, you want the test to be more sensitive to detecting problems even at the risk of false positives. Type II errors are worse in this scenario because the test fails to recognize the problem and leaves these problematic parts in use for longer.

Using hypothesis tests effectively requires that you understand their error rates. By setting the significance level and estimating your test’s power, you can manage both error rates so they meet your requirements.

The error rates in this post are all for individual tests. If you need to perform multiple comparisons, such as comparing group means in ANOVA, you’ll need to use post hoc tests to control the experiment-wise error rate or use the Bonferroni correction .


Reader Interactions

June 4, 2024 at 2:04 pm

Very informative.

June 9, 2023 at 9:54 am

Hi Jim- I just signed up for your newsletter and this is my first question to you. I am not a statistician but work with them in my professional life as a QC consultant in biopharmaceutical development. I have a question about Type I and Type II errors in the realm of equivalence testing using two one sided difference testing (TOST). In a recent 2020 publication that I co-authored with a statistician, we stated that the probability of concluding non-equivalence when that is the truth, (which is the opposite of power, the probability of concluding equivalence when it is correct) is 1-2*alpha. This made sense to me because one uses a 90% confidence interval on a mean to evaluate whether the result is within established equivalence bounds with an alpha set to 0.05. However, it appears that specificity (1-alpha) is always the case as is power always being 1-beta. For equivalence testing the latter is 1-2*beta/2 but for specificity it stays as 1-alpha because only one of the null hypotheses in a two-sided test can fail at one time. I still see 1-2*alpha as making more sense as we show in Figure 3 of our paper which shows the white space under the distribution of the alternative hypothesis as 1-2 alpha. The paper can be downloaded as open access here if that would make my question more clear. https://bioprocessingjournal.com/index.php/article-downloads/890-vol-19-open-access-2020-defining-therapeutic-window-for-viral-vectors-a-statistical-framework-to-improve-consistency-in-assigning-product-dose-values I have consulted with other statistical colleagues and cannot get consensus so I would love your opinion and explanation! Thanks in advance!

June 10, 2023 at 1:00 am

Let me preface my response by saying that I’m not an expert in equivalence testing. But here’s my best guess about your question.

The alpha is for each of the hypothesis tests. Each one has a type I error rate of 0.05. Or, as you say, a specificity of 1-alpha. However, there are two tests so we need to consider the family-wise error rate. The formula is the following:

FWER = 1 – (1 – α)^N

Where N is the number of hypothesis tests.

For two tests, there’s a family-wise error rate of 0.0975. Or a family-wise specificity of 0.9025.
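The arithmetic above can be reproduced with a short sketch. Note that this formula holds when the tests are independent, which is an assumption here; the function name is hypothetical.

```python
def family_wise_error_rate(alpha, n_tests):
    """FWER = 1 - (1 - alpha)^N, assuming N independent tests."""
    return 1 - (1 - alpha) ** n_tests

fwer = family_wise_error_rate(0.05, 2)
print(round(fwer, 4))      # family-wise error rate for two tests
print(round(1 - fwer, 4))  # family-wise specificity
```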

However, I believe they use 90% CI for a different reason (although it’s a very close match to the family-wise error rate). The 90% CI provides consistent results with the two one-side 95% tests. In other words, if the 90% CI is within the equivalency bounds, then the two tests will be significant. If the CI extends above the upper bound, the corresponding test won’t be significant. Etc.
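The consistency between the 90% CI and the two one-sided 95% tests can be checked numerically. The sketch below is an illustration only: it assumes a normally distributed estimate with a known standard error (a z-based version rather than the t-based procedure typically used in practice), symmetric equivalence bounds, and hypothetical function names.

```python
from statistics import NormalDist

def tost_rejects_both(diff, se, bound, alpha=0.05):
    """True if both one-sided z-tests reject at level alpha.
    Equivalence bounds are assumed symmetric: (-bound, +bound)."""
    z_crit = NormalDist().inv_cdf(1 - alpha)
    lower_test = (diff + bound) / se > z_crit   # rejects H0: true diff <= -bound
    upper_test = (diff - bound) / se < -z_crit  # rejects H0: true diff >= +bound
    return lower_test and upper_test

def ci_within_bounds(diff, se, bound, alpha=0.05):
    """True if the (1 - 2*alpha) CI, i.e. 90% for alpha = 0.05,
    lies entirely inside the equivalence bounds."""
    z_crit = NormalDist().inv_cdf(1 - alpha)
    lo, hi = diff - z_crit * se, diff + z_crit * se
    return -bound < lo and hi < bound

# The two decision rules agree across a grid of observed differences.
for i in range(-20, 21):
    d = i / 10
    assert tost_rejects_both(d, 0.5, 1.0) == ci_within_bounds(d, 0.5, 1.0)
```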

However, using either rationale, I’d say the overall type I error rate is about 0.1.

I hope that answers your question. And, again, I’m not an expert in this particular test.

July 18, 2022 at 5:15 am

Thank you for your valuable content. I have a question regarding correcting for multiple tests. My question is: for exactly how many tests should I correct in the scenario below?

Background: I’m testing for differences between groups A (patient group) and B (control group) in variable X. Variable X is a biological variable present in the body’s left and right side. Variable Y is a questionnaire for group A.

Step 1. Is there a significant difference within groups in the weight of left and right variable X? (I will conduct two paired sample t-tests)

If I find a significant difference in step 1, then I will conduct steps 2A and 2B. However, if I don’t find a significant difference in step 1, then I will only conduct step 2C.

Step 2A. Is there a significant difference between groups in left variable X? (I will conduct one independent sample t-test) Step 2B. Is there a significant difference between groups in right variable X? (I will conduct one independent sample t-test)

Step 2C. Is there a significant difference between groups in total variable X (left + right variable X)? (I will conduct one independent sample t-test)

If I find a significant difference in step 1, then I will conduct steps 3A and 3B. However, if I don’t find a significant difference in step 1, then I will only conduct step 3C.

Step 3A. Is there a significant correlation between left variable X in group A and variable Y? (I will conduct Pearson correlation) Step 3B. Is there a significant correlation between right variable X in group A and variable Y? (I will conduct Pearson correlation)

Step 3C. Is there a significant correlation between total variable X in group A and variable Y? (I will conduct a Pearson correlation)

Regards, De

January 2, 2021 at 1:57 pm

I should say that being a budding statistician, this site seems to be pretty reliable. I have few doubts in here. It would be great if you can clarify it:

“A significance level of 0.05 indicates a 5% risk of concluding that a difference exists when there is no actual difference. ”

My understanding: When we say that the significance level is 0.05, it means we are taking a 5% risk of supporting the alternative hypothesis even though there is no difference? (I think I am not allowed to say the null is true, because the null is only assumed to be true. Right?)

January 2, 2021 at 6:48 pm

The sentence as I write it is correct. Here’s a simple way to understand it. Imagine you’re conducting a computer simulation where you control the population parameters and have the computer draw random samples from the populations that you define. Now, imagine you draw samples from two populations where the means and standard deviations are equal. You know this for a fact because you set the parameters yourself. Then you conduct a series of 2-sample t-tests.

In this example, you know the null hypothesis is correct. However, thanks to random sampling error, some proportion of the t-tests will have statistically significant results (i.e., false positives or Type I errors). The proportion of false positives will equal your significance level over the long run.

Of course, in real-world experiments, you never know for sure whether the null is true or not. However, given the properties of the hypothesis, you do know what proportion of tests will give you a false positive IF the null is true–and that’s the significance level.
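The simulation described above can be sketched in a few lines. To stay within the Python standard library, this version swaps the 2-sample t-test for a two-sample z-test with a known standard deviation of 1; the sample size, number of repetitions, seed, and function name are all illustrative assumptions.

```python
import random
from statistics import NormalDist, fmean

def false_positive_rate(n_tests=5000, n=30, alpha=0.05, seed=1):
    """Draw two samples from identical populations (so the null is true)
    and count how often a two-sided z-test rejects it anyway."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = (2 / n) ** 0.5  # standard error of the mean difference, sigma = 1 known
    rejections = 0
    for _ in range(n_tests):
        a = [rng.gauss(0, 1) for _ in range(n)]
        b = [rng.gauss(0, 1) for _ in range(n)]
        z = (fmean(a) - fmean(b)) / se
        if abs(z) > z_crit:
            rejections += 1  # a Type I error, because the null is true here
    return rejections / n_tests

print(false_positive_rate())  # should land close to alpha
```

With more repetitions the observed proportion settles closer to the chosen significance level.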

I’m thinking through the wording of how you wrote it and I believe it is equivalent to what I wrote. If there is no difference (the null is true), then you have a 5% chance of incorrectly supporting the alternative. And, again, you’re correct that in the real world you don’t know for sure whether the null is true. But, you can still know the false positive (Type I) error rate. For more information about that property, read my post about how hypothesis tests work .

July 9, 2018 at 11:43 am

I like to use the analogy of a trial. The null hypothesis is that the defendant is innocent. A type I error would be convicting an innocent person and a type II error would be acquitting a guilty one. I like to think that our system makes a type I error very unlikely with the trade off being that a type II error is greater.

July 9, 2018 at 12:03 pm

Hi Doug, I think that is an excellent analogy on multiple levels. As you mention, a trial would set a high bar for the significance level by choosing a very low value for alpha. This helps prevent innocent people from being convicted (Type I error) but does increase the probability of allowing the guilty to go free (Type II error). I often refer to the significance level as an evidentiary standard with this legalistic analogy in mind.

Additionally, in the justice system in the U.S., there is a presumption of innocence and the prosecutor must present sufficient evidence to prove that the defendant is guilty. That’s just like in a hypothesis test where the assumption is that the null hypothesis is true and your sample must contain sufficient evidence to be able to reject the null hypothesis and suggest that the effect exists in the population.

This analogy even works for the similarities behind the phrases “Not guilty” and “Fail to reject the null hypothesis.” In both cases, you aren’t proving innocence or that the null hypothesis is true. When a defendant is “not guilty” it might be that the evidence was insufficient to convince the jury. In a hypothesis test, when you fail to reject the null hypothesis, it’s possible that an effect exists in the population but you have insufficient evidence to detect it. Perhaps the effect exists but the sample size or effect size is too small, or the variability might be too high.


Type I & Type II Errors | Differences, Examples, Visualizations

Published on 18 January 2021 by Pritha Bhandari . Revised on 2 February 2023.

In statistics, a Type I error is a false positive conclusion, while a Type II error is a false negative conclusion.

Making a statistical decision always involves uncertainties, so the risks of making these errors are unavoidable in hypothesis testing.

The probability of making a Type I error is the significance level, or alpha (α), while the probability of making a Type II error is beta (β). These risks can be minimized through careful planning in your study design.

- Type I error (false positive): the test result says you have coronavirus, but you actually don’t.

- Type II error (false negative): the test result says you don’t have coronavirus, but you actually do.

Table of contents

- Error in statistical decision-making

- Type I error

- Type II error

- Trade-off between Type I and Type II errors

- Is a Type I or Type II error worse?

- Frequently asked questions about Type I and II errors

Using hypothesis testing, you can make decisions about whether your data support or refute your research predictions with null and alternative hypotheses.

Hypothesis testing starts with the assumption of no difference between groups or no relationship between variables in the population; this is the null hypothesis. It’s always paired with an alternative hypothesis, which is your research prediction of an actual difference between groups or a true relationship between variables.

In this case:

- The null hypothesis (H₀) is that the new drug has no effect on symptoms of the disease.

- The alternative hypothesis (H₁) is that the drug is effective for alleviating symptoms of the disease.

Then, you decide whether the null hypothesis can be rejected based on your data and the results of a statistical test. Since these decisions are based on probabilities, there is always a risk of making the wrong conclusion.

- If your results show statistical significance, that means they are very unlikely to occur if the null hypothesis is true. In this case, you would reject your null hypothesis. But sometimes, this may actually be a Type I error.

- If your findings do not show statistical significance, they have a high chance of occurring if the null hypothesis is true. Therefore, you fail to reject your null hypothesis. But sometimes, this may be a Type II error.

A Type I error means rejecting the null hypothesis when it’s actually true. It means concluding that results are statistically significant when, in reality, they came about purely by chance or because of unrelated factors.

The risk of committing this error is the significance level (alpha or α) you choose. That’s a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

The significance level is usually set at 0.05 or 5%. This means that your results only have a 5% chance of occurring, or less, if the null hypothesis is actually true.

If the p value of your test is lower than the significance level, it means your results are statistically significant and consistent with the alternative hypothesis. If your p value is higher than the significance level, then your results are considered statistically non-significant.
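The decision rule above can be written out as a short sketch. It assumes a test statistic that follows a standard normal distribution under the null hypothesis (a z-test) and a two-sided alternative; the function names are hypothetical.

```python
from statistics import NormalDist

def two_sided_p_value(z):
    """Probability, under H0, of a z statistic at least this extreme."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

def decide(p_value, alpha=0.05):
    """Compare the p value with the significance level chosen in advance."""
    return "reject H0" if p_value < alpha else "fail to reject H0"

for z in (1.0, 2.5):
    p = two_sided_p_value(z)
    print(f"z={z}: p={p:.4f} -> {decide(p)}")
```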

To reduce the Type I error probability, you can simply set a lower significance level.

Type I error rate

The null hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the null hypothesis were true in the population.

At the tail end, the shaded area represents alpha. It’s also called a critical region in statistics.

If your results fall in the critical region of this curve, they are considered statistically significant and the null hypothesis is rejected. However, this is a false positive conclusion, because the null hypothesis is actually true in this case!

A Type II error means not rejecting the null hypothesis when it’s actually false. This is not quite the same as “accepting” the null hypothesis, because hypothesis testing can only tell you whether to reject the null hypothesis.

Instead, a Type II error means failing to conclude there was an effect when there actually was. In reality, your study may not have had enough statistical power to detect an effect of a certain size.

Power is the extent to which a test can correctly detect a real effect when there is one. A power level of 80% or higher is usually considered acceptable.

The risk of a Type II error is inversely related to the statistical power of a study. The higher the statistical power, the lower the probability of making a Type II error.

Statistical power is determined by:

- Size of the effect : Larger effects are more easily detected.

- Measurement error : Systematic and random errors in recorded data reduce power.

- Sample size : Larger samples reduce sampling error and increase power.

- Significance level : Increasing the significance level increases power.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level.
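The factors listed above can be explored with a rough power calculation. This sketch assumes a two-sided, two-sample z-test with equal group sizes and a standardized effect size (mean difference divided by the common standard deviation); the function name and the example values are illustrative, not taken from the article.

```python
from statistics import NormalDist

def power_two_sample(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-sample z-test.
    effect_size is the standardized mean difference (an assumption)."""
    std = NormalDist()
    z_crit = std.inv_cdf(1 - alpha / 2)
    shift = effect_size * (n_per_group / 2) ** 0.5  # expected z under the alternative
    # Probability that the statistic lands in either rejection tail.
    return (1 - std.cdf(z_crit - shift)) + std.cdf(-z_crit - shift)

print(f"baseline:        {power_two_sample(0.5, 64):.3f}")
print(f"larger sample:   {power_two_sample(0.5, 128):.3f}")
print(f"larger effect:   {power_two_sample(0.8, 64):.3f}")
print(f"higher alpha:    {power_two_sample(0.5, 64, alpha=0.10):.3f}")
```

The Type II error rate in each scenario is one minus the printed power.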

Type II error rate

The alternative hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the alternative hypothesis were true in the population.

The Type II error rate is beta (β), represented by the shaded area on the left side. The remaining area under the curve represents statistical power, which is 1 – β.

Increasing the statistical power of your test directly decreases the risk of making a Type II error.

The Type I and Type II error rates influence each other. That’s because the significance level (the Type I error rate) affects statistical power, which is inversely related to the Type II error rate.

This means there’s an important tradeoff between Type I and Type II errors:

- Setting a lower significance level decreases the Type I error risk, but increases the Type II error risk.

- Increasing the power of a test by raising the significance level decreases the Type II error risk, but increases the Type I error risk.

This trade-off is visualized in the graph below. It shows two curves:

- The null hypothesis distribution shows all possible results you’d obtain if the null hypothesis is true. The correct conclusion for any point on this distribution means not rejecting the null hypothesis.

- The alternative hypothesis distribution shows all possible results you’d obtain if the alternative hypothesis is true. The correct conclusion for any point on this distribution means rejecting the null hypothesis.

Type I and Type II errors occur where these two distributions overlap. The blue shaded area represents alpha, the Type I error rate, and the green shaded area represents beta, the Type II error rate.

By setting the Type I error rate, you indirectly influence the size of the Type II error rate as well.

It’s important to strike a balance between the risks of making Type I and Type II errors. With the sample size and effect size held fixed, reducing alpha comes at the cost of increasing beta, and vice versa.

For statisticians, a Type I error is usually worse. In practical terms, however, either type of error could be worse depending on your research context.

A Type I error means mistakenly going against the main statistical assumption of a null hypothesis. This may lead to new policies, practices or treatments that are inadequate or a waste of resources.

In contrast, a Type II error means failing to reject a null hypothesis that is actually false. It may only result in missed opportunities to innovate, but these can also have important practical consequences.

In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

To reduce the Type I error probability, you can set a lower significance level.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary: it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that the data is likely to occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

Cite this Scribbr article


Bhandari, P. (2023, February 02). Type I & Type II Errors | Differences, Examples, Visualizations. Scribbr. Retrieved 21 August 2024, from https://www.scribbr.co.uk/stats/type-i-and-type-ii-error/



- Ind Psychiatry J

- v.18(2); Jul-Dec 2009

Hypothesis testing, type I and type II errors

Amitav Banerjee, U. B. Chitnis, S. L. Jadhav, J. S. Bhawalkar, S. Chaudhury

Department of Community Medicine, D. Y. Patil Medical College, Pune, India

1 Department of Psychiatry, RINPAS, Kanke, Ranchi, India

Hypothesis testing is an important activity of empirical research and evidence-based medicine. A well worked up hypothesis is half the answer to the research question. For this, both knowledge of the subject derived from extensive review of the literature and working knowledge of basic statistical concepts are desirable. The present paper discusses the methods of working up a good hypothesis and statistical concepts of hypothesis testing.

Karl Popper is probably the most influential philosopher of science in the 20th century (Wulff et al., 1986). Many scientists, even those who do not usually read books on philosophy, are acquainted with the basic principles of his views on science. The popularity of Popper’s philosophy is due partly to the fact that it has been well explained in simple terms by, among others, the Nobel Prize winner Peter Medawar (Medawar, 1969). Popper makes the very important point that empirical scientists (those who stress observations alone as the starting point of research) put the cart in front of the horse when they claim that science proceeds from observation to theory, since there is no such thing as a pure observation which does not depend on theory. Popper states, “… the belief that we can start with pure observation alone, without anything in the nature of a theory, is absurd: As may be illustrated by the story of the man who dedicated his life to natural science, wrote down everything he could observe, and bequeathed his ‘priceless’ collection of observations to the Royal Society to be used as inductive (empirical) evidence.”

STARTING POINT OF RESEARCH: HYPOTHESIS OR OBSERVATION?

The first step in the scientific process is not observation but the generation of a hypothesis which may then be tested critically by observations and experiments. Popper also makes the important claim that the goal of the scientist’s efforts is not the verification but the falsification of the initial hypothesis. It is logically impossible to verify the truth of a general law by repeated observations, but, at least in principle, it is possible to falsify such a law by a single observation. Repeated observations of white swans did not prove that all swans are white, but the observation of a single black swan sufficed to falsify that general statement (Popper, 1976).

CHARACTERISTICS OF A GOOD HYPOTHESIS

A good hypothesis must be based on a good research question. It should be simple, specific and stated in advance (Hulley et al., 2001).

Hypothesis should be simple

A simple hypothesis contains one predictor and one outcome variable, e.g., positive family history of schizophrenia increases the risk of developing the condition in first-degree relatives. Here the single predictor variable is positive family history of schizophrenia and the outcome variable is schizophrenia. A complex hypothesis contains more than one predictor variable or more than one outcome variable, e.g., a positive family history and stressful life events are associated with an increased incidence of Alzheimer’s disease. Here there are 2 predictor variables, i.e., positive family history and stressful life events, and one outcome variable, i.e., Alzheimer’s disease. Complex hypotheses like this cannot be easily tested with a single statistical test and should always be separated into 2 or more simple hypotheses.

Hypothesis should be specific

A specific hypothesis leaves no ambiguity about the subjects and variables, or about how the test of statistical significance will be applied. It uses concise operational definitions that summarize the nature and source of the subjects and the approach to measuring variables (for example: history of medication with tranquilizers, as measured by review of medical store records and physicians’ prescriptions in the past year, is more common in patients who attempted suicide than in controls hospitalized for other conditions). This is a long-winded sentence, but it explicitly states the nature of predictor and outcome variables, how they will be measured and the research hypothesis. Often these details may be included in the study proposal and may not be stated in the research hypothesis. However, they should be clear in the mind of the investigator while conceptualizing the study.

Hypothesis should be stated in advance

The hypothesis must be stated in writing during the proposal stage. This will help to keep the research effort focused on the primary objective and create a stronger basis for interpreting the study’s results as compared to a hypothesis that emerges as a result of inspecting the data. The habit of post hoc hypothesis testing (common among researchers) is nothing but using third-degree methods on the data (data dredging), to yield at least something significant. This leads to overrating the occasional chance associations in the study.

TYPES OF HYPOTHESES

For the purpose of testing statistical significance, hypotheses are classified by the way they describe the expected difference between the study groups.

Null and alternative hypotheses

The null hypothesis states that there is no association between the predictor and outcome variables in the population (for example: there is no difference between the tranquilizer habits of patients with attempted suicides and those of age- and sex-matched “control” patients hospitalized for other diagnoses). The null hypothesis is the formal basis for testing statistical significance. By starting with the proposition that there is no association, statistical tests can estimate the probability that an observed association could be due to chance.

The proposition that there is an association — that patients with attempted suicides will report different tranquilizer habits from those of the controls — is called the alternative hypothesis. The alternative hypothesis cannot be tested directly; it is accepted by exclusion if the test of statistical significance rejects the null hypothesis.

One- and two-tailed alternative hypotheses

A one-tailed (or one-sided) hypothesis specifies the direction of the association between the predictor and outcome variables. The prediction that patients with attempted suicides will have a higher rate of use of tranquilizers than control patients is a one-tailed hypothesis. A two-tailed hypothesis states only that an association exists; it does not specify the direction. The prediction that patients with attempted suicides will have a different rate of tranquilizer use (either higher or lower than control patients) is a two-tailed hypothesis. (The word tails refers to the tail ends of the statistical distribution, such as the familiar bell-shaped normal curve, that is used to test a hypothesis. One tail represents a positive effect or association; the other, a negative effect.) A one-tailed hypothesis has the statistical advantage of permitting a smaller sample size than a two-tailed hypothesis requires. Unfortunately, one-tailed hypotheses are not always appropriate; in fact, some investigators believe that they should never be used. However, they are appropriate when only one direction for the association is important or biologically meaningful. An example is the one-sided hypothesis that a drug has a greater frequency of side effects than a placebo; the possibility that the drug has fewer side effects than the placebo is not worth testing. Whatever strategy is used, it should be stated in advance; otherwise, it would lack statistical rigor. Dredging the data after they have been collected, and deciding post hoc to switch to one-tailed hypothesis testing in order to reduce the sample size and P value, indicate a lack of scientific integrity.
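The sample-size advantage of a one-tailed hypothesis can be illustrated with a standard normal-approximation formula. This is a sketch under stated assumptions: a comparison of two group means with equal group sizes, a standardized effect size of 0.5, α = 0.05, and 80% power. None of these values come from the paper, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(effect_size, alpha=0.05, power=0.80, two_sided=True):
    """Approximate subjects needed per group to compare two means.
    effect_size is the standardized mean difference (an assumption)."""
    tail_area = alpha / 2 if two_sided else alpha
    z_alpha = NormalDist().inv_cdf(1 - tail_area)
    z_beta = NormalDist().inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)

two_tailed = n_per_group(0.5, two_sided=True)
one_tailed = n_per_group(0.5, two_sided=False)
print(f"two-tailed: {two_tailed} per group, one-tailed: {one_tailed} per group")
```

The saving only applies when the one-tailed choice is justified and stated before the data are collected.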

STATISTICAL PRINCIPLES OF HYPOTHESIS TESTING

A hypothesis (for example, Tamiflu [oseltamivir], drug of choice in H1N1 influenza, is associated with an increased incidence of acute psychotic manifestations) is either true or false in the real world. Because the investigator cannot study all people who are at risk, he must test the hypothesis in a sample of that target population. No matter how much data a researcher collects, he can never absolutely prove (or disprove) his hypothesis. There will always be a need to draw inferences about phenomena in the population from events observed in the sample (Hulley et al., 2001). In some ways, the investigator’s problem is similar to that faced by a judge judging a defendant (Table 1). The absolute truth whether the defendant committed the crime cannot be determined. Instead, the judge begins by presuming innocence: the defendant did not commit the crime. The judge must decide whether there is sufficient evidence to reject the presumed innocence of the defendant; the standard is known as beyond a reasonable doubt. A judge can err, however, by convicting a defendant who is innocent, or by failing to convict one who is actually guilty. In similar fashion, the investigator starts by presuming the null hypothesis, or no association between the predictor and outcome variables in the population. Based on the data collected in his sample, the investigator uses statistical tests to determine whether there is sufficient evidence to reject the null hypothesis in favor of the alternative hypothesis that there is an association in the population. The standard for these tests is known as the level of statistical significance.

Table 1: The analogy between a judge’s decisions and statistical tests

| Judge’s decision | Statistical test |
|---|---|
| Innocence: The defendant did not commit the crime | Null hypothesis: No association between Tamiflu and psychotic manifestations |
| Guilt: The defendant did commit the crime | Alternative hypothesis: There is an association between Tamiflu and psychosis |
| Standard for rejecting innocence: Beyond a reasonable doubt | Standard for rejecting null hypothesis: Level of statistical significance (α) |
| Correct judgment: Convict a criminal | Correct inference: Conclude that there is an association when one does exist in the population |
| Correct judgment: Acquit an innocent person | Correct inference: Conclude that there is no association between Tamiflu and psychosis when one does not exist |
| Incorrect judgment: Convict an innocent person | Incorrect inference (Type I error): Conclude that there is an association when there actually is none |
| Incorrect judgment: Acquit a criminal | Incorrect inference (Type II error): Conclude that there is no association when there actually is one |

TYPE I (ALSO KNOWN AS ‘α’) AND TYPE II (ALSO KNOWN AS ‘β’) ERRORS

Just like a judge’s conclusion, an investigator’s conclusion may be wrong. Sometimes, by chance alone, a sample is not representative of the population. Thus the results in the sample do not reflect reality in the population, and the random error leads to an erroneous inference. A type I error (false-positive) occurs if an investigator rejects a null hypothesis that is actually true in the population; a type II error (false-negative) occurs if the investigator fails to reject a null hypothesis that is actually false in the population. Although type I and type II errors can never be avoided entirely, the investigator can reduce their likelihood by increasing the sample size (the larger the sample, the lower the likelihood that it will differ substantially from the population).

False-positive and false-negative results can also occur because of bias (observer, instrument, recall, etc.). (Errors due to bias, however, are not referred to as type I and type II errors.) Such errors are troublesome, since they may be difficult to detect and cannot usually be quantified.

EFFECT SIZE

The likelihood that a study will be able to detect an association between a predictor variable and an outcome variable depends, of course, on the actual magnitude of that association in the target population. If it is large (such as 90% increase in the incidence of psychosis in people who are on Tamiflu), it will be easy to detect in the sample. Conversely, if the size of the association is small (such as 2% increase in psychosis), it will be difficult to detect in the sample. Unfortunately, the investigator often does not know the actual magnitude of the association — one of the purposes of the study is to estimate it. Instead, the investigator must choose the size of the association that he would like to be able to detect in the sample. This quantity is known as the effect size. Selecting an appropriate effect size is the most difficult aspect of sample size planning. Sometimes, the investigator can use data from other studies or pilot tests to make an informed guess about a reasonable effect size. When there are no data with which to estimate it, he can choose the smallest effect size that would be clinically meaningful, for example, a 10% increase in the incidence of psychosis. Of course, from the public health point of view, even a 1% increase in psychosis incidence would be important. Thus the choice of the effect size is always somewhat arbitrary, and considerations of feasibility are often paramount. When the number of available subjects is limited, the investigator may have to work backward to determine whether the effect size that his study will be able to detect with that number of subjects is reasonable.
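Working backward from a fixed number of subjects can be sketched numerically. The snippet below is a simplified illustration, not the authors' method: it assumes a two-sided one-sample z-test with known standard deviation, expresses the effect size in standard-deviation units, and relies on the normal approximation. The function name `detectable_effect` and the default error rates (a 5% false-positive rate and a 20% false-negative rate) are assumptions chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist

def detectable_effect(n, alpha=0.05, beta=0.20):
    """Smallest standardized effect detectable with n subjects.

    Assumes a two-sided one-sample z-test (normal approximation);
    alpha is the false-positive rate, beta the false-negative rate.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = z.inv_cdf(1 - beta)        # quantile matching the desired power
    return (z_alpha + z_beta) / sqrt(n)

# Hypothetical sample sizes: more subjects allow smaller effects to be detected.
for n in (25, 100, 400):
    print(n, round(detectable_effect(n), 3))
```

Under these assumptions, quadrupling the number of subjects halves the detectable effect size, which is why small effects demand large studies.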

α, β, AND POWER

After a study is completed, the investigator uses statistical tests to try to reject the null hypothesis in favor of its alternative (much in the same way that a prosecuting attorney tries to convince a judge to reject innocence in favor of guilt). Depending on whether the null hypothesis is true or false in the target population, and assuming that the study is free of bias, 4 situations are possible, as shown in Table 2 below. In 2 of these, the findings in the sample and reality in the population are concordant, and the investigator’s inference will be correct. In the other 2 situations, either a type I (α) or a type II (β) error has been made, and the inference will be incorrect.

Truth in the population versus the results in the study sample: The four possibilities

| Results in the study sample | Association present in the population | No association in the population |
|---|---|---|
| Reject null hypothesis | Correct | Type I error |
| Fail to reject null hypothesis | Type II error | Correct |

The investigator establishes the maximum chance of making type I and type II errors in advance of the study. The probability of committing a type I error (rejecting the null hypothesis when it is actually true) is called α (alpha); another name for this is the level of statistical significance.

If a study of Tamiflu and psychosis is designed with α = 0.05, for example, then the investigator has set 5% as the maximum chance of incorrectly rejecting the null hypothesis (and erroneously inferring that use of Tamiflu and psychosis incidence are associated in the population). This is the level of reasonable doubt that the investigator is willing to accept when he uses statistical tests to analyze the data after the study is completed.
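The meaning of α as a long-run false-positive rate can be checked with a small simulation. The sketch below is illustrative only and does not model the Tamiflu study: it assumes a one-sample two-sided z-test with known standard deviation, draws every sample from a population in which the null hypothesis is true, and counts how often the test rejects anyway. The function names, sample size, trial count, and seed are all hypothetical choices.

```python
import random
from statistics import NormalDist, mean

def z_test_p_value(sample, mu0=0.0, sigma=1.0):
    """Two-sided p-value for a one-sample z-test with known sigma."""
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / n ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))

def type_i_error_rate(alpha=0.05, n=30, trials=5000, seed=0):
    """Fraction of simulated studies that reject a null hypothesis that is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # The null hypothesis holds by construction: the true mean equals mu0 = 0.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p_value(sample) < alpha:
            rejections += 1
    return rejections / trials

rate = type_i_error_rate()
print(f"Observed false-positive rate: {rate:.3f}")
```

With enough simulated studies, the observed rejection rate should settle near the chosen α, since every rejection here is a type I error.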

The probability of making a type II error (failing to reject the null hypothesis when it is actually false) is called β (beta). The quantity (1 - β) is called power: the probability of observing an effect in the sample if one of a specified effect size or greater exists in the population.

If β is set at 0.10, then the investigator has decided that he is willing to accept a 10% chance of missing an association of a given effect size between Tamiflu and psychosis. This represents a power of 0.90, i.e., a 90% chance of finding an association of that size. For example, suppose that there really would be a 30% increase in psychosis incidence if the entire population took Tamiflu. Then 90 times out of 100, the investigator would observe an effect of that size or larger in his study. This does not mean, however, that the investigator will be absolutely unable to detect a smaller effect; just that he will have less than 90% likelihood of doing so.
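The relationship between effect size, sample size, and power can be illustrated with a short calculation. This is a sketch under stated assumptions rather than the procedure used in any particular study: it assumes a two-sided one-sample z-test with known standard deviation and an effect expressed in standard-deviation units. The function name `power_z_test` and the example inputs are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def power_z_test(effect, n, alpha=0.05):
    """Power (1 - beta) of a two-sided one-sample z-test.

    effect is the true effect in standard-deviation units;
    n is the number of subjects.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = effect * sqrt(n)  # how far the true effect moves the test statistic
    # Probability that the statistic lands in either rejection region.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)

# Hypothetical inputs: the same study has less power against a smaller effect.
for effect in (0.5, 0.3, 0.1):
    print(effect, round(power_z_test(effect, n=43), 3))
```

When the true effect is zero, the function returns α itself, because any rejection is then a type I error.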

Ideally alpha and beta errors would be set at zero, eliminating the possibility of false-positive and false-negative results. In practice they are made as small as possible. Reducing them, however, usually requires increasing the sample size. Sample size planning aims at choosing a sufficient number of subjects to keep alpha and beta at acceptably low levels without making the study unnecessarily expensive or difficult.

Many studies set alpha at 0.05 and beta at 0.20 (a power of 0.80). These are somewhat arbitrary values, and others are sometimes used; the conventional range for alpha is between 0.01 and 0.10, and for beta, between 0.05 and 0.20. In general the investigator should choose a low value of alpha when the research question makes it particularly important to avoid a type I (false-positive) error, and he should choose a low value of beta when it is especially important to avoid a type II (false-negative) error.
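To see how the choice of alpha and beta drives the number of subjects, the following sketch applies the standard normal-approximation formula n = ((z₁₋α/₂ + z₁₋β) / d)². It assumes a two-sided one-sample z-test and a standardized effect size d; real study designs (for example, comparisons of two proportions) use different formulas. The function name `required_n` and the effect size of 0.5 are illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist

def required_n(effect, alpha=0.05, beta=0.20):
    """Approximate subjects needed for a two-sided one-sample z-test.

    effect is the standardized effect size to detect (assumed, not estimated).
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(1 - beta)
    return ceil(((z_alpha + z_beta) / effect) ** 2)

# Conventional choices versus stricter ones, for a hypothetical effect of 0.5 SD.
print(required_n(0.5, alpha=0.05, beta=0.20))
print(required_n(0.5, alpha=0.05, beta=0.10))
print(required_n(0.5, alpha=0.01, beta=0.20))
```

Tightening either error rate raises the required sample size, which is the cost the investigator weighs when choosing alpha and beta.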

The null hypothesis acts like a punching bag: It is assumed to be true in order to shadowbox it into false with a statistical test. When the data are analyzed, such tests determine the P value, the probability of obtaining results at least as extreme as those observed by chance if the null hypothesis is true. The null hypothesis is rejected in favor of the alternative hypothesis if the P value is less than alpha, the predetermined level of statistical significance (Daniel, 2000). “Nonsignificant” results (those with a P value greater than alpha) do not imply that there is no association in the population; they only mean that the association observed in the sample is small compared with what could have occurred by chance alone. For example, an investigator might find that men with a family history of mental illness were twice as likely to develop schizophrenia as those with no family history, but with a P value of 0.09. This means that even if family history and schizophrenia were not associated in the population, there was a 9% chance of finding an association at least this strong due to random error in the sample. If the investigator had set the significance level at 0.05, he would have to conclude that the association in the sample was “not statistically significant.” It might be tempting for the investigator to change his mind about the level of statistical significance ex post facto and report that the results “showed statistical significance at P < .10”. A better choice would be to report that the “results, although suggestive of an association, did not achieve statistical significance (P = .09)”. This solution acknowledges that statistical significance is not an “all or none” situation.

Hypothesis testing is the sheet anchor of empirical research and of the rapidly emerging practice of evidence-based medicine. However, empirical research and, ipso facto, hypothesis testing have their limits. The empirical approach to research cannot eliminate uncertainty completely. At best, it can quantify uncertainty. This uncertainty can be of 2 types: type I error (falsely rejecting a null hypothesis) and type II error (falsely accepting a null hypothesis). The acceptable magnitudes of type I and type II errors are set in advance and are important for sample size calculations. Another important point to remember is that we cannot ‘prove’ or ‘disprove’ anything by hypothesis testing and statistical tests. We can only knock down or reject the null hypothesis and by default accept the alternative hypothesis. If we fail to reject the null hypothesis, we accept it by default.

Source of Support: Nil

Conflict of Interest: None declared.

- Daniel W. W. In: Biostatistics. 7th ed. New York: John Wiley and Sons, Inc; 2002. Hypothesis testing; pp. 204–294.

- Hulley S. B, Cummings S. R, Browner W. S, Grady D, Hearst N, Newman T. B. 2nd ed. Philadelphia: Lippincott Williams and Wilkins; 2001. Getting ready to estimate sample size: Hypothesis and underlying principles In: Designing Clinical Research-An epidemiologic approach; pp. 51–63.

- Medawar P. B. Philadelphia: American Philosophical Society; 1969. Induction and intuition in scientific thought.

- Popper K. Unended Quest. An Intellectual Autobiography. Fontana Collins; p. 42.

- Wulff H. R, Pedersen S. A, Rosenberg R. Oxford: Blackwell Scientific Publications; Empirism and Realism: A philosophical problem. In: Philosophy of Medicine.

6.1 - Type I and Type II Errors

When conducting a hypothesis test there are two possible decisions: reject the null hypothesis or fail to reject the null hypothesis. You should remember though, hypothesis testing uses data from a sample to make an inference about a population. When conducting a hypothesis test we do not know the population parameters. In most cases, we don't know if our inference is correct or incorrect.

When we reject the null hypothesis there are two possibilities. There could really be a difference in the population, in which case we made a correct decision. Or, it is possible that there is not a difference in the population (i.e., \(H_0\) is true) but our sample was different from the hypothesized value due to random sampling variation. In that case we made an error. This is known as a Type I error.

When we fail to reject the null hypothesis there are also two possibilities. If the null hypothesis is really true, and there is not a difference in the population, then we made the correct decision. If there is a difference in the population, and we failed to reject it, then we made a Type II error.

Type I error: Rejecting \(H_0\) when \(H_0\) is really true. Its probability is denoted by \(\alpha\) ("alpha") and is commonly set at .05

\(\alpha=P(\text{Type I error})\)

Type II error: Failing to reject \(H_0\) when \(H_0\) is really false. Its probability is denoted by \(\beta\) ("beta")

\(\beta=P(\text{Type II error})\)

| Decision | Reality: \(H_0\) is true | Reality: \(H_0\) is false |
|---|---|---|
| Reject \(H_0\) (conclude \(H_a\)) | Type I error | Correct decision |
| Fail to reject \(H_0\) | Correct decision | Type II error |
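The four cells of the decision table can be written as a small lookup. This is only an illustrative restatement of the table; the function name `classify_outcome` and its parameter names are hypothetical. In practice the truth about \(H_0\) is unknown, so the function describes what kind of outcome occurred rather than something a researcher can compute from data.

```python
def classify_outcome(reject_h0: bool, h0_is_true: bool) -> str:
    """Name the outcome of a test given the decision and the (unknown) reality."""
    if reject_h0 and h0_is_true:
        return "Type I error"       # rejected a true null hypothesis
    if not reject_h0 and not h0_is_true:
        return "Type II error"      # failed to reject a false null hypothesis
    return "Correct decision"

# Enumerate all four combinations of decision and reality.
for reject in (True, False):
    for truth in (True, False):
        print(reject, truth, classify_outcome(reject, truth))
```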

Example: Trial

A man goes to trial where he is being tried for the murder of his wife.

We can put it in a hypothesis testing framework. The hypotheses being tested are:

- \(H_0\) : Not Guilty

- \(H_a\) : Guilty

Type I error is committed if we reject \(H_0\) when it is true. In other words, the man did not kill his wife but was found guilty and is punished for a crime he did not really commit.

Type II error is committed if we fail to reject \(H_0\) when it is false. In other words, if the man did kill his wife but was found not guilty and was not punished.

Example: Culinary Arts Study

A group of culinary arts students is comparing two methods for preparing asparagus: traditional steaming and a new frying method. They want to know if patrons of their school restaurant prefer their new frying method over the traditional steaming method. A sample of patrons are given asparagus prepared using each method and asked to select their preference. A statistical analysis is performed to determine if more than 50% of participants prefer the new frying method:

- \(H_{0}: p = .50\)

- \(H_{a}: p>.50\)

Type I error occurs if they reject the null hypothesis and conclude that their new frying method is preferred when in reality it is not. This may occur if, by random sampling error, they happen to get a sample that prefers the new frying method more than the overall population does. If this does occur, the consequence is that the students will have an incorrect belief that their new method of frying asparagus is superior to the traditional method of steaming.

Type II error occurs if they fail to reject the null hypothesis and conclude that their new method is not superior when in reality it is. If this does occur, the consequence is that the students will have an incorrect belief that their new method is not superior to the traditional method when in reality it is.
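The test of \(H_{0}: p = .50\) against \(H_{a}: p>.50\) can be sketched with an exact one-sided binomial calculation. The lesson does not report any data or state which test was used, so the counts below (60 of 100 patrons preferring the fried asparagus) are invented for illustration, and the function name `binomial_p_value_greater` is hypothetical.

```python
from math import comb

def binomial_p_value_greater(successes, n, p0=0.5):
    """Exact one-sided p-value: P(X >= successes) when X ~ Binomial(n, p0)."""
    return sum(
        comb(n, k) * p0 ** k * (1 - p0) ** (n - k)
        for k in range(successes, n + 1)
    )

# Hypothetical survey result: 60 of 100 patrons prefer the new frying method.
alpha = 0.05
p_value = binomial_p_value_greater(60, 100)
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(f"p-value = {p_value:.4f}, decision: {decision}")
```

If the true preference in the population were exactly 50%, a rejection here would be a type I error; if the true preference were above 50% and the students failed to reject, that would be a type II error.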

Type II Error: Definition, Example, vs. Type I Error

Adam Hayes, Ph.D., CFA, is a financial writer with 15+ years Wall Street experience as a derivatives trader. Besides his extensive derivative trading expertise, Adam is an expert in economics and behavioral finance. Adam received his master's in economics from The New School for Social Research and his Ph.D. from the University of Wisconsin-Madison in sociology. He is a CFA charterholder as well as holding FINRA Series 7, 55 & 63 licenses. He currently researches and teaches economic sociology and the social studies of finance at the Hebrew University in Jerusalem.

Michela Buttignol / Investopedia