What is The Null Hypothesis & When Do You Reject The Null Hypothesis

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.


Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A null hypothesis is a statistical concept suggesting no significant difference or relationship between measured variables. It’s the default assumption unless empirical evidence proves otherwise.

The null hypothesis states no relationship exists between the two variables being studied (i.e., one variable does not affect the other).

The null hypothesis is the statement that a researcher or an investigator wants to disprove.

Testing the null hypothesis can tell you whether your results are due to the effects of manipulating the independent variable or due to random chance.

How to Write a Null Hypothesis

Null hypotheses (H0) start as research questions that the investigator rephrases as statements indicating no effect or relationship between the independent and dependent variables.

It is a default position that your research aims to challenge or confirm.

For example, if studying the impact of exercise on weight loss, your null hypothesis might be:

There is no significant difference in weight loss between individuals who exercise daily and those who do not.

Examples of Null Hypotheses

| Research Question | Null Hypothesis |
|---|---|
| Do teenagers use cell phones more than adults? | Teenagers and adults use cell phones the same amount. |
| Do tomato plants exhibit a higher rate of growth when planted in compost rather than in soil? | Tomato plants show no difference in growth rates when planted in compost rather than soil. |
| Does daily meditation decrease the incidence of depression? | Daily meditation does not decrease the incidence of depression. |
| Does daily exercise increase test performance? | There is no relationship between daily exercise time and test performance. |
| Does the new vaccine prevent infections? | The vaccine does not affect the infection rate. |
| Does flossing your teeth affect the number of cavities? | Flossing your teeth has no effect on the number of cavities. |

When Do We Reject The Null Hypothesis?

We reject the null hypothesis when the data provide strong enough evidence to conclude that it is likely incorrect. This typically occurs when the p-value (the probability of observing data at least as extreme as the sample data, given that the null hypothesis is true) is below a predetermined significance level.

If the collected data are inconsistent with what the null hypothesis predicts, a researcher can conclude that the data provide sufficient evidence against the null hypothesis, and thus the null hypothesis is rejected.

Rejecting the null hypothesis means that the data support a relationship between the variables and that the effect is statistically significant (p < 0.05).

If the data collected from the random sample are not statistically significant, the researchers fail to reject the null hypothesis: the data do not provide enough evidence to conclude that a relationship exists between the variables.

You need to perform a statistical test on your data in order to evaluate how consistent it is with the null hypothesis. A p-value is one statistical measurement used to validate a hypothesis against observed data.

Calculating the p-value is a critical part of null-hypothesis significance testing because it quantifies how strongly the sample data contradicts the null hypothesis.

The level of statistical significance is often expressed as a p-value between 0 and 1. The smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

Usually, a researcher uses a significance level of 0.05 or 0.01 (corresponding to a confidence level of 95% or 99%) as a general guideline to decide whether to reject or retain the null hypothesis.

When your p-value is less than or equal to your significance level, you reject the null hypothesis.

In other words, smaller p-values are taken as stronger evidence against the null hypothesis. Conversely, when the p-value is greater than your significance level, you fail to reject the null hypothesis.

In this case, the sample data provide insufficient evidence to conclude that the effect exists in the population.
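The decision rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `decide` and the default significance level of 0.05 are assumptions made for the example, not part of any statistics library.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the test decision for a p-value at significance level alpha."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    # Reject when the p-value is less than or equal to the significance level.
    if p_value <= alpha:
        return "reject H0"
    # Otherwise the evidence is insufficient; H0 is never "accepted".
    return "fail to reject H0"


print(decide(0.03))              # p-value below the default alpha of 0.05
print(decide(0.03, alpha=0.01))  # same p-value, stricter alpha
print(decide(0.20))              # p-value above alpha
```

Note that the same p-value can lead to different decisions under different significance levels, which is why the level should be chosen before looking at the data.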

Because you can never know with complete certainty whether there is an effect in the population, your inferences about a population will sometimes be incorrect.

When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s called a type II error.
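A small simulation can make the two error types concrete. The sketch below assumes a one-sample z-test with a known standard deviation, made-up population values, and a fixed random seed; the helper names `z_test_p_value` and `rejection_rate` are hypothetical and exist only for this example.

```python
import random
from statistics import NormalDist, mean


def z_test_p_value(sample, mu0, sigma):
    """Two-sided p-value for a one-sample z-test with known sigma."""
    z = (mean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def rejection_rate(true_mean, mu0=0.0, sigma=1.0, n=30,
                   alpha=0.05, trials=2000, seed=1):
    """Fraction of simulated studies in which H0: mu = mu0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        if z_test_p_value(sample, mu0, sigma) <= alpha:
            rejections += 1
    return rejections / trials


# H0 is true here, so every rejection is a type I error.
type_i_rate = rejection_rate(true_mean=0.0)
# H0 is false here, so every failure to reject is a type II error.
power = rejection_rate(true_mean=0.5)

print(f"estimated type I error rate:  {type_i_rate:.3f}")
print(f"estimated type II error rate: {1 - power:.3f}")
```

When the null hypothesis is true, the estimated type I error rate should land close to the chosen significance level. The type II error rate depends on the true effect size and the sample size.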

Why Do We Never Accept The Null Hypothesis?

The reason we do not say “accept the null” is because we are always assuming the null hypothesis is true and then conducting a study to see if there is evidence against it. And, even if we don’t find evidence against it, a null hypothesis is not accepted.

A lack of evidence only means that you haven’t proven that something exists. It does not prove that something doesn’t exist.

It is risky to conclude that the null hypothesis is true merely because we did not find evidence to reject it. It is always possible that researchers elsewhere have disproved the null hypothesis, so we cannot accept it as true, but instead, we state that we failed to reject the null.

One can either reject the null hypothesis, or fail to reject it, but can never accept it.

Why Do We Use The Null Hypothesis?

We can never prove with 100% certainty that a hypothesis is true; we can only collect evidence that supports a theory. However, testing a hypothesis can set the stage for rejecting or failing to reject this hypothesis within a certain confidence level.

The null hypothesis is useful because it can tell us whether the results of our study are due to random chance or the manipulation of a variable (with a certain level of confidence).

A null hypothesis is rejected if the measured data would be very unlikely to occur if it were true, and it is retained (not rejected) if the observed outcome is consistent with the position held by the null hypothesis.

Rejecting the null hypothesis sets the stage for further experimentation to see if a relationship between two variables exists.

Hypothesis testing is a critical part of the scientific method as it helps decide whether the results of a research study support a particular theory about a given population. Hypothesis testing is a systematic way of backing up researchers’ predictions with statistical analysis.

It helps provide sufficient statistical evidence that either favors or rejects a certain hypothesis about the population parameter.

Purpose of a Null Hypothesis

- The primary purpose of the null hypothesis is to disprove an assumption.

- Whether or not it is rejected, the null hypothesis can help further progress a theory in many scientific cases.

- A null hypothesis can be used to ascertain how consistent the outcomes of multiple studies are.

Do you always need both a Null Hypothesis and an Alternative Hypothesis?

The null (H0) and alternative (Ha or H1) hypotheses are two competing claims that describe the effect of the independent variable on the dependent variable. They are mutually exclusive, which means that only one of the two hypotheses can be true.

While the null hypothesis states that there is no effect in the population, an alternative hypothesis states that there is an effect or relationship between the two variables.

The goal of hypothesis testing is to make inferences about a population based on a sample. In order to undertake hypothesis testing, you must express your research hypothesis as a null and alternative hypothesis. Both hypotheses are required to cover every possible outcome of the study.

What is the difference between a null hypothesis and an alternative hypothesis?

The alternative hypothesis is the complement to the null hypothesis. The null hypothesis states that there is no effect or no relationship between variables, while the alternative hypothesis claims that there is an effect or relationship in the population.

It is the claim that you expect or hope will be true. The null hypothesis and the alternative hypothesis are always mutually exclusive, meaning that only one can be true at a time.

What are some problems with the null hypothesis?

One major problem with the null hypothesis is that researchers typically assume that failing to reject the null is a failure of the experiment. However, rejecting or failing to reject a hypothesis is in either case an informative result. Even if the null is not refuted, the researchers will still learn something new.

Why can a null hypothesis not be accepted?

We can either reject or fail to reject a null hypothesis, but never accept it. If your test fails to detect an effect, this is not proof that the effect doesn’t exist. It just means that your sample did not have enough evidence to conclude that it exists.

We can’t accept a null hypothesis because a lack of evidence for an effect does not prove that the effect does not exist. Instead, we fail to reject it.

Failing to reject the null indicates that the sample did not provide sufficient evidence to conclude that an effect exists.

If the p-value is greater than the significance level, then you fail to reject the null hypothesis.

Is a null hypothesis directional or non-directional?

A hypothesis test can contain either a directional or a non-directional alternative hypothesis. A directional hypothesis is one that contains the less than (“<”) or greater than (“>”) sign.

A nondirectional hypothesis contains the not equal sign (“≠”). However, a null hypothesis is neither directional nor non-directional.

A null hypothesis is a prediction that there will be no change, relationship, or difference between two variables.

The directional hypothesis or nondirectional hypothesis would then be considered alternative hypotheses to the null hypothesis.



Hypothesis Testing with One Sample

Null and Alternative Hypotheses

OpenStaxCollege


The actual test begins by considering two hypotheses. They are called the null hypothesis and the alternative hypothesis. These hypotheses contain opposing viewpoints.

H0: The null hypothesis: It is a statement about the population that either is believed to be true or is used to put forth an argument unless it can be shown to be incorrect beyond a reasonable doubt.

Ha: The alternative hypothesis: It is a claim about the population that is contradictory to H0 and what we conclude when we reject H0.

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options for a decision. They are “reject H0” if the sample information favors the alternative hypothesis or “do not reject H0” or “decline to reject H0” if the sample information is insufficient to reject the null hypothesis.

Mathematical Symbols Used in H0 and Ha:

| H0 | Ha |
|---|---|
| equal (=) | not equal (≠), greater than (>), or less than (<) |
| greater than or equal to (≥) | less than (<) |
| less than or equal to (≤) | more than (>) |

H0 always has a symbol with an equal in it. Ha never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers (including one of the co-authors in research work) use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.
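The pairing of symbols can be written down as a small lookup table. The sketch below is an illustration only: the dictionary `ALTERNATIVE_SYMBOLS` and the function `state_hypotheses` are hypothetical names invented for this example.

```python
# Which alternative-hypothesis symbols may pair with each null-hypothesis symbol.
ALTERNATIVE_SYMBOLS = {
    "=": ("≠", ">", "<"),
    "≥": ("<",),
    "≤": (">",),
}


def state_hypotheses(parameter: str, null_symbol: str, value: float,
                     alt_symbol: str) -> tuple[str, str]:
    """Return (H0, Ha) as strings, checking that the symbols are compatible."""
    allowed = ALTERNATIVE_SYMBOLS.get(null_symbol)
    if allowed is None:
        # H0 always carries a symbol with an equal in it.
        raise ValueError("H0 must use =, ≥, or ≤")
    if alt_symbol not in allowed:
        raise ValueError(f"Ha cannot use {alt_symbol} when H0 uses {null_symbol}")
    return (f"H0: {parameter} {null_symbol} {value}",
            f"Ha: {parameter} {alt_symbol} {value}")


print(state_hypotheses("μ", "≥", 45, "<"))
print(state_hypotheses("p", "=", 0.40, ">"))
```

The second call reflects the practice noted above of writing = in the null hypothesis even when the alternative is one-sided.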

H0: No more than 30% of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

Ha: More than 30% of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25%. State the null and alternative hypotheses.

H0: The drug reduces cholesterol by 25%. p = 0.25

Ha: The drug does not reduce cholesterol by 25%. p ≠ 0.25

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are:

H0: μ = 2.0

Ha: μ ≠ 2.0

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H0: μ = 66

- Ha: μ ≠ 66

We want to test if college students take less than five years to graduate from college, on the average. The null and alternative hypotheses are:

H0: μ ≥ 5

Ha: μ < 5

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H0: μ ≥ 45

- Ha: μ < 45

In an issue of U.S. News and World Report, an article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third pass. The same article stated that 6.6% of U.S. students take advanced placement exams and 4.4% pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6%. State the null and alternative hypotheses.

H0: p ≤ 0.066

Ha: p > 0.066

On a state driver’s test, about 40% pass the test on the first try. We want to test if more than 40% pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H0: p = 0.40

- Ha: p > 0.40


Bring to class a newspaper, some news magazines, and some Internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.

Chapter Review

In a hypothesis test , sample data is evaluated in order to arrive at a decision about some type of claim. If certain conditions about the sample are satisfied, then the claim can be evaluated for a population. In a hypothesis test, we:

Formula Review

H0 and Ha are contradictory.

| H0 has: | equal (=) | greater than or equal to (≥) | less than or equal to (≤) |
|---|---|---|---|
| Ha has: | not equal (≠), greater than (>), or less than (<) | less than (<) | greater than (>) |

If α ≤ p-value, then do not reject H0.

If α > p-value, then reject H0.

α is preconceived. Its value is set before the hypothesis test starts. The p-value is calculated from the data.

You are testing that the mean speed of your cable Internet connection is more than three Megabits per second. What is the random variable? Describe in words.

The random variable is the mean Internet speed in Megabits per second.

You are testing that the mean speed of your cable Internet connection is more than three Megabits per second. State the null and alternative hypotheses.

The American family has an average of two children. What is the random variable? Describe in words.

The random variable is the mean number of children an American family has.

The mean entry level salary of an employee at a company is $58,000. You believe it is higher for IT professionals in the company. State the null and alternative hypotheses.

A sociologist claims the probability that a person picked at random in Times Square in New York City is visiting the area is 0.83. You want to test to see if the proportion is actually less. What is the random variable? Describe in words.

The random variable is the proportion of people picked at random in Times Square visiting the city.

A sociologist claims the probability that a person picked at random in Times Square in New York City is visiting the area is 0.83. You want to test to see if the claim is correct. State the null and alternative hypotheses.

In a population of fish, approximately 42% are female. A test is conducted to see if, in fact, the proportion is less. State the null and alternative hypotheses.

Suppose that a recent article stated that the mean time spent in jail by a first–time convicted burglar is 2.5 years. A study was then done to see if the mean time has increased in the new century. A random sample of 26 first-time convicted burglars in a recent year was picked. The mean length of time in jail from the survey was 3 years with a standard deviation of 1.8 years. Suppose that it is somehow known that the population standard deviation is 1.5. If you were conducting a hypothesis test to determine if the mean length of jail time has increased, what would the null and alternative hypotheses be? The distribution of the population is normal.
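For a problem like the one above, where the population is normal and the population standard deviation is treated as known, the test statistic can be sketched with a one-sample z-test. The snippet is only an illustration. It assumes the hypotheses H0: μ = 2.5 and Ha: μ > 2.5 (the mean time has increased), and the variable names are made up for this example.

```python
from math import sqrt
from statistics import NormalDist

mu0 = 2.5          # mean jail time stated in the article (years)
sample_mean = 3.0  # mean from the sample of first-time convicted burglars
sigma = 1.5        # population standard deviation, assumed known
n = 26             # sample size

# Standardize the distance between the sample mean and the hypothesized mean.
z = (sample_mean - mu0) / (sigma / sqrt(n))

# Upper-tail p-value, because Ha says the mean has increased.
p_value = 1 - NormalDist().cdf(z)

print(f"z = {z:.2f}, p-value = {p_value:.3f}")
```

Whether this p-value leads to rejecting H0 depends on the significance level chosen before the test.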

A random survey of 75 death row inmates revealed that the mean length of time on death row is 17.4 years with a standard deviation of 6.3 years. If you were conducting a hypothesis test to determine if the population mean time on death row could likely be 15 years, what would the null and alternative hypotheses be?

- H0: __________

- Ha: __________

- H0: μ = 15

- Ha: μ ≠ 15

The National Institute of Mental Health published an article stating that in any one-year period, approximately 9.5 percent of American adults suffer from depression or a depressive illness. Suppose that in a survey of 100 people in a certain town, seven of them suffered from depression or a depressive illness. If you were conducting a hypothesis test to determine if the true proportion of people in that town suffering from depression or a depressive illness is lower than the percent in the general adult American population, what would the null and alternative hypotheses be?

Some of the following statements refer to the null hypothesis, some to the alternate hypothesis.

State the null hypothesis, H0, and the alternative hypothesis, Ha, in terms of the appropriate parameter (μ or p).

- The mean number of years Americans work before retiring is 34.

- At most 60% of Americans vote in presidential elections.

- The mean starting salary for San Jose State University graduates is at least $100,000 per year.

- Twenty-nine percent of high school seniors get drunk each month.

- Fewer than 5% of adults ride the bus to work in Los Angeles.

- The mean number of cars a person owns in her lifetime is not more than ten.

- About half of Americans prefer to live away from cities, given the choice.

- Europeans have a mean paid vacation each year of six weeks.

- The chance of developing breast cancer is under 11% for women.

- Private universities’ mean tuition cost is more than $20,000 per year.

- H0: μ = 34; Ha: μ ≠ 34

- H0: p ≤ 0.60; Ha: p > 0.60

- H0: μ ≥ 100,000; Ha: μ < 100,000

- H0: p = 0.29; Ha: p ≠ 0.29

- H0: p = 0.05; Ha: p < 0.05

- H0: μ ≤ 10; Ha: μ > 10

- H0: p = 0.50; Ha: p ≠ 0.50

- H0: μ = 6; Ha: μ ≠ 6

- H0: p ≥ 0.11; Ha: p < 0.11

- H0: μ ≤ 20,000; Ha: μ > 20,000

Over the past few decades, public health officials have examined the link between weight concerns and teen girls’ smoking. Researchers surveyed a group of 273 randomly selected teen girls living in Massachusetts (between 12 and 15 years old). After four years the girls were surveyed again. Sixty-three said they smoked to stay thin. Is there good evidence that more than thirty percent of the teen girls smoke to stay thin? The alternative hypothesis is:

- p < 0.30

- p > 0.30

A statistics instructor believes that fewer than 20% of Evergreen Valley College (EVC) students attended the opening night midnight showing of the latest Harry Potter movie. She surveys 84 of her students and finds that 11 attended the midnight showing. An appropriate alternative hypothesis is:

- p > 0.20

- p < 0.20

Previously, an organization reported that teenagers spent 4.5 hours per week, on average, on the phone. The organization thinks that, currently, the mean is higher. Fifteen randomly chosen teenagers were asked how many hours per week they spend on the phone. The sample mean was 4.75 hours with a sample standard deviation of 2.0. Conduct a hypothesis test. The null and alternative hypotheses are:

- H0: \(\overline{x}\) = 4.5, Ha: \(\overline{x}\) > 4.5

- H0: μ ≥ 4.5, Ha: μ < 4.5

- H0: μ = 4.75, Ha: μ > 4.75

- H0: μ = 4.5, Ha: μ > 4.5

Data from the National Institute of Mental Health. Available online at http://www.nimh.nih.gov/publicat/depression.cfm.

Null and Alternative Hypotheses Copyright © 2013 by OpenStaxCollege is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.

13.1 Understanding Null Hypothesis Testing

Learning Objectives

- Explain the purpose of null hypothesis testing, including the role of sampling error.

- Describe the basic logic of null hypothesis testing.

- Describe the role of relationship strength and sample size in determining statistical significance and make reasonable judgments about statistical significance based on these two factors.

The Purpose of Null Hypothesis Testing

As we have seen, psychological research typically involves measuring one or more variables in a sample and computing descriptive statistics for that sample. In general, however, the researcher’s goal is not to draw conclusions about that sample but to draw conclusions about the population that the sample was selected from. Thus researchers must use sample statistics to draw conclusions about the corresponding values in the population. These corresponding values in the population are called parameters. Imagine, for example, that a researcher measures the number of depressive symptoms exhibited by each of 50 adults with clinical depression and computes the mean number of symptoms. The researcher probably wants to use this sample statistic (the mean number of symptoms for the sample) to draw conclusions about the corresponding population parameter (the mean number of symptoms for adults with clinical depression).

Unfortunately, sample statistics are not perfect estimates of their corresponding population parameters. This is because there is a certain amount of random variability in any statistic from sample to sample. The mean number of depressive symptoms might be 8.73 in one sample of adults with clinical depression, 6.45 in a second sample, and 9.44 in a third, even though these samples are selected randomly from the same population. Similarly, the correlation (Pearson’s r) between two variables might be +.24 in one sample, −.04 in a second sample, and +.15 in a third, again even though these samples are selected randomly from the same population. This random variability in a statistic from sample to sample is called sampling error. (Note that the term error here refers to random variability and does not imply that anyone has made a mistake. No one “commits a sampling error.”)
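Sampling error is easy to see in a short simulation. The sketch below builds a hypothetical population of symptom counts (the mean of 8.0 and standard deviation of 3.0 are made-up values for illustration) and draws three random samples of 50 from it.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the run is repeatable

# Hypothetical population of symptom scores (illustrative values only).
population = [rng.gauss(8.0, 3.0) for _ in range(100_000)]

# Draw three random samples of 50 from the same population.
sample_means = [mean(rng.sample(population, 50)) for _ in range(3)]

print("population mean:", round(mean(population), 2))
print("sample means:   ", [round(m, 2) for m in sample_means])
```

The three sample means differ from one another and from the population mean, even though nothing about the population changed between draws.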

One implication of this is that when there is a statistical relationship in a sample, it is not always clear that there is a statistical relationship in the population. A small difference between two group means in a sample might indicate that there is a small difference between the two group means in the population. But it could also be that there is no difference between the means in the population and that the difference in the sample is just a matter of sampling error. Similarly, a Pearson’s r value of −.29 in a sample might mean that there is a negative relationship in the population. But it could also be that there is no relationship in the population and that the relationship in the sample is just a matter of sampling error.

In fact, any statistical relationship in a sample can be interpreted in two ways:

- There is a relationship in the population, and the relationship in the sample reflects this.

- There is no relationship in the population, and the relationship in the sample reflects only sampling error.

The purpose of null hypothesis testing is simply to help researchers decide between these two interpretations.

The Logic of Null Hypothesis Testing

Null hypothesis testing is a formal approach to deciding between two interpretations of a statistical relationship in a sample. One interpretation is called the null hypothesis (often symbolized H0 and read as “H-naught”). This is the idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error. Informally, the null hypothesis is that the sample relationship “occurred by chance.” The other interpretation is called the alternative hypothesis (often symbolized as H1). This is the idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Again, every statistical relationship in a sample can be interpreted in either of these two ways: It might have occurred by chance, or it might reflect a relationship in the population. So researchers need a way to decide between them. Although there are many specific null hypothesis testing techniques, they are all based on the same general logic. The steps are as follows:

- Assume for the moment that the null hypothesis is true. There is no relationship between the variables in the population.

- Determine how likely the sample relationship would be if the null hypothesis were true.

- If the sample relationship would be extremely unlikely, then reject the null hypothesis in favor of the alternative hypothesis. If it would not be extremely unlikely, then retain the null hypothesis.
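One way to see these three steps in action is a permutation test, which is just one of many specific techniques and is used here only as an illustration. The sketch assumes two small groups of made-up scores, and the function name `permutation_p_value` is hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, shuffles=5000, seed=0):
    """Two-sided permutation p-value for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    as_extreme = 0
    for _ in range(shuffles):
        # Step 1: assume H0 is true, so group labels are arbitrary; shuffle them.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        # Step 2: count shuffles at least as extreme as the observed difference.
        if diff >= observed:
            as_extreme += 1
    return as_extreme / shuffles


# Made-up scores for two groups (illustrative values only).
group_a = [12, 15, 14, 16, 13, 17, 15, 14]
group_b = [10, 11, 9, 12, 10, 11, 13, 10]

p = permutation_p_value(group_a, group_b)
# Step 3: reject H0 if the result would be very unlikely under H0.
decision = "reject H0" if p <= 0.05 else "retain H0"
print(f"p = {p:.4f} -> {decision}")
```

Shuffling the group labels simulates a world in which the null hypothesis is true, so the share of shuffles that match or exceed the observed difference estimates how likely the sample result would be by chance alone.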

Following this logic, we can begin to understand why Mehl and his colleagues concluded that there is no difference in talkativeness between women and men in the population. In essence, they asked the following question: “If there were no difference in the population, how likely is it that we would find a small difference of d = 0.06 in our sample?” Their answer to this question was that this sample relationship would be fairly likely if the null hypothesis were true. Therefore, they retained the null hypothesis—concluding that there is no evidence of a sex difference in the population. We can also see why Kanner and his colleagues concluded that there is a correlation between hassles and symptoms in the population. They asked, “If the null hypothesis were true, how likely is it that we would find a strong correlation of +.60 in our sample?” Their answer to this question was that this sample relationship would be fairly unlikely if the null hypothesis were true. Therefore, they rejected the null hypothesis in favor of the alternative hypothesis—concluding that there is a positive correlation between these variables in the population.

A crucial step in null hypothesis testing is finding the probability of a result at least as extreme as the sample result if the null hypothesis were true. This probability is called the p value. A low p value means that the sample result would be unlikely if the null hypothesis were true and leads to the rejection of the null hypothesis. A p value that is not low means that the sample result would be likely if the null hypothesis were true and leads to the retention of the null hypothesis. But how low must the p value be before the sample result is considered unlikely enough to reject the null hypothesis? In null hypothesis testing, this criterion is called α (alpha) and is almost always set to .05. If there is a 5% chance or less of a result as extreme as the sample result if the null hypothesis were true, then the null hypothesis is rejected. When this happens, the result is said to be statistically significant. If there is greater than a 5% chance of a result as extreme as the sample result when the null hypothesis is true, then the null hypothesis is retained. This does not necessarily mean that the researcher accepts the null hypothesis as true, only that there is not currently enough evidence to reject it. Researchers often use the expression “fail to reject the null hypothesis” rather than “retain the null hypothesis,” but they never use the expression “accept the null hypothesis.”

The Misunderstood p Value

The p value is one of the most misunderstood quantities in psychological research (Cohen, 1994). Even professional researchers misinterpret it, and it is not unusual for such misinterpretations to appear in statistics textbooks!

The most common misinterpretation is that the p value is the probability that the null hypothesis is true, that is, that the sample result occurred by chance. For example, a misguided researcher might say that because the p value is .02, there is only a 2% chance that the result is due to chance and a 98% chance that it reflects a real relationship in the population. But this is incorrect. The p value is really the probability of a result at least as extreme as the sample result if the null hypothesis were true. So a p value of .02 means that if the null hypothesis were true, a sample result this extreme would occur only 2% of the time.

You can avoid this misunderstanding by remembering that the p value is not the probability that any particular hypothesis is true or false. Instead, it is the probability of obtaining a result at least as extreme as the sample result if the null hypothesis were true.

“Null Hypothesis” retrieved from http://imgs.xkcd.com/comics/null_hypothesis.png (CC-BY-NC 2.5)

Role of Sample Size and Relationship Strength

Recall that null hypothesis testing involves answering the question, “If the null hypothesis were true, what is the probability of a sample result as extreme as this one?” In other words, “What is the p value?” It can be helpful to see that the answer to this question depends on just two considerations: the strength of the relationship and the size of the sample. Specifically, the stronger the sample relationship and the larger the sample, the less likely the result would be if the null hypothesis were true. That is, the lower the p value. This should make sense. Imagine a study in which a sample of 500 women is compared with a sample of 500 men in terms of some psychological characteristic, and Cohen’s d is a strong 0.50. If there were really no sex difference in the population, then a result this strong based on such a large sample should seem highly unlikely. Now imagine a similar study in which a sample of three women is compared with a sample of three men, and Cohen’s d is a weak 0.10. If there were no sex difference in the population, then a relationship this weak based on such a small sample should seem likely. And this is precisely why the null hypothesis would be rejected in the first example and retained in the second.
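The two examples above can be checked with a rough calculation. The sketch below assumes two equal-sized groups and uses a normal approximation to the two-sample test statistic, z ≈ d · √(n/2), where n is the size of each group. With only three people per group a t distribution would be more appropriate, so treat the small-sample figure as a ballpark only. The function name `approx_p_value` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_p_value(d: float, n_per_group: int) -> float:
    """Rough two-sided p-value for Cohen's d with equal group sizes.

    Normal approximation: z = |d| * sqrt(n / 2).
    """
    z = abs(d) * sqrt(n_per_group / 2)
    return 2 * (1 - NormalDist().cdf(z))


strong_large = approx_p_value(0.50, 500)  # strong relationship, large sample
weak_small = approx_p_value(0.10, 3)      # weak relationship, tiny sample

print(f"d = 0.50, n = 500 per group: p ~ {strong_large:.2e}")
print(f"d = 0.10, n = 3 per group:   p ~ {weak_small:.2f}")
```

The first p value is far below .05 and the second is far above it, matching the decisions to reject the null hypothesis in the first example and retain it in the second.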

Of course, sometimes the result can be weak and the sample large, or the result can be strong and the sample small. In these cases, the two considerations trade off against each other so that a weak result can be statistically significant if the sample is large enough and a strong relationship can be statistically significant even if the sample is small. Table 13.1 shows roughly how relationship strength and sample size combine to determine whether a sample result is statistically significant. The columns of the table represent the three levels of relationship strength: weak, medium, and strong. The rows represent four sample sizes that can be considered small, medium, large, and extra large in the context of psychological research. Thus each cell in the table represents a combination of relationship strength and sample size. If a cell contains the word Yes, then this combination would be statistically significant for both Cohen’s d and Pearson’s r. If it contains the word No, then it would not be statistically significant for either. There is one cell where the decision for d and r would be different and another where it might be different depending on some additional considerations, which are discussed in Section 13.2 “Some Basic Null Hypothesis Tests.”

| Sample Size | Weak | Medium | Strong |
|---|---|---|---|
| Small (N = 20) | No | No | d = Maybe, r = Yes |
| Medium (N = 50) | No | Yes | Yes |
| Large (N = 100) | d = Yes, r = No | Yes | Yes |
| Extra large (N = 500) | Yes | Yes | Yes |

Although Table 13.1 provides only a rough guideline, it shows very clearly that weak relationships based on medium or small samples are never statistically significant and that strong relationships based on medium or larger samples are always statistically significant. If you keep this lesson in mind, you will often know whether a result is statistically significant based on the descriptive statistics alone. It is extremely useful to be able to develop this kind of intuitive judgment. One reason is that it allows you to develop expectations about how your formal null hypothesis tests are going to come out, which in turn allows you to detect problems in your analyses. For example, if your sample relationship is strong and your sample is medium, then you would expect to reject the null hypothesis. If for some reason your formal null hypothesis test indicates otherwise, then you need to double-check your computations and interpretations. A second reason is that the ability to make this kind of intuitive judgment is an indication that you understand the basic logic of this approach in addition to being able to do the computations.

Statistical Significance Versus Practical Significance

Table 13.1 illustrates another extremely important point. A statistically significant result is not necessarily a strong one. Even a very weak result can be statistically significant if it is based on a large enough sample. This is closely related to Janet Shibley Hyde’s argument about sex differences (Hyde, 2007) [2]. The differences between women and men in mathematical problem solving and leadership ability are statistically significant. But the word significant can cause people to interpret these differences as strong and important, perhaps even important enough to influence the college courses they take or even who they vote for. As we have seen, however, these statistically significant differences are actually quite weak, perhaps even “trivial.”

This is why it is important to distinguish between the statistical significance of a result and the practical significance of that result. Practical significance refers to the importance or usefulness of the result in some real-world context. Many sex differences are statistically significant—and may even be interesting for purely scientific reasons—but they are not practically significant. In clinical practice, this same concept is often referred to as “clinical significance.” For example, a study on a new treatment for social phobia might show that it produces a statistically significant positive effect. Yet this effect still might not be strong enough to justify the time, effort, and other costs of putting it into practice—especially if easier and cheaper treatments that work almost as well already exist. Although statistically significant, this result would be said to lack practical or clinical significance.
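A rough sketch of how a trivially small effect becomes statistically significant as the sample grows. It assumes two equal-sized groups, uses the identity t = d·√(n/2) for the test statistic, and approximates the t distribution with a normal distribution (reasonable only for large samples). The effect size of 0.05 and the sample sizes are made-up values.

```python
from math import sqrt
from statistics import NormalDist

def approx_two_sided_p(d: float, n_per_group: int) -> float:
    """Normal approximation to the two-sided p value for two equal-sized groups."""
    test_statistic = d * sqrt(n_per_group / 2)  # t = d * sqrt(n / 2)
    return 2 * (1 - NormalDist().cdf(abs(test_statistic)))

tiny_effect = 0.05  # hypothetical Cohen's d, far below the usual "weak" benchmark
for n in (100, 1_000, 10_000):
    p = approx_two_sided_p(tiny_effect, n)
    print(f"n per group = {n:>6}: p is about {p:.4f}")
```

The effect size never changes across the three rows. Only the sample size does, which is why the p value alone cannot indicate practical importance.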

“Conditional Risk” retrieved from http://imgs.xkcd.com/comics/conditional_risk.png (CC-BY-NC 2.5)

Key Takeaways

- Null hypothesis testing is a formal approach to deciding whether a statistical relationship in a sample reflects a real relationship in the population or is just due to chance.

- The logic of null hypothesis testing involves assuming that the null hypothesis is true, finding how likely the sample result would be if this assumption were correct, and then making a decision. If the sample result would be unlikely if the null hypothesis were true, then it is rejected in favor of the alternative hypothesis. If it would not be unlikely, then the null hypothesis is retained.

- The probability of obtaining the sample result if the null hypothesis were true (the p value) is based on two considerations: relationship strength and sample size. Reasonable judgments about whether a sample relationship is statistically significant can often be made by quickly considering these two factors.

- Statistical significance is not the same as relationship strength or importance. Even weak relationships can be statistically significant if the sample size is large enough. It is important to consider relationship strength and the practical significance of a result in addition to its statistical significance.

Exercises

- Discussion: Imagine a study showing that people who eat more broccoli tend to be happier. Explain for someone who knows nothing about statistics why the researchers would conduct a null hypothesis test.

- Practice: Use Table 13.1 to decide whether each of the following results is statistically significant.

  - The correlation between two variables is r = −.78 based on a sample size of 137.

  - The mean score on a psychological characteristic for women is 25 (SD = 5) and the mean score for men is 24 (SD = 5). There were 12 women and 10 men in this study.

  - In a memory experiment, the mean number of items recalled by the 40 participants in Condition A was 0.50 standard deviations greater than the mean number recalled by the 40 participants in Condition B.

  - In another memory experiment, the mean scores for participants in Condition A and Condition B came out exactly the same!

  - A student finds a correlation of r = .04 between the number of units the students in his research methods class are taking and the students’ level of stress.

- Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49, 997–1003.

- Hyde, J. S. (2007). New directions in the study of gender similarities and differences. Current Directions in Psychological Science, 16, 259–263.


Null & Alternative Hypotheses | Definitions, Templates & Examples

Published on May 6, 2022 by Shaun Turney. Revised on June 22, 2023.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis (H0): There’s no effect in the population.

- Alternative hypothesis (Ha or H1): There’s an effect in the population.


The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”:

- The null hypothesis (H0) answers “No, there’s no effect in the population.”

- The alternative hypothesis (Ha) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample. It’s critical for your research to write strong hypotheses.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypothesis. However, the hypotheses can also be phrased in a general way that applies to any test.


The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population (p ≤ α), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.

Although “fail to reject” may sound awkward, it’s the only wording that statisticians accept. Be careful not to say you “prove” or “accept” the null hypothesis.

Null hypotheses often include phrases such as “no effect,” “no difference,” or “no relationship.” When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

You can never know with complete certainty whether there is an effect in the population. Some percentage of the time, your inference about the population will be incorrect. When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s a type II error.
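The decision rule and the idea of a type I error can be sketched with a small simulation. Every sample below is drawn from a population in which the null hypothesis is true, so each rejection is a type I error. The choice of a z test with a known population standard deviation, the sample size of 30, the seed, and the helper names `decide` and `z_test_p` are assumptions made for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist

ALPHA = 0.05

def decide(p_value: float, alpha: float = ALPHA) -> str:
    """Apply the decision rule: reject when p <= alpha, otherwise fail to reject."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"

def z_test_p(sample, mu0=0.0, sigma=1.0):
    """Two-sided z test p value, assuming the population SD (sigma) is known."""
    z = (sum(sample) / len(sample) - mu0) / (sigma / sqrt(len(sample)))
    return 2 * (1 - NormalDist().cdf(abs(z)))

rng = random.Random(42)
reps = 4000
# The true mean really is 0, so H0 is true in every simulated study.
false_rejections = sum(
    decide(z_test_p([rng.gauss(0, 1) for _ in range(30)])) == "reject H0"
    for _ in range(reps)
)
type_i_rate = false_rejections / reps
print(f"Share of true null hypotheses rejected: {type_i_rate:.3f}")
```

The share of false rejections should land close to α, which is what it means to test at the 5% level.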

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

| Research question | General null hypothesis (H0) | Test-specific null hypothesis (H0) |
|---|---|---|
| Does tooth flossing affect the number of cavities? | Tooth flossing has no effect on the number of cavities. | Two-sample t test: The mean number of cavities per person does not differ between the flossing group (µ1) and the non-flossing group (µ2) in the population; µ1 = µ2. |
| Does the amount of text highlighted in the textbook affect exam scores? | The amount of text highlighted in the textbook has no effect on exam scores. | Linear regression: There is no relationship between the amount of text highlighted and exam scores in the population; β = 0. |
| Does daily meditation decrease the incidence of depression? | Daily meditation does not decrease the incidence of depression.* | Two-proportions z test: The proportion of people with depression in the daily-meditation group (p1) is greater than or equal to the no-meditation group (p2) in the population; p1 ≥ p2. |

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and p1 = p2.

The alternative hypothesis (Ha) is the other answer to your research question. It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.

Alternative hypotheses often include phrases such as “an effect,” “a difference,” or “a relationship.” When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes < or >). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

| Research question | General alternative hypothesis (Ha) | Test-specific alternative hypothesis (Ha) |
|---|---|---|
| Does tooth flossing affect the number of cavities? | Tooth flossing has an effect on the number of cavities. | Two-sample t test: The mean number of cavities per person differs between the flossing group (µ1) and the non-flossing group (µ2) in the population; µ1 ≠ µ2. |
| Does the amount of text highlighted in a textbook affect exam scores? | The amount of text highlighted in the textbook has an effect on exam scores. | Linear regression: There is a relationship between the amount of text highlighted and exam scores in the population; β ≠ 0. |
| Does daily meditation decrease the incidence of depression? | Daily meditation decreases the incidence of depression. | Two-proportions z test: The proportion of people with depression in the daily-meditation group (p1) is less than the no-meditation group (p2) in the population; p1 < p2. |

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question.

- They both make claims about the population.

- They’re both evaluated by statistical tests.

However, there are important differences between the two types of hypotheses, summarized in the following table.

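| | Null hypothesis (H0) | Alternative hypothesis (Ha) |
|---|---|---|
| Definition | A claim that there is no effect in the population. | A claim that there is an effect in the population. |
| Common phrases | “No effect,” “no difference,” “no relationship” | “An effect,” “a difference,” “a relationship” |
| Symbol used | Equality symbol (=, ≥, or ≤) | Inequality symbol (≠, <, or >) |
| p ≤ α | Rejected | Supported |
| p > α | Failed to reject | Not supported |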


To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

General template sentences

The only things you need to know to use these general template sentences are your dependent and independent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

Does independent variable affect dependent variable?

- Null hypothesis (H0): Independent variable does not affect dependent variable.

- Alternative hypothesis (Ha): Independent variable affects dependent variable.
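The general templates above can be filled in mechanically. The following is a small sketch. The function name `write_hypotheses` and the example variables are hypothetical, and the output still needs a human check for grammar.

```python
def write_hypotheses(independent: str, dependent: str) -> dict:
    """Fill the general template sentences with the two variable names."""
    # Capitalize only the first letter so acronyms inside the name survive.
    subject = independent[0].upper() + independent[1:]
    return {
        "question": f"Does {independent} affect {dependent}?",
        "null": f"{subject} does not affect {dependent}.",
        "alternative": f"{subject} affects {dependent}.",
    }

hypotheses = write_hypotheses("tooth flossing", "the number of cavities")
for label, sentence in hypotheses.items():
    print(f"{label}: {sentence}")
```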

Test-specific template sentences

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

| Statistical test | Null hypothesis (H0) | Alternative hypothesis (Ha) |
|---|---|---|
| Two-sample t test or one-way ANOVA with two groups | The mean dependent variable does not differ between group 1 (µ1) and group 2 (µ2) in the population; µ1 = µ2. | The mean dependent variable differs between group 1 (µ1) and group 2 (µ2) in the population; µ1 ≠ µ2. |
| One-way ANOVA with three groups | The mean dependent variable does not differ between group 1 (µ1), group 2 (µ2), and group 3 (µ3) in the population; µ1 = µ2 = µ3. | The mean dependent variable of group 1 (µ1), group 2 (µ2), and group 3 (µ3) are not all equal in the population. |
| Pearson correlation | There is no correlation between independent variable and dependent variable in the population; ρ = 0. | There is a correlation between independent variable and dependent variable in the population; ρ ≠ 0. |
| Simple linear regression | There is no relationship between independent variable and dependent variable in the population; β = 0. | There is a relationship between independent variable and dependent variable in the population; β ≠ 0. |
| Two-proportions z test | The dependent variable expressed as a proportion does not differ between group 1 (p1) and group 2 (p2) in the population; p1 = p2. | The dependent variable expressed as a proportion differs between group 1 (p1) and group 2 (p2) in the population; p1 ≠ p2. |

Note: The template sentences above assume that you’re performing two-tailed tests, which is why the alternative hypotheses use ≠ rather than < or >. Two-tailed tests are appropriate for most studies.


Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

The null hypothesis is often abbreviated as H0. When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as Ha or H1. When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (“x affects y because …”).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article


Turney, S. (2023, June 22). Null & Alternative Hypotheses | Definitions, Templates & Examples. Scribbr. Retrieved August 5, 2024, from https://www.scribbr.com/statistics/null-and-alternative-hypotheses/


8.6 - Interaction Effects

Example 8-4: Depression Treatments

Now that we've clarified what additive effects are, let's take a look at an example where including "interaction terms" is appropriate.

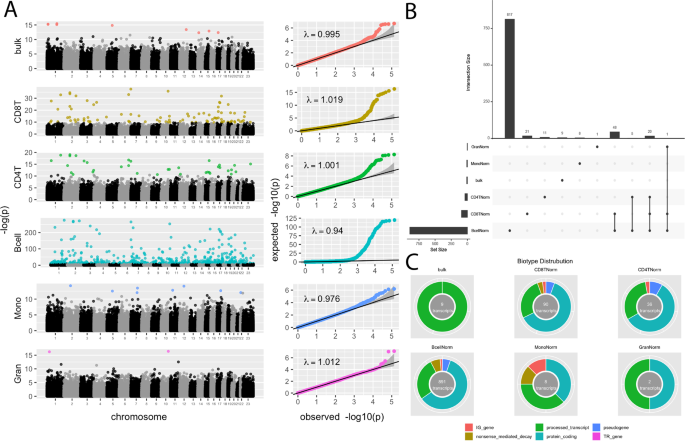

Some researchers (Daniel, 1999) were interested in comparing the effectiveness of three treatments for severe depression. For the sake of simplicity, we denote the three treatments A, B, and C. The researchers collected the following data (Depression Data) on a random sample of n = 36 severely depressed individuals:

- \(y_{i} =\) measure of the effectiveness of the treatment for individual i

- \(x_{i1} =\) age (in years) of individual i

- \(x_{i2} = 1\) if individual i received treatment A and 0, if not

- \(x_{i3} = 1\) if individual i received treatment B and 0, if not

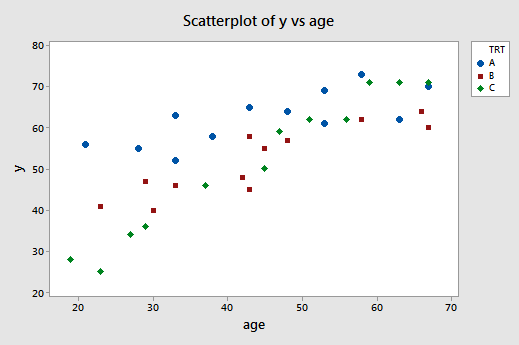

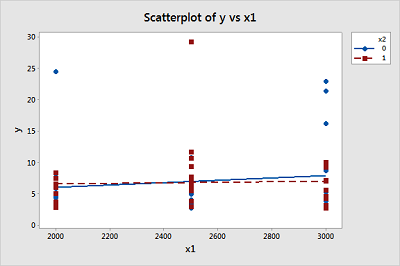

A scatter plot of the data with treatment effectiveness on the y -axis and age on the x -axis looks like this:

The blue circles represent the data for individuals receiving treatment A, the red squares represent the data for individuals receiving treatment B, and the green diamonds represent the data for individuals receiving treatment C.

In the previous example, the two estimated regression functions had the same slopes, that is, they were parallel. If you tried to draw three best-fitting lines through the data of this example, do you think the slopes of your lines would be the same? Probably not! In this case, we need to include what are called "interaction terms" in our formulated regression model.

A (second-order) multiple regression model with interaction terms is:

\(y_i=\beta_0+\beta_1x_{i1}+\beta_2x_{i2}+\beta_3x_{i3}+\beta_{12}x_{i1}x_{i2}+\beta_{13}x_{i1}x_{i3}+\epsilon_i\)

and the independent error terms \(\epsilon_i\) follow a normal distribution with mean 0 and equal variance \(\sigma^{2}\). Perhaps not surprisingly, the terms \(x_{i1} x_{i2}\) and \(x_{i1} x_{i3}\) are the interaction terms in the model.

Let's investigate our formulated model to discover in what way the predictors have an "interaction effect" on the response. We start by determining the formulated regression function for each of the three treatments. In short, after a little bit of algebra (see below), we learn that the model defines three different regression functions, one for each of the three treatments:

| Treatment | Formulated regression function |

|---|---|

| If patient receives A, then \( \left(x_{i2} = 1, x_{i3} = 0 \right) \) and ... | \(\mu_Y=(\beta_0+\beta_2)+(\beta_1+\beta_{12})x_{i1}\) |

| If patient receives B, then \( \left(x_{i2} = 0, x_{i3} = 1 \right) \) and ... | \(\mu_Y=(\beta_0+\beta_3)+(\beta_1+\beta_{13})x_{i1}\) |

| If patient receives C, then \( \left(x_{i2} = 0, x_{i3} = 0 \right) \) and ... | \(\mu_Y=\beta_0+\beta_{1}x_{i1}\) |

So, in what way does including the interaction terms, \(x_{i1} x_{i2}\) and \(x_{i1} x_{i3}\), in the model imply that the predictors have an "interaction effect" on the mean response? Note that the slopes of the three regression functions differ: the slope of the first line is \(\beta_1 + \beta_{12}\), the slope of the second line is \(\beta_1 + \beta_{13}\), and the slope of the third line is \(\beta_1\). What does this mean in a practical sense? It means that...

- the effect of the individual's age \(\left( x_1 \right)\) on the treatment's mean effectiveness \(\left(\mu_Y \right)\) depends on the treatment \(\left(x_2 \text{ and } x_3\right)\), and ...

- the effect of treatment \(\left(x_2 \text{ and } x_3\right)\) on the treatment's mean effectiveness \(\left(\mu_Y \right)\) depends on the individual's age \(\left( x_1 \right)\).

In general, then, what does it mean for two predictors "to interact"?

- Two predictors interact if the effect on the response variable of one predictor depends on the value of the other.

- A slope parameter can no longer be interpreted as the change in the mean response for each unit increase in the predictor, while the other predictors are held constant.

And, what are "interaction effects"?

A regression model contains interaction effects if the response function is not additive and cannot be written as a sum of functions of the predictor variables. That is, a regression model contains interaction effects if:

\(\mu_Y \ne f_1(x_1)+f_2(x_2)+ \cdots +f_{p-1}(x_{p-1})\)

For our example concerning treatment for depression, the mean response:

\(\mu_Y=\beta_0+\beta_1x_{1}+\beta_2x_{2}+\beta_3x_{3}+\beta_{12}x_{1}x_{2}+\beta_{13}x_{1}x_{3}\)

cannot be separated into distinct functions of each of the individual predictors. That is, there is no way of "breaking apart" \(\beta_{12} x_1 x_2 \text{ and } \beta_{13} x_1 x_3\) into distinct pieces. Therefore, we say that \(x_1 \text{ and } x_2\) interact, and \(x_1 \text{ and } x_3\) interact.

In returning to our example, let's recall that the appropriate steps in any regression analysis are:

- Model formulation

- Model estimation

- Model evaluation

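So far, within the model-building step, all we've done is formulate the regression model as:

\(y_i=\beta_0+\beta_1x_{i1}+\beta_2x_{i2}+\beta_3x_{i3}+\beta_{12}x_{i1}x_{i2}+\beta_{13}x_{i1}x_{i3}+\epsilon_i\)

We can use Minitab, or any other statistical software for that matter, to estimate the model. Doing so, Minitab reports: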

Regression Equation

y = 6.21 + 1.0334 age + 41.30 x2+ 22.71 x3 - 0.703 agex2 - 0.510 agex3

Now, if we plug the possible values for \(x_2 \text{ and } x_3\) into the estimated regression function, we obtain the three "best fitting" lines, one for each treatment (A, B, and C), through the data. Here's the algebra for determining the estimated regression function for patients receiving treatment A.
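Setting \(x_2 = 1\) and \(x_3 = 0\) in the estimated regression equation reported above, the steps for treatment A can be reconstructed as follows. The coefficients are the ones in the Minitab output, and the result is rounded to three decimals.

```latex
\begin{align*}
\hat{y} &= 6.21 + 1.0334 x_1 + 41.30(1) + 22.71(0) - 0.703 x_1(1) - 0.510 x_1(0) \\
        &= (6.21 + 41.30) + (1.0334 - 0.703) x_1 \\
        &= 47.51 + 0.330 x_1
\end{align*}
```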

Doing similar algebra for patients receiving treatments B and C, we obtain:

| Treatment | Estimated regression function |

|---|---|

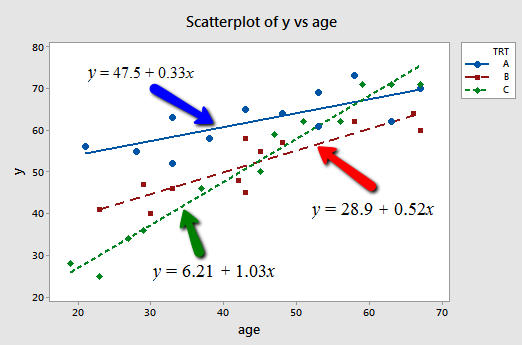

| If patient receives A, then \(\left(x_2 = 1, x_3 = 0 \right)\) and ... | \(\hat{y}=47.5+0.33x_1\) |

| If patient receives B, then \(\left(x_2 = 0, x_3 = 1 \right)\) and ... | \(\hat{y}=28.9+0.52x_1\) |

| If patient receives C, then \(\left(x_2 = 0, x_3 = 0 \right)\) and ... | \(\hat{y}=6.21+1.03x_1\) |

And, plotting the three "best fitting" lines, we obtain:

What do the estimated slopes tell us?

- For patients in this study receiving treatment A, the effectiveness of the treatment is predicted to increase by 0.33 units for every additional year in age.

- For patients in this study receiving treatment B, the effectiveness of the treatment is predicted to increase by 0.52 units for every additional year in age.

- For patients in this study receiving treatment C, the effectiveness of the treatment is predicted to increase by 1.03 units for every additional year in age.

In short, the effect of age on the predicted treatment effectiveness depends on the treatment given. That is, age appears to interact with treatment in its impact on treatment effectiveness. The interaction is exhibited graphically by the "nonparallelness" (is that a word?) of the lines.
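The three fitted lines can be recovered directly from the reported coefficients. This is a minimal sketch: the dictionary keys and the function name `line_for` are naming assumptions, while the numbers are those in the Minitab regression equation.

```python
# Coefficients as reported in the Minitab regression equation.
COEF = {
    "intercept": 6.21, "age": 1.0334,
    "x2": 41.30, "x3": 22.71,
    "age_x2": -0.703, "age_x3": -0.510,
}

# Indicator coding from the example: (x2, x3) for each treatment.
TREATMENTS = {"A": (1, 0), "B": (0, 1), "C": (0, 0)}

def line_for(treatment: str):
    """Return (intercept, slope) of the fitted effectiveness-versus-age line."""
    x2, x3 = TREATMENTS[treatment]
    intercept = COEF["intercept"] + COEF["x2"] * x2 + COEF["x3"] * x3
    slope = COEF["age"] + COEF["age_x2"] * x2 + COEF["age_x3"] * x3
    return intercept, slope

for name in TREATMENTS:
    intercept, slope = line_for(name)
    print(f"Treatment {name}: y-hat = {intercept:.2f} + {slope:.3f} * age")
```

Because the interaction coefficients shift the slope as well as the intercept, the three lines are not parallel.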

Of course, our primary goal is not to draw conclusions about this particular sample of depressed individuals, but rather about the entire population of depressed individuals. That is, we want to use our estimated model to draw conclusions about the larger population of depressed individuals. Before we do so, however, we first should evaluate the model.

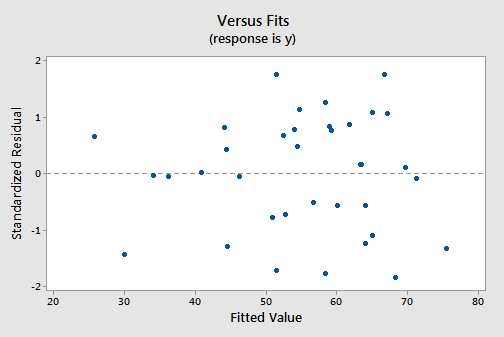

The residuals versus fits plot:

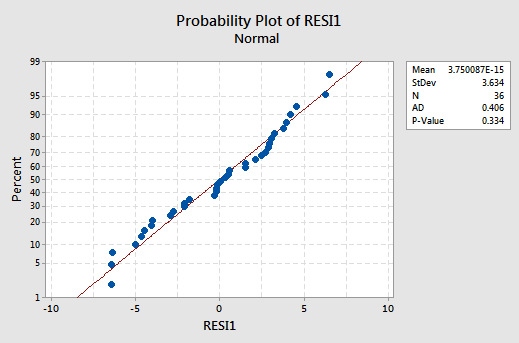

exhibits all of the "good" behavior, suggesting that the model fits well, there are no obvious outliers, and the error variances are indeed constant. And, the normal probability plot:

exhibits a linear trend and a large P -value, suggesting that the error terms are indeed normally distributed.

Having successfully built —formulated, estimated, and evaluated —a model, we now can use the model to answer our research questions. Let's consider two different questions that we might want to be answered.

First research question. For every age, is there a difference in the mean effectiveness for the three treatments? As is usually the case, our formulated regression model helps determine how to answer the research question. Our formulated regression model suggests that answering the question involves testing whether the population regression functions are identical.

That is, we need to test the null hypothesis \(H_0 \colon \beta_2 = \beta_3 =\beta_{12} = \beta_{13} = 0\) against the alternative \(H_A \colon\) at least one of these slope parameters is not 0.

We know how to do that! The relevant software output:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |
|---|---|---|---|---|---|
| Regression | 5 | 4932.85 | 986.57 | 64.04 | 0.000 |
| age | 1 | 3424.43 | 3424.43 | 222.29 | 0.000 |
| x2 | 1 | 803.80 | 803.80 | 52.18 | 0.000 |
| x3 | 1 | 1.19 | 1.19 | 0.08 | 0.783 |
| agex2 | 1 | 375.00 | 375.00 | 24.34 | 0.000 |
| agex3 | 1 | 328.42 | 328.42 | 21.32 | 0.000 |
| Error | 30 | 462.15 | 15.40 | | |
| Lack-of-Fit | 27 | 285.15 | 10.56 | 0.18 | 0.996 |
| Pure Error | 3 | 177.00 | 59.00 | | |
| Total | 35 | 5395.0 | | | |

tells us that the appropriate partial F-statistic for testing the above hypothesis is:

\(F=\frac{(803.8+1.19+375+328.42)/4}{15.4}=24.49\)

And, Minitab tells us:

F Distribution with 4 DF in Numerator and 30 DF in denominator

| x | \(p(X \leq x)\) |

|---|---|

| 24.49 | 1.00000 |

that the probability of observing an F-statistic (with 4 numerator and 30 denominator degrees of freedom) less than our observed test statistic 24.49 is > 0.999. Therefore, our P-value is < 0.001. We can reject our null hypothesis. There is sufficient evidence at the \(\alpha = 0.05\) level to conclude that there is a significant difference in the mean effectiveness for the three treatments.

Second research question. Does the effect of age on the treatment's effectiveness depend on the treatment? Our formulated regression model suggests that answering the question involves testing whether the two interaction parameters \(\beta_{12} \text{ and } \beta_{13}\) are significant. That is, we need to test the null hypothesis \(H_0 \colon \beta_{12} = \beta_{13} = 0\) against the alternative \(H_A \colon\) at least one of the interaction parameters is not 0.

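The Analysis of Variance output shown above tells us that the appropriate partial F-statistic for testing this hypothesis is:

\(F=\dfrac{(375+328.42)/2}{15.4}=22.84\)

And, Minitab tells us: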

F Distribution with 2 DF in Numerator and 30 DF in denominator

| x | \(p(X \leq x)\) |

|---|---|

| 22.84 | 1.00000 |

that the probability of observing an F-statistic (with 2 numerator and 30 denominator degrees of freedom) less than our observed test statistic 22.84 is > 0.999. Therefore, our P-value is < 0.001. We can reject our null hypothesis. There is sufficient evidence at the \(\alpha = 0.05\) level to conclude that the effect of age on the treatment's effectiveness depends on the treatment.
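Both partial F-statistics follow the same recipe: add the sequential sums of squares for the terms being tested, divide by the number of terms, and divide again by the error mean square. The sketch below reproduces the two values from the Analysis of Variance table. The function name `partial_f` is an assumption, and the p values would still need an F distribution from statistical software.

```python
# Sequential sums of squares, in the order the terms entered the model.
SEQ_SS = {"age": 3424.43, "x2": 803.80, "x3": 1.19, "agex2": 375.00, "agex3": 328.42}
MSE = 15.40  # error mean square (30 degrees of freedom), rounded as in the text

def partial_f(terms, seq_ss=SEQ_SS, mse=MSE):
    """Partial F-statistic for dropping `terms` from the full model.

    Summing sequential SS is only valid when the tested terms are the
    last ones entered, which holds for both hypotheses in this example.
    """
    numerator_ms = sum(seq_ss[t] for t in terms) / len(terms)
    return numerator_ms / mse

f_treatment = partial_f(["x2", "x3", "agex2", "agex3"])  # first research question
f_interaction = partial_f(["agex2", "agex3"])            # second research question
print(f"F (4, 30) = {f_treatment:.2f}")
print(f"F (2, 30) = {f_interaction:.2f}")
```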

A model with an interaction term

For the depression study, plug the appropriate values for \(x_2 \text{ and } x_3\) into the formulated regression function and perform the necessary algebra to determine:

- The formulated regression function for patients receiving treatment B.

- The formulated regression function for patients receiving treatment C.

\(\mu_Y = \beta_0+\beta_1 x_1+\beta_2 x_2+\beta_3 x_3+\beta_{12}x_1 x_2+\beta_{13} x_1 x_3\)

Treatment B, \(x_2 = 0 , x_3 = 1\), so

\(\mu_Y = \beta_0+\beta_1 x_1+\beta_2(0)+\beta_3(1)+\beta_{12} x_1(0)+\beta_{13} x_1(1) = (\beta_0+\beta_3)+(\beta_1+\beta_{13})x_1\)

Treatment C, \(x_2 = 0 , x_3 = 0\), so

\(\mu_Y = \beta_0+\beta_1 x_1+\beta_2(0)+\beta_3(0)+\beta_{12} x_1(0)+\beta_{13} x_1(0) = \beta_0+\beta_1 x_1\)

For the depression study, plug the appropriate values for \(x_2 \text{ and } x_3\) into the estimated regression function and perform the necessary algebra to determine:

- The estimated regression function for patients receiving treatment B.

- The estimated regression function for patients receiving treatment C.

\(\hat{y} = 6.21 + 1.0334 x_1 + 41.30 x_2+22.71 x_3 - 0.703 x_1 x_2 - 0.510 x_1 x_3\)

\(\hat{y} = 6.21 + 1.0334 x_1 + 41.30(0) + 22.71(1) - 0.703 x_1(0) - 0.510 x_1(1) = (6.21 + 22.71)+(1.0334 - 0.510) x_1 = 28.92 + 0.523 x_1\)

\(\hat{y} = 6.21 + 1.0334 x_1 + 41.30(0) + 22.71(0) - 0.703 x_1(0) - 0.510 x_1(0) = 6.21 + 1.033 x_1\)

For the first research question that we addressed for the depression study, show that there is no difference in the mean effectiveness between treatments B and C, for all ages, provided that \(\beta_3 = 0 \text{ and } \beta_{13} = 0\). (HINT: Follow the argument presented in the chalk-talk comparing treatments A and C.)

\(\mu_Y|\text{Treatment B} - \mu_Y|\text{Treatment C} = (\beta_0 + \beta_3)+(\beta_1 + \beta_{13}) x_1 - (\beta_0 + \beta_1 x_1) = \beta_3 + \beta_{13} x_1 = 0\), if \(\beta_3 = \beta_{13} = 0\)

A study of atmospheric pollution on the slopes of the Blue Ridge Mountains (Tennessee) was conducted. The Lead Moss data contains the levels of lead found in 70 fern moss specimens (in micrograms of lead per gram of moss tissue) collected from the mountain slopes, as well as the elevation of the moss specimen (in feet) and the direction (1 if east, 0 if west) of the slope face.

(a) Write the equation of a second-order model relating mean lead level, E(y), to elevation \(\left(x_1 \right)\) and the slope face \(\left(x_2 \right)\) that includes an interaction between elevation and slope face in the model.

(b) Graph the relationship between mean lead level and elevation for the different slope faces that are hypothesized by the model in part a.

(c) In terms of the β's of the model in part a, give the change in lead level for every one-foot increase in elevation for moss specimens on the east slope.

(d) Fit the model in part a to the data using an available statistical software package. Is the overall model statistically useful for predicting lead level? Test using \(α = 0.10\).

(e) Write the estimated equation of the model in part a relating mean lead level, E(y), to elevation \(\left(x_1 \right)\) and slope face \(\left(x_2 \right)\).

(a) \(\mu_Y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_{12} x_1 x_2\)

(c) East slope, \(x_2=1\), \(\mu_Y = \beta_0 + \beta_1 x_1 + \beta_2(1) + \beta_{12} x_1(1) = (\beta_0 + \beta_2)+(\beta_1 + \beta_{12}) x_1\), so average lead level changes by \(\beta_1 + \beta_{12}\) micrograms of lead per gram of moss tissue for every one-foot increase in elevation for moss specimens on the east slope.

(d) Since the p-value for testing whether the overall model is statistically useful for predicting lead level is 0.857, we conclude that this model is not statistically useful.

(e) The estimated equation is shown in the Minitab output above, but since the model is not statistically useful, this equation doesn’t do us much good.


Understanding Null Hypothesis Testing

Rajiv S. Jhangiani; I-Chant A. Chiang; Carrie Cuttler; and Dana C. Leighton

Learning Objectives

- Explain the purpose of null hypothesis testing, including the role of sampling error.

- Describe the basic logic of null hypothesis testing.

- Describe the role of relationship strength and sample size in determining statistical significance and make reasonable judgments about statistical significance based on these two factors.

The Purpose of Null Hypothesis Testing

As we have seen, psychological research typically involves measuring one or more variables in a sample and computing descriptive summary data (e.g., means, correlation coefficients) for those variables. These descriptive data for the sample are called statistics. In general, however, the researcher’s goal is not to draw conclusions about that sample but to draw conclusions about the population that the sample was selected from. Thus researchers must use sample statistics to draw conclusions about the corresponding values in the population. These corresponding values in the population are called parameters. Imagine, for example, that a researcher measures the number of depressive symptoms exhibited by each of 50 adults with clinical depression and computes the mean number of symptoms. The researcher probably wants to use this sample statistic (the mean number of symptoms for the sample) to draw conclusions about the corresponding population parameter (the mean number of symptoms for adults with clinical depression).

Unfortunately, sample statistics are not perfect estimates of their corresponding population parameters. This is because there is a certain amount of random variability in any statistic from sample to sample. The mean number of depressive symptoms might be 8.73 in one sample of adults with clinical depression, 6.45 in a second sample, and 9.44 in a third, even though these samples are selected randomly from the same population. Similarly, the correlation (Pearson’s r) between two variables might be +.24 in one sample, −.04 in a second sample, and +.15 in a third, again even though these samples are selected randomly from the same population. This random variability in a statistic from sample to sample is called sampling error. (Note that the term error here refers to random variability and does not imply that anyone has made a mistake. No one “commits a sampling error.”)
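Sampling error is easy to see in a small simulation. The sketch below is illustrative only: the population values are made up (a normal distribution with mean 8 and standard deviation 3 is an assumption, not data from any study), and `sample_mean` is a hypothetical helper.

```python
import random
import statistics

random.seed(1)  # fixed seed so the sketch is repeatable

# Hypothetical population of symptom scores (values are invented).
population = [random.gauss(8.0, 3.0) for _ in range(100_000)]


def sample_mean(pop, n):
    """Mean of one simple random sample of size n drawn from pop."""
    return statistics.mean(random.sample(pop, n))


# Three samples of 50 from the SAME population give three different means.
sample_means = [sample_mean(population, 50) for _ in range(3)]
print("Population mean:", round(statistics.mean(population), 2))
print("Three sample means:", [round(m, 2) for m in sample_means])
```

The three sample means differ from one another and from the population mean even though nothing about the population changed between draws. That spread is the sampling error.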

One implication of this is that when there is a statistical relationship in a sample, it is not always clear that there is a statistical relationship in the population. A small difference between two group means in a sample might indicate that there is a small difference between the two group means in the population. But it could also be that there is no difference between the means in the population and that the difference in the sample is just a matter of sampling error. Similarly, a Pearson’s r value of −.29 in a sample might mean that there is a negative relationship in the population. But it could also be that there is no relationship in the population and that the relationship in the sample is just a matter of sampling error.

In fact, any statistical relationship in a sample can be interpreted in two ways:

- There is a relationship in the population, and the relationship in the sample reflects this.

- There is no relationship in the population, and the relationship in the sample reflects only sampling error.

The purpose of null hypothesis testing is simply to help researchers decide between these two interpretations.

The Logic of Null Hypothesis Testing

Null hypothesis testing (often called null hypothesis significance testing or NHST) is a formal approach to deciding between two interpretations of a statistical relationship in a sample. One interpretation is called the null hypothesis (often symbolized H0 and read as “H-zero”). This is the idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error. Informally, the null hypothesis is that the sample relationship “occurred by chance.” The other interpretation is called the alternative hypothesis (often symbolized as H1). This is the idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Again, every statistical relationship in a sample can be interpreted in either of these two ways: It might have occurred by chance, or it might reflect a relationship in the population. So researchers need a way to decide between them. Although there are many specific null hypothesis testing techniques, they are all based on the same general logic. The steps are as follows:

- Assume for the moment that the null hypothesis is true. There is no relationship between the variables in the population.

- Determine how likely the sample relationship would be if the null hypothesis were true.

- If the sample relationship would be extremely unlikely, then reject the null hypothesis in favor of the alternative hypothesis. If it would not be extremely unlikely, then retain the null hypothesis.

Following this logic, we can begin to understand why Mehl and his colleagues concluded that there is no difference in talkativeness between women and men in the population. In essence, they asked the following question: “If there were no difference in the population, how likely is it that we would find a small difference of d = 0.06 in our sample?” Their answer to this question was that this sample relationship would be fairly likely if the null hypothesis were true. Therefore, they retained the null hypothesis—concluding that there is no evidence of a sex difference in the population. We can also see why Kanner and his colleagues concluded that there is a correlation between hassles and symptoms in the population. They asked, “If the null hypothesis were true, how likely is it that we would find a strong correlation of +.60 in our sample?” Their answer to this question was that this sample relationship would be fairly unlikely if the null hypothesis were true. Therefore, they rejected the null hypothesis in favor of the alternative hypothesis—concluding that there is a positive correlation between these variables in the population.

A crucial step in null hypothesis testing is finding the probability of the sample result or a more extreme result if the null hypothesis were true (Lakens, 2017). [1] This probability is called the p value. A low p value means that the sample or more extreme result would be unlikely if the null hypothesis were true and leads to the rejection of the null hypothesis. A p value that is not low means that the sample or more extreme result would be likely if the null hypothesis were true and leads to the retention of the null hypothesis. But how low must the p value criterion be before the sample result is considered unlikely enough to reject the null hypothesis? In null hypothesis testing, this criterion is called α (alpha) and is almost always set to .05. If there is a 5% chance or less of a result at least as extreme as the sample result if the null hypothesis were true, then the null hypothesis is rejected. When this happens, the result is said to be statistically significant. If there is greater than a 5% chance of a result at least as extreme as the sample result when the null hypothesis is true, then the null hypothesis is retained. This does not necessarily mean that the researcher accepts the null hypothesis as true, only that there is not currently enough evidence to reject it. Researchers often use the expression “fail to reject the null hypothesis” rather than “retain the null hypothesis,” but they never use the expression “accept the null hypothesis.”
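The three-step logic and the α criterion can be sketched with a permutation test, which is one of many possible null hypothesis tests and is used here only because it makes the logic visible. The scores are made up, and `permutation_p_value` and `decide` are hypothetical helper names.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_shuffles=5000, seed=0):
    """Approximate two-sided p value for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        # Step 1: assume the null is true, so group labels are interchangeable.
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        # Step 2: count how often chance alone gives a result this extreme.
        # (The small tolerance guards against floating-point ties.)
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_shuffles


def decide(p_value, alpha=0.05):
    """Step 3: reject the null if p <= alpha, otherwise retain it."""
    return "reject" if p_value <= alpha else "retain"


# Made-up scores for illustration only.
group_a = [12, 15, 14, 16, 13, 17, 15, 14]
group_b = [11, 12, 10, 13, 12, 11, 14, 12]
p = permutation_p_value(group_a, group_b)
print(p, decide(p))
```

Shuffling the group labels simulates a world in which the null hypothesis is true. The p value is the share of those shuffled worlds that produce a difference at least as large as the one actually observed.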

The Misunderstood p Value

The p value is one of the most misunderstood quantities in psychological research (Cohen, 1994) [2]. Even professional researchers misinterpret it, and it is not unusual for such misinterpretations to appear in statistics textbooks!

The most common misinterpretation is that the p value is the probability that the null hypothesis is true, that is, the probability that the sample result occurred by chance. For example, a misguided researcher might say that because the p value is .02, there is only a 2% chance that the result is due to chance and a 98% chance that it reflects a real relationship in the population. But this is incorrect. The p value is really the probability of a result at least as extreme as the sample result if the null hypothesis were true. So a p value of .02 means that if the null hypothesis were true, a sample result this extreme would occur only 2% of the time.
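A simulation can make the correct reading concrete. In the sketch below, every simulated study samples from a population in which the null hypothesis is true by construction. The test used is a simple z test with a known standard deviation, chosen as an assumption to keep the code short; `z_test_p_value` is a hypothetical helper.

```python
import random
from statistics import NormalDist, mean


def z_test_p_value(sample, sigma=1.0):
    """Two-sided p value for H0: population mean = 0, with known sigma."""
    z = mean(sample) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


rng = random.Random(42)
n_studies, n = 4000, 25

# Every simulated study draws from a population where the null is TRUE.
p_values = [
    z_test_p_value([rng.gauss(0, 1) for _ in range(n)])
    for _ in range(n_studies)
]
share_significant = sum(p <= 0.05 for p in p_values) / n_studies
print(f"Share of null-true studies with p <= .05: {share_significant:.3f}")
```

Roughly 5% of these studies should reach p ≤ .05 even though the null hypothesis is true in all of them. A small p value therefore describes how unusual the data would be under the null hypothesis, not how probable the null hypothesis is.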

You can avoid this misunderstanding by remembering that the p value is not the probability that any particular hypothesis is true or false. Instead, it is the probability of obtaining a result at least as extreme as the sample result if the null hypothesis were true.

Role of Sample Size and Relationship Strength

Recall that null hypothesis testing involves answering the question, “If the null hypothesis were true, what is the probability of a sample result as extreme as this one?” In other words, “What is the p value?” It can be helpful to see that the answer to this question depends on just two considerations: the strength of the relationship and the size of the sample. Specifically, the stronger the sample relationship and the larger the sample, the less likely the result would be if the null hypothesis were true. That is, the lower the p value. This should make sense. Imagine a study in which a sample of 500 women is compared with a sample of 500 men in terms of some psychological characteristic, and Cohen’s d is a strong 0.50. If there were really no sex difference in the population, then a result this strong based on such a large sample should seem highly unlikely. Now imagine a similar study in which a sample of three women is compared with a sample of three men, and Cohen’s d is a weak 0.10. If there were no sex difference in the population, then a relationship this weak based on such a small sample should seem likely. And this is precisely why the null hypothesis would be rejected in the first example and retained in the second.
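The two examples can be put into rough numbers. The sketch below assumes equal group sizes and uses a normal approximation, z = d·√(n/2), instead of the exact t distribution, so the values are only approximate (especially for the three-per-group case). `approx_p_value` is a hypothetical helper.

```python
from statistics import NormalDist


def approx_p_value(d, n_per_group):
    """Rough two-sided p value for Cohen's d with equal group sizes.

    Assumption: normal approximation, z = d * sqrt(n / 2),
    rather than the exact t distribution.
    """
    z = abs(d) * (n_per_group / 2) ** 0.5
    return 2 * (1 - NormalDist().cdf(z))


for d, n in [(0.50, 500), (0.10, 3)]:
    print(f"d = {d:.2f}, n = {n} per group: p ~ {approx_p_value(d, n):.3g}")
```

Because z grows with both d and the square root of n, either a stronger relationship or a larger sample pushes the p value down.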

Of course, sometimes the result can be weak and the sample large, or the result can be strong and the sample small. In these cases, the two considerations trade off against each other so that a weak result can be statistically significant if the sample is large enough and a strong relationship can be statistically significant even if the sample is small. Table 13.1 shows roughly how relationship strength and sample size combine to determine whether a sample result is statistically significant. The columns of the table represent the three levels of relationship strength: weak, medium, and strong. The rows represent four sample sizes that can be considered small, medium, large, and extra large in the context of psychological research. Thus each cell in the table represents a combination of relationship strength and sample size. If a cell contains the word Yes, then this combination would be statistically significant for both Cohen’s d and Pearson’s r. If it contains the word No, then it would not be statistically significant for either. There is one cell where the decision for d and r would be different and another where it might be different depending on some additional considerations, which are discussed in Section 13.2 “Some Basic Null Hypothesis Tests.”

| Sample Size | Weak | Medium | Strong |
| --- | --- | --- | --- |
| Small (N = 20) | No | No | d = Maybe, r = Yes |
| Medium (N = 50) | No | Yes | Yes |
| Large (N = 100) | d = Yes, r = No | Yes | Yes |
| Extra large (N = 500) | Yes | Yes | Yes |