This course is about projects with real-world data for students in data science. Prerequisite: (statistical) machine learning.

Instructors:

Time and place:

TuTh 3:00-4:20pm, Rm 5510, Lift 25-26, Zoom online, HKUST

Tutorial session: Tu 6:00-6:50pm, Rm 5510, Lift 25-26, Zoom online, HKUST

This term we will be using Piazza for class discussion. The system is designed to get you help quickly and efficiently from classmates and from me. Rather than emailing questions to the teaching staff, I encourage you to post your questions on Piazza. If you have any problems or feedback for the developers, email [email protected].

Find our class page at: https://piazza.com/ust.hk/spring2020/mafs6010u/home

References (textbooks)

An Introduction to Statistical Learning, with applications in R (ISLR). By James, Witten, Hastie, and Tibshirani

ISLR-python, by Jordi Warmenhoven.

ISLR-Python: Labs and Applied, by Matt Caudill.

Manning: Deep Learning with Python, by Francois Chollet [GitHub source in Python 3.6 and Keras 2.0.8]

MIT: Deep Learning, by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

Kaggle Contest: Predict Survival on the Titanic.

Kaggle Contest: Home Credit Default Risk Prediction.

Kaggle Contest: Nexperia Image Classification (Second Stage, on-going).

Kaggle Contest: Nexperia Image Classification (First Stage, finished).

Tutorials: preparation for beginners

Python-Numpy Tutorials by Justin Johnson

scikit-learn Tutorials : An Introduction of Machine Learning in Python

Jupyter Notebook Tutorials

PyTorch Tutorials

Deep Learning: Do-it-yourself with PyTorch , A course at ENS

Tensorflow Tutorials

MXNet Tutorials

Theano Tutorials

statlearning-notebooks , by Sujit Pal, Python implementations of the R labs for the StatLearning: Statistical Learning online course from Stanford taught by Profs Trevor Hastie and Rob Tibshirani.

Homework and Projects:

TBA (To Be Announced)

Teaching Assistant:

Email: Mr. LIANG, Zhicong zliangak (add "AT connect DOT ust DOT hk" afterwards)

| Date | Lecture | Instructor |
|---|---|---|
| 02/09/2021, Tue | Lecture 01: History and Overview of Artificial Intelligence. | Y.Y. |
| 07/09/2021, Tue | Lecture 02: Supervised Learning: Linear Regression with Python | Y.Y. |
| 09/09/2021, Thu | Lecture 03: Linear Classification with Python | Y.Y. |
| 14/09/2021, Tue | Lecture 04: Project 1, Model Assessment and Selection I: Subset, Forward, and Backward Selection | Y.Y. |
| 16/09/2021, Thu | Lecture 05: Model Assessment and Selection II: Ridge, Lasso, and Principal Component Regression | Y.Y. |
| 21/09/2021, Tue | Lecture 06: Decision Trees | Y.Y. |
| 23/09/2021, Thu | Lecture 07: Bagging, Random Forests and Boosting | Y.Y. |
| 28/09/2021, Tue | Lecture 08: Support Vector Machines I | Y.Y. |
| 30/09/2021, Thu | Lecture 09: Support Vector Machines II | Y.Y. |
| 05/10/2021, Tue | Lecture 10: An Introduction to Convolutional Neural Networks | Y.Y. |
| 07/10/2021, Thu | Lecture 11: Examples of Convolutional Neural Networks. | Y.Y. |
| 12/10/2021, Tue | Lecture 12: Seminar | Y.Y. |
| 19/10/2021, Tue | Lecture 13: Seminar. Model Selection and Regularization on Prediction of Survival on the Titanic | Y.Y. |
| 21/10/2021, Thu | Lecture 14: Seminar and Project 2. Machine Learning Basics. Kaggle Contest: Home Credit Default Risk | Y.Y. |
| 26/10/2021, Tue | Lecture 15: An Introduction to Recurrent Neural Networks (RNN) | Y.Y. |
| 28/10/2021, Thu | Lecture 16: Long-Short-Term-Memory (LSTM) | Y.Y. |
| 02/11/2021, Tue | Lecture 17: Attention and Transformer | Y.Y. |
| 04/11/2021, Thu | Lecture 18: BERT (Bidirectional Encoder Representations from Transformers). [...] of the group reports that you reviewed, and please send your changes of ratings if any. | Y.Y. |
| 09/11/2021, Tue | Lecture 19: An Introduction to Reinforcement Learning and Deep Q-Learning | Y.Y. |
| 11/11/2021, Thu | Lecture 20: An Introduction to Reinforcement Learning: Policy Gradient and Actor-Critic Methods | Y.Y. |
| 16/11/2021, Tue | Lecture 21: An Introduction to Unsupervised Learning: PCA, AutoEncoder, VAE, and GANs | Y.Y. |
| 18/11/2021, Thu | Lecture 22: Seminar. Limitations of Translation: How much translation affect the analysis of Chinese text in different models? | Y.Y. |
| 23/11/2021, Tue | Lecture 23: Seminar. Pawpularity Prediction. | Y.Y. |
| 25/11/2021, Thu | Lecture 24: Seminar. G-Research Crypto Forecasting (Kaggle) | Y.Y. |
| 30/11/2021, Tue | Lecture 25: Seminar. Workers Supervision for Construction Safety | Y.Y. |
| | Lecture 08: Bagging, Random Forests and Boosting | Y.Y. |
| 10/11/2020, Tue | Lecture 17: Topics in CNN: Visualization, Transfer Learning | Y.Y. |
| 12/11/2020, Thu | Lecture 18: Topics in CNN: Visualization, Transfer Learning, Neural Style, and Adversarial Examples | Y.Y. |
| 05/19/2020, Tue | Lecture 13: Tutorial on Reinforcement Learning in Quantitative Trading [...] and Anthony Woo | Weizhi ZHU, A.W., Y.Y. |
| 03/8/2019, Fri | Lecture 05: Tutorials | Yifei Huang; Katrina Fong; Anthony Woo |
| 03/15/2019, Fri | Lecture 06: Topics in Blockchains. Dr. Alex Yang, CEO, VEE Technology LLC, and Dr. Chen NING. This is a brief introduction of Blockchain consensus and its current application in Finance, vision and outlook of Blockchain in Fintech. Dr. Alex Yang is a FinTech entrepreneur/investor with over 14 years of experience in banking and finance. VEE Technology is led by Sunny King, a legendary blockchain developer and creator of Proof-of-Stake consensus. As CEO of VEE Tech, Alex is driving the project to solve the core scalability and stability problems in the development of the blockchain industry. His deep experience of the industry has been gained through his investing activity where he has sponsored many world-leading blockchain foundations. Prior to his role at VEE, Alex was the founder and CEO of Fund V, one of the first token funds to focus on blockchain companies and related investment opportunities. He was also the founding partner of Beam VC and CyberCarrier Capital which together have successfully invested in over 30 startups in the TMT sector. Alex is a founding partner of Protoss Global Opportunity Fund, a fixed income hedge fund based in Hong Kong. Prior to moving into venture capital investing, Alex was based in Hong Kong as head of APAC structured rates trading at Nomura International, and VP of exotic derivatives trading at UBS. He started his career as a quantitative developer at Jump Trading in Chicago. Alex has a PhD from Northwestern University and a BA in Mathematics from Peking University. | A.W., Y.Y. |
| 05/03/2019, Fri | Seminar: Investment Trends and FinTech Outlook: Sales and Trading Business in Global Investment Banks Ripe for Disruption by AI? Mr. Christopher Lee. Mr. Chris Lee is a partner at FAA Investments and a board director with expertise in financial markets, risk management, governance and leadership development. Currently, he serves as an Independent Board Member with Matthews Asia Funds (AUM: US$30.2 billion), the largest US investment company with a focus on Asia Pacific markets, and Asian Masters Fund, an investment company listed in Australia. Previously, Chris was an investment banker for 18 years, acting as Managing Director and divisional and regional head at Deutsche Bank AG, UBS Investment Bank and Bank of America Merrill Lynch. He worked in global capital markets, managed derivative products, and provided equity sales and trading functions to institutional investors. Academically, Chris is an associate professor of science practice at HKUST and teaches financial mathematics and risk management courses. He completed the AMP at Harvard University and holds a BS in Mechanical Engineering and an MBA from U.C. Berkeley. | Chris Lee, A.W. |
| 05/10/2019, Fri | Lecture 12: Tutorial on deep learning in Python | Yifei Huang |

DataScienceCapstone

Capstone project for the Johns Hopkins 10-course Data Science Specialization.

The Capstone Project of the Coursera - Johns Hopkins Data Science Specialization. This is course 10 of the 10-course program.

See the GitHub Pages page, Data Science Capstone.

The Project

An analysis of text data and natural language processing delivered as a Data Science Product.

Deliverables

- Data Exploration

- Presentation

Modern Data Science with R

3rd edition (light edits and updates)

Benjamin S. Baumer, Daniel T. Kaplan, and Nicholas J. Horton

July 25, 2024

3rd edition

This is the work-in-progress of the 3rd edition. At present, there are relatively modest changes from the second edition beyond those necessitated by changes in the R ecosystem.

Key changes include:

- Transition to Quarto from RMarkdown

- Transition from magrittr pipe ( %>% ) to base R pipe ( |> )

- Minor updates to specific examples (e.g., updating tables scraped from Wikipedia) and code (e.g., new group options within the dplyr package).

At the main website for the book , you will find other reviews, instructor resources, errata, and other information.

Do you see issues or have suggestions? To submit corrections, please visit our website’s public GitHub repository and file an issue.

Known issues with the 3rd edition

This is a work in progress. At present there are a number of known issues:

- nuclear reactors example (6.4.4 Example: Japanese nuclear reactors) needs to be updated to account for Wikipedia changes

- Python code not yet implemented (Chapter 21 Epilogue: Towards “big data”)

- Spark code not yet implemented (Chapter 21 Epilogue: Towards “big data”)

- SQL output captions not working (Chapter 15 Database querying using SQL)

- Open street map geocoding not yet implemented (Chapter 18 Geospatial computations)

- ggmosaic() warnings (Figure 3.19)

- RMarkdown introduction (Appendix D — Reproducible analysis and workflow) not yet converted to Quarto examples

- issues with references in Appendix A — Packages used in the book

- Exercises not yet available (throughout)

- Links have not all been verified (help welcomed here!)

2nd edition

The online version of the 2nd edition of Modern Data Science with R is available. You can purchase the book from CRC Press or from Amazon .

The main website for the book includes more information, including reviews, instructor resources, and errata.

To submit corrections, please visit our website’s public GitHub repository and file an issue.

1st edition

The 1st edition may still be available for purchase. Although much of the material has been updated and improved, the general framework is the same ( reviews ).

© 2021 by Taylor & Francis Group, LLC . Except as permitted under U.S. copyright law, no part of this book may be reprinted, reproduced, transmitted, or utilized in any form by an electronic, mechanical, or other means, now known or hereafter invented, including photocopying, microfilming, and recording, or in any information storage or retrieval system, without written permission from the publishers.

Background and motivation

The increasing volume and sophistication of data poses new challenges for analysts, who need to be able to transform complex data sets to answer important statistical questions. A consensus report on data science for undergraduates ( National Academies of Science, Engineering, and Medicine 2018 ) noted that data science is revolutionizing science and the workplace. They defined a data scientist as “a knowledge worker who is principally occupied with analyzing complex and massive data resources.”

Michael I. Jordan has described data science as the marriage of computational thinking and inferential (statistical) thinking. Without the skills to be able to “wrangle” or “marshal” the increasingly rich and complex data that surround us, analysts will not be able to use these data to make better decisions.

Demand is strong for graduates with these skills. According to the company ratings site Glassdoor, “data scientist” was the best job in America every year from 2016–2019 (Columbus 2019).

New data technologies make it possible to extract data from more sources than ever before. Streamlined data processing libraries enable data scientists to express how to restructure those data into a form suitable for analysis. Database systems facilitate the storage and retrieval of ever-larger collections of data. State-of-the-art workflow tools foster well-documented and reproducible analysis. Modern statistical and machine learning methods allow the analyst to fit and assess models as well as to undertake supervised or unsupervised learning to glean information about the underlying real-world phenomena. Contemporary data science requires tight integration of these statistical, computing, data-related, and communication skills.

Intended audience

This book is intended for readers who want to develop the appropriate skills to tackle complex data science projects and “think with data” (as coined by Diane Lambert of Google). The desire to solve problems using data is at the heart of our approach.

We acknowledge that it is impossible to cover all these topics in any level of detail within a single book: Many of the chapters could productively form the basis for a course or series of courses. Instead, our goal is to lay a foundation for analysis of real-world data and to ensure that analysts see the power of statistics and data analysis. After reading this book, readers will have greatly expanded their skill set for working with these data, and should have a newfound confidence about their ability to learn new technologies on-the-fly.

This book was originally conceived to support a one-semester, 13-week undergraduate course in data science. We have found that the book will be useful for more advanced students in related disciplines, or analysts who want to bolster their data science skills. At the same time, Part I of the book is accessible to a general audience with no programming or statistics experience.

Key features of this book

Focus on case studies and extended examples.

We feature a series of complex, real-world extended case studies and examples from a broad range of application areas, including politics, transportation, sports, environmental science, public health, social media, and entertainment. These rich data sets require the use of sophisticated data extraction techniques, modern data visualization approaches, and refined computational approaches.

Context is king for such questions, and we have structured the book to foster the parallel developments of statistical thinking, data-related skills, and communication. Each chapter focuses on a different extended example with diverse applications, while exercises allow for the development and refinement of the skills learned in that chapter.

The book has three main sections plus supplementary appendices. Part I provides an introduction to data science, which includes an introduction to data visualization, a foundation for data management (or “wrangling”), and ethics. Part II extends key modeling notions from introductory statistics, including regression modeling, classification and prediction, statistical foundations, and simulation. Part III introduces more advanced topics, including interactive data visualization, SQL and relational databases, geospatial data, text mining, and network science.

We conclude with appendices that introduce the book’s R package, R and RStudio, key aspects of algorithmic thinking, reproducible analysis, a review of regression, and how to set up a local SQL database.

The book features extensive cross-referencing (given the inherent connections between topics and approaches).

Supporting materials

In addition to many examples and extended case studies, the book incorporates exercises at the end of each chapter along with supplementary exercises available online. Many of the exercises are quite open-ended, and are designed to allow students to explore their creativity in tackling data science questions. (A solutions manual for instructors is available from the publisher.)

The book website at https://mdsr-book.github.io/mdsr3e includes the table of contents, the full text of each chapter, and bibliography. The instructor’s website at https://mdsr-book.github.io/ contains code samples, supplementary exercises, additional activities, and a list of errata.

Changes in the second edition

Data science moves quickly. A lot has changed since we wrote the first edition. We have updated all chapters to account for many of these changes and to take advantage of state-of-the-art R packages.

First, the chapter on working with geospatial data has been expanded and split into two chapters. The first focuses on working with geospatial data, and the second focuses on geospatial computations. Both chapters now use the sf package and the new geom_sf() function in ggplot2 . These changes allow students to penetrate deeper into the world of geospatial data analysis.

Second, the chapter on tidy data has undergone significant revisions. A new section on list-columns has been added, and the section on iteration has been expanded into a full chapter. This new chapter makes consistent use of the functional programming style provided by the purrr package. These changes help students develop a habit of mind around scalability: if you are copying-and-pasting code more than twice, there is probably a more efficient way to do it.

Third, the chapter on supervised learning has been split into two chapters and updated to use the tidymodels suite of packages. The first chapter now covers model evaluation in generality, while the second introduces several models. The tidymodels ecosystem provides a consistent syntax for fitting, interpreting, and evaluating a wide variety of machine learning models, all in a manner that is consistent with the tidyverse . These changes significantly reduce the cognitive overhead of the code in this chapter.

The content of several other chapters has undergone more minor—but nonetheless substantive—revisions. All of the code in the book has been revised to adhere more closely to the tidyverse syntax and style. Exercises and solutions from the first edition have been revised, and new exercises have been added. The code from each chapter is now available on the book website. The book has been ported to bookdown , so that a full version can be found online at https://mdsr-book.github.io/mdsr2e .

Key role of technology

While many tools can be used effectively to undertake data science, and the technologies to undertake analyses are quickly changing, R and Python have emerged as two powerful and extensible environments. While it is important for data scientists to be able to use multiple technologies for their analyses, we have chosen to focus on the use of R and RStudio (an open source integrated development environment created by Posit) to avoid cognitive overload. We describe a powerful and coherent set of tools that can be introduced within the confines of a single semester and that provide a foundation for data wrangling and exploration.

We take full advantage of the RStudio environment. This powerful and easy-to-use front end adds innumerable features to R including package support, code-completion, integrated help, a debugger, and other coding tools. In our experience, the use of RStudio dramatically increases the productivity of R users, and by tightly integrating reproducible analysis tools, helps avoid error-prone “cut-and-paste” workflows. Our students and colleagues find RStudio to be an accessible interface. No prior knowledge or experience with R or RStudio is required: we include an introduction within the Appendix.

As noted earlier, we have comprehensively integrated many substantial improvements in the tidyverse, an opinionated set of packages that provide a more consistent interface to R (Wickham 2023). Many of the design decisions embedded in the tidyverse packages address issues that have traditionally complicated the use of R for data analysis. These decisions allow novice users to make headway more quickly and develop good habits.

We used a reproducible analysis system (knitr) to generate the example code and output in this book. Code extracted from these files is provided on the book’s website. We provide a detailed discussion of the philosophy and use of these systems. In particular, we feel that the knitr and rmarkdown packages for R, which are tightly integrated with Posit’s RStudio IDE, should become a part of every R user’s toolbox. We can’t imagine working on a project without them (and we’ve incorporated reproducibility into all of our courses).

Modern data science is a team sport. To be able to fully engage, analysts must be able to pose a question, seek out data to address it, ingest this into a computing environment, model and explore, then communicate results. This is an iterative process that requires a blend of statistics and computing skills.

How to use this book

The material from this book has supported several courses to date at Amherst, Smith, and Macalester Colleges, as well as many others around the world. From our personal experience, this includes an intermediate course in data science (in 2013 and 2014 at Smith College and since 2017 at Amherst College), an introductory course in data science (since 2016 at Smith), and a capstone course in advanced data analysis (multiple years at Amherst).

The introductory data science course at Smith has no prerequisites and includes the following subset of material:

- Data Visualization: three weeks, covering Chapters 1 Prologue: Why data science? – 3 A grammar for graphics

- Data Wrangling: five weeks, covering Chapters 4 Data wrangling on one table – 7 Iteration

- Ethics: one week, covering Chapter 8 Data science ethics

- Database Querying: two weeks, covering Chapter 15 Database querying using SQL

- Geospatial Data: two weeks, covering Chapter 17 Working with geospatial data and part of Chapter 18 Geospatial computations

An intermediate course at Amherst followed the approach of Baumer (2015), with a prerequisite of some statistics and some computer science and an integrated final project. The course generally covers the following chapters:

- Data Visualization: two weeks, covering Chapters 1 Prologue: Why data science? – 3 A grammar for graphics and 14 Dynamic and customized data graphics

- Data Wrangling: four weeks, covering Chapters 4 Data wrangling on one table – 7 Iteration

- Unsupervised Learning: one week, covering Chapter 12 Unsupervised learning

- Database Querying: one week, covering Chapter 15 Database querying using SQL

- Geospatial Data: one week, covering Chapter 17 Working with geospatial data and some of Chapter 18 Geospatial computations

- Text Mining: one week, covering Chapter 19 Text as data

- Network Science: one week, covering Chapter 20 Network science

The capstone course at Amherst reviewed much of that material in more depth:

- Data Visualization: three weeks, covering Chapters 1 Prologue: Why data science? – 3 A grammar for graphics and Chapter 14 Dynamic and customized data graphics

- Data Wrangling: two weeks, covering Chapters 4 Data wrangling on one table – 7 Iteration

- Simulation: one week, covering Chapter 13 Simulation

- Statistical Learning: two weeks, covering Chapters 10 Predictive modeling – 12 Unsupervised learning

- Databases: one week, covering Chapter 15 Database querying using SQL and Appendix F — Setting up a database server

- Spatial Data: one week, covering Chapter 17 Working with geospatial data

- Big Data: one week, covering Chapter 21 Epilogue: Towards “big data”

We anticipate that this book could serve as the primary text for a variety of other courses, such as a Data Science 2 course, with or without additional supplementary material.

The content in Part I, particularly the ggplot2 visualization concepts presented in Chapter 3 A grammar for graphics and the dplyr data wrangling operations presented in Chapter 4 Data wrangling on one table, is fundamental and is assumed in Parts II and III. Each of the topics in Part III is independent of the others and of the material in Part II. Thus, while most instructors will want to cover most (if not all) of Part I in any course, the material in Parts II and III can be added with almost total freedom.

The material in Part II is designed to expose students with a beginner’s understanding of statistics (i.e., basic inference and linear regression) to a richer world of statistical modeling and statistical inference.

Acknowledgments

We would like to thank John Kimmel at Informa CRC/Chapman and Hall for his support and guidance. We also thank Jim Albert, Nancy Boynton, Jon Caris, Mine Çetinkaya-Rundel, Jonathan Che, Patrick Frenett, Scott Gilman, Maria-Cristiana Gîrjău, Johanna Hardin, Alana Horton, John Horton, Kinari Horton, Azka Javaid, Andrew Kim, Eunice Kim, Caroline Kusiak, Ken Kleinman, Priscilla (Wencong) Li, Amelia McNamara, Melody Owen, Randall Pruim, Tanya Riseman, Gabriel Sosa, Katie St. Clair, Amy Wagaman, Susan (Xiaofei) Wang, Hadley Wickham, J. J. Allaire and the Posit (formerly RStudio) developers, the anonymous reviewers, multiple classes at Smith and Amherst Colleges, and many others for contributions to the R and RStudio environment, comments, guidance, and/or helpful suggestions on drafts of the manuscript. Rose Porta was instrumental in proofreading and easing the transition from Sweave to R Markdown. Jessica Yu converted and tagged most of the exercises from the first edition to the new format based on etude.

Above all we greatly appreciate Cory, Maya, and Julia for their patience and support.

Northampton, MA and St. Paul, MN August, 2023 (third edition [light edits and updates])

Northampton, MA and St. Paul, MN December, 2020 (second edition)

🏥👩🏽⚕️ Data Science Course Capstone Project - Healthcare domain - Diabetes Detection

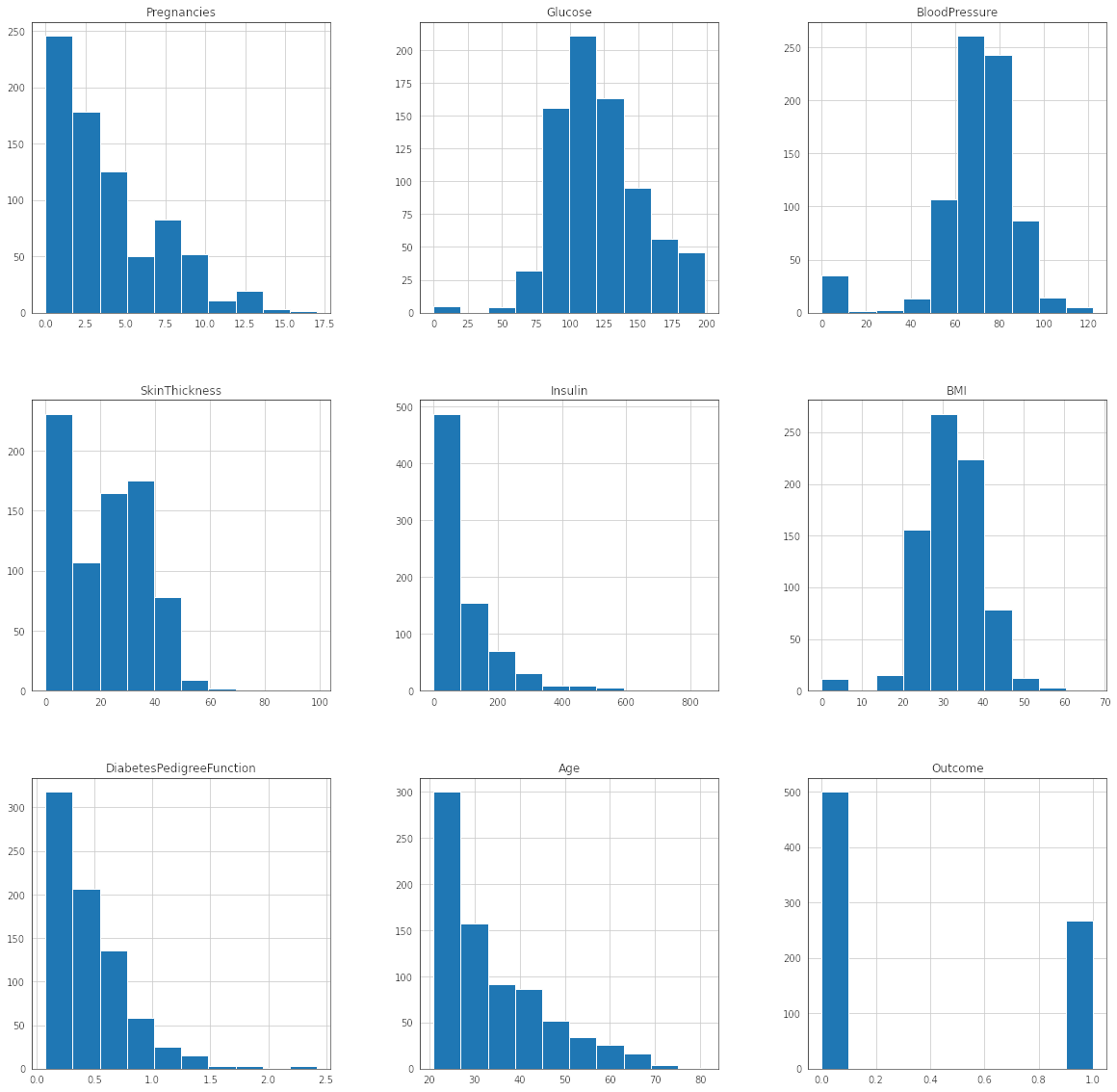

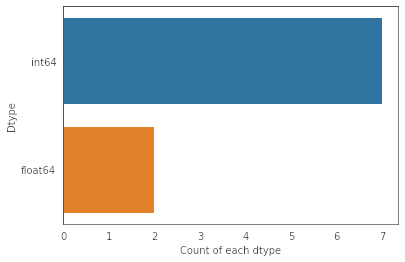

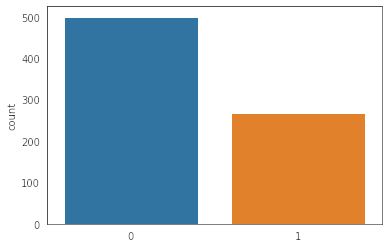

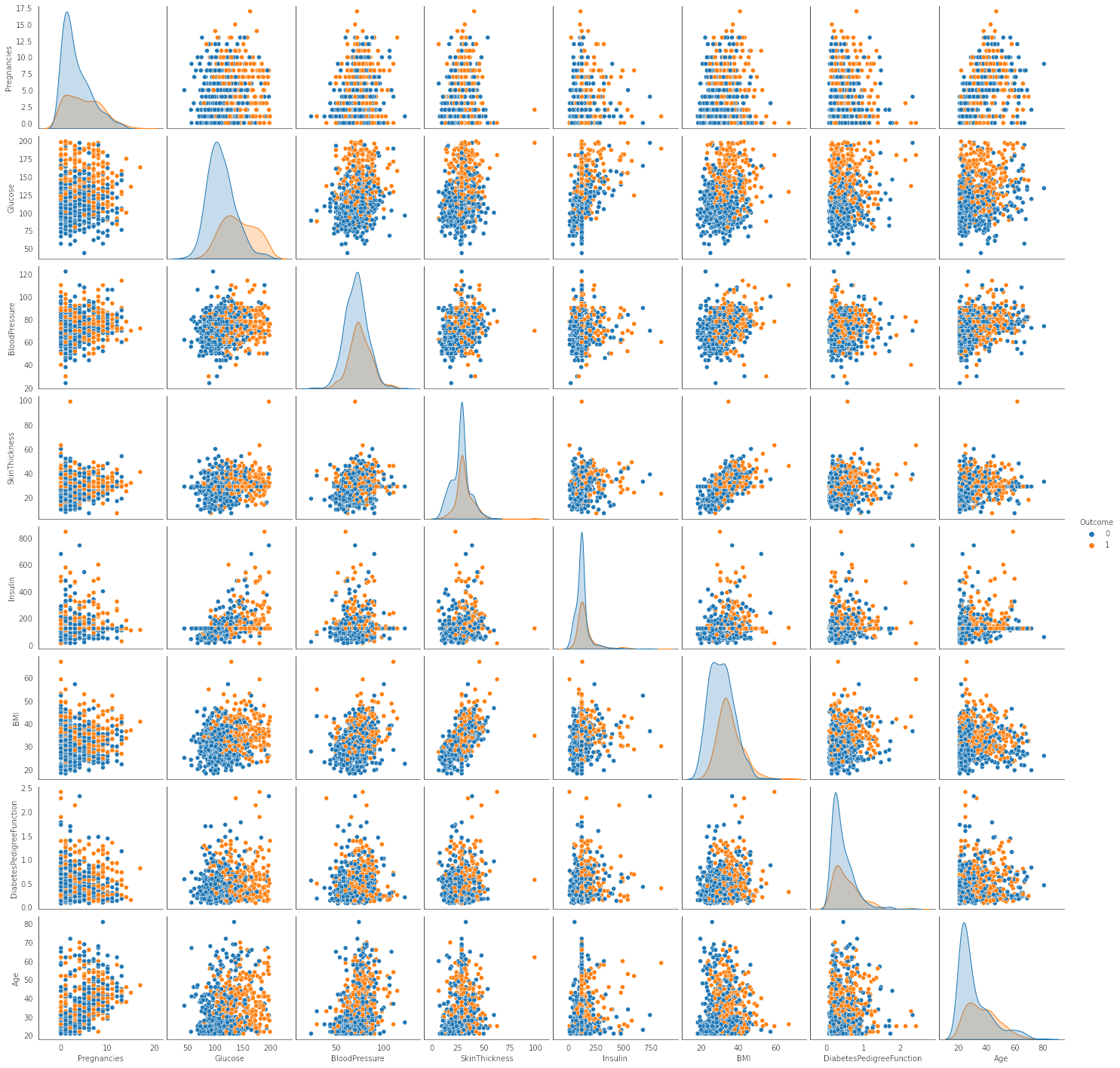

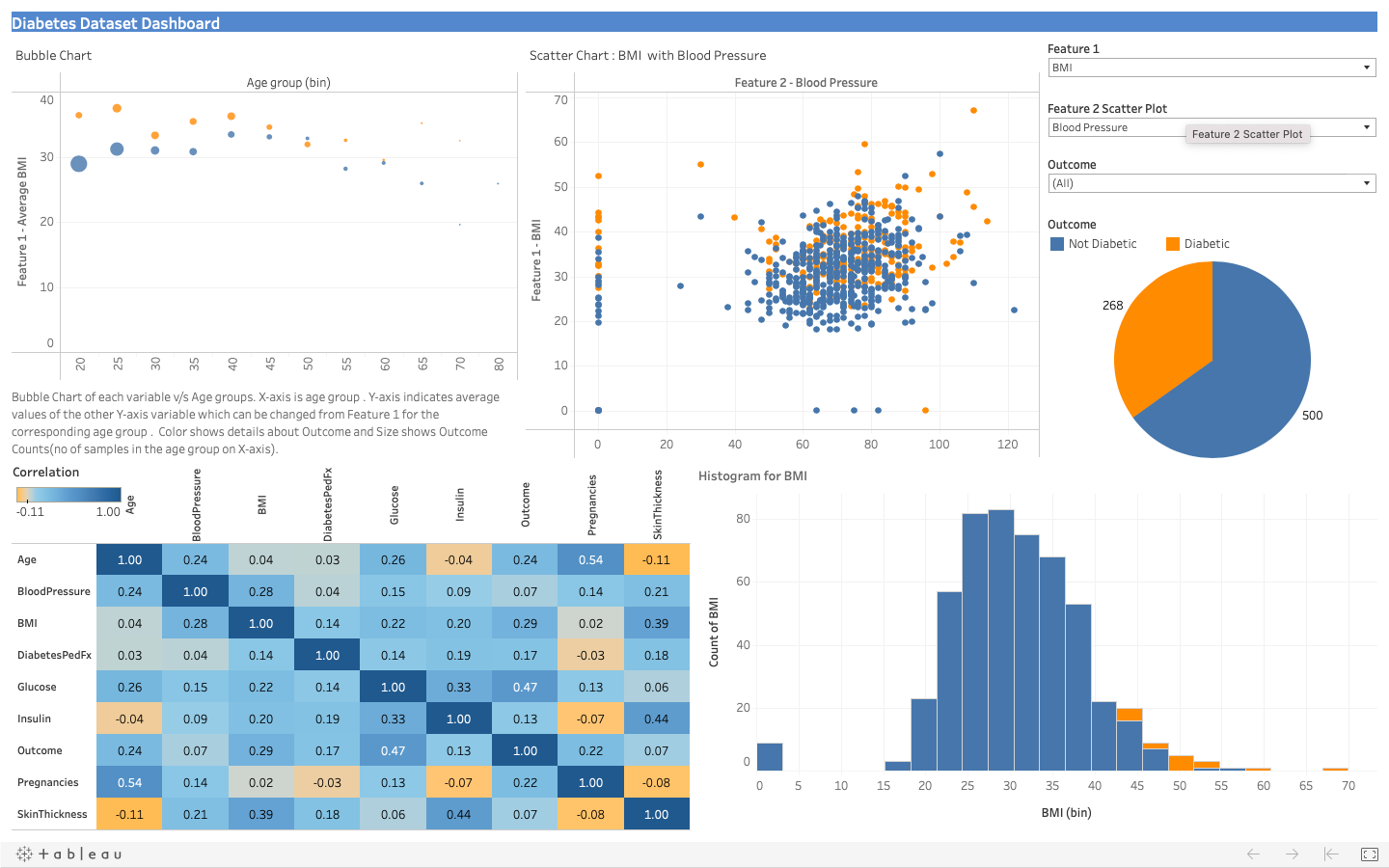

This is a comprehensive project completed by me as part of the Data Science Post Graduate Programme. The project applies multiple classification algorithms to a dataset of health and diagnostic variables to predict whether or not a person has diabetes. Apart from extensive EDA to understand the distribution and other aspects of the data, pre-processing was done to identify values that were missing or did not make sense within certain columns, and imputation techniques were used to treat the missing values. The balance of classes was also reviewed and treated using SMOTE. Finally, models were built using various classification algorithms and compared on several metrics. Lastly, the project contains a dashboard on the original data built with Tableau.

You can view the full project code on this Github link

Note: This is an academic project completed by me as part of my Post Graduate program in Data Science from Purdue University through Simplilearn. This project was towards final course completion.

Business Scenario

This dataset is originally from the National Institute of Diabetes and Digestive and Kidney Diseases. The objective of the dataset is to diagnostically predict whether or not a patient has diabetes, based on certain diagnostic measurements included in the dataset. Several constraints were placed on the selection of these instances from a larger database. In particular, all patients here are females at least 21 years old of Pima Indian heritage.

Build a model to accurately predict whether the patients in the dataset have diabetes or not.

Analysis Steps

Data cleaning and exploratory data analysis

There are integer as well as float data-type of variables in this dataset. Create a count (frequency) plot describing the data types and the count of variables.

Check the balance of the data (to review imbalanced classes for the classification problem) by plotting the count of outcomes by their value. Review findings and plan future course of actions.

We notice that there is class imbalance. The diabetic class (1) is the minority class, with 35% of the samples, while the non-diabetic class (0) accounts for the remaining 65%. We need to balance the data, either by oversampling the minority class or by undersampling the majority class, so that the model is balanced across both classes. We can apply the SMOTE (Synthetic Minority Oversampling Technique) method, which oversamples the minority class (class 1, diabetic), because we want the model to predict more accurately when an individual has diabetes.
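To make the oversampling idea concrete, here is a minimal pure-Python sketch of SMOTE-style interpolation. It is not the project's actual code (which is not shown here); the function name `smote_like_oversample`, the toy `(glucose, BMI)` values, and the choice of `k` are all assumptions made for illustration.

```python
import math
import random


def smote_like_oversample(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between a
    minority-class point and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the chosen point (excluding itself)
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> other
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic


# Hypothetical toy data: (glucose, BMI) pairs, for illustration only
majority = [(85.0, 24.0), (90.0, 26.5), (99.0, 23.1),
            (105.0, 28.0), (110.0, 25.2), (95.0, 27.3)]
minority = [(150.0, 33.0), (165.0, 35.5), (172.0, 31.2)]

new_points = smote_like_oversample(minority, n_new=len(majority) - len(minority), k=2)
balanced_minority = minority + new_points
print(len(majority), len(balanced_minority))
```

Because each synthetic point lies on a segment between two real minority points, it stays inside the range of the observed minority values. Oversampling of this kind is normally applied to the training split only, so that evaluation data remains untouched.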

Create scatter charts between the pair of variables to understand the relationships. Describe findings.

We review scatter charts to analyse the inter-relations between the variables and observe the following:

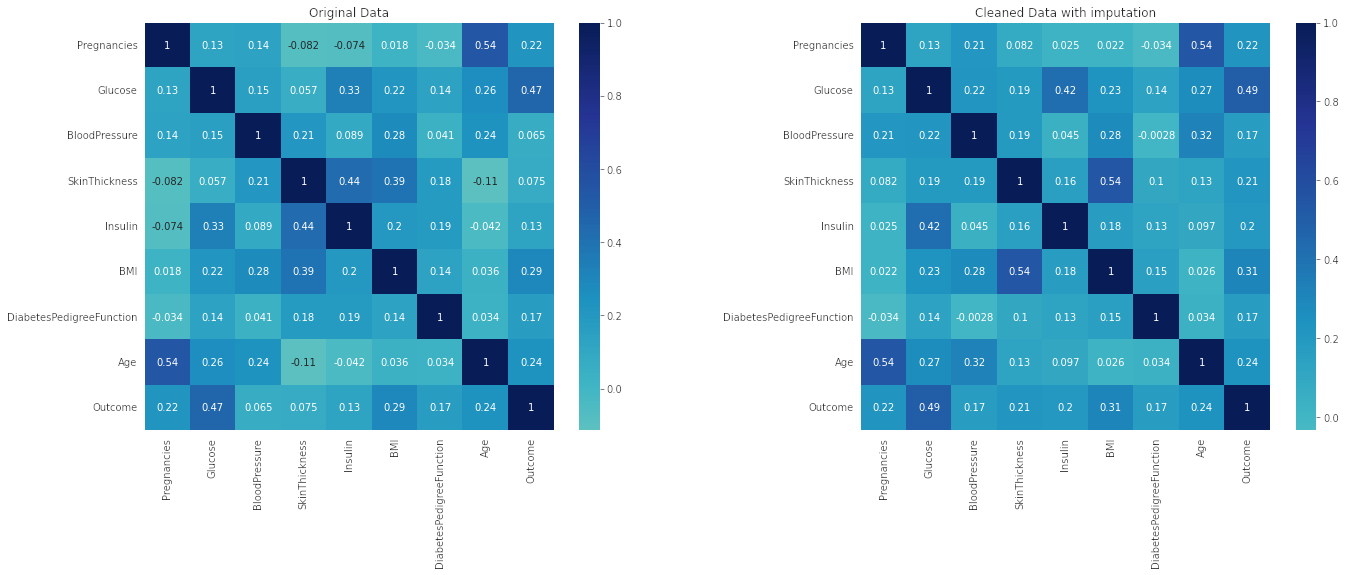

Perform correlation analysis. Visually explore it using a heat map.

Observation: As mentioned in the pairplot analysis, the variable Glucose has the highest correlation with the outcome.
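As a rough illustration of what the correlation analysis measures, the following sketch computes the Pearson correlation of each feature with the outcome and picks the strongest one. The column values are hypothetical toy numbers, not the Pima dataset, and `pearson` is a helper written for this example.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Hypothetical toy columns, for illustration only
data = {
    "Glucose": [85, 168, 90, 150, 110, 172],
    "BMI": [26.0, 30.1, 33.0, 24.5, 28.2, 31.0],
    "Outcome": [0, 1, 0, 1, 0, 1],
}

corr_with_outcome = {
    name: pearson(column, data["Outcome"])
    for name, column in data.items()
    if name != "Outcome"
}
# Feature with the largest absolute correlation to the outcome
strongest = max(corr_with_outcome, key=lambda name: abs(corr_with_outcome[name]))
print(strongest, round(corr_with_outcome[strongest], 3))
```

A heat map is simply a coloured rendering of the full matrix of such pairwise coefficients.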

Model Building

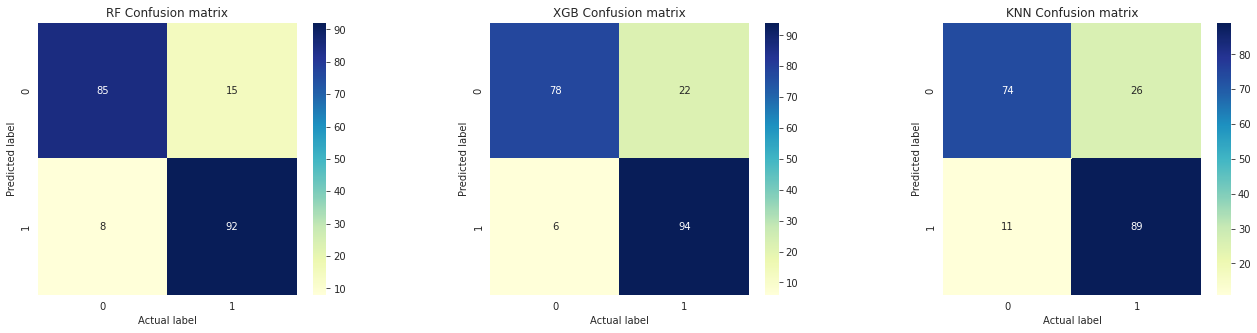

| Model | AUC | Sensitivity | Specificity |
|---|---|---|---|
| RF | 94.260 | 92.0 | 85.0 |
| XGB | 93.010 | 94.0 | 78.0 |
| KNN | 89.555 | 89.0 | 74.0 |
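The three metrics in the table can be sketched in a few lines. The example below assumes binary labels (1 = diabetic), a hypothetical 0.5 decision threshold, and made-up scores; it computes AUC as the fraction of positive/negative pairs that the model ranks correctly, which is one standard way to define it.

```python
def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(y_true, scores):
    """Probability that a random positive scores higher than a random negative
    (ties count as half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels and predicted probabilities, for illustration only
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.2, 0.1, 0.7]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

sens, spec = sensitivity_specificity(y_true, y_pred)
print(sens, spec, auc(y_true, scores))
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarises ranking quality across all thresholds.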

Note: The ROC (Receiver Operating Characteristic) curve shows how well the model can distinguish between two classes (e.g., whether or not a patient has a disease). Better models distinguish between the two accurately, whereas a poor model has difficulty doing so. This is quantified by the AUC score.

Final Analysis

Based on the classification report:

Data Reporting

Tools used:

This project was completed in Python using Jupyter notebooks. Common libraries used for analysis include numpy, pandas, scikit-learn, matplotlib, seaborn, and xgboost.

Further Reading

🏡🏷️ California Housing Price Prediction using Linear Regression in Python

Summary- The project includes analysis on the California Housing Dataset with some Exploratory data analysis . There was encoding of categorical data using the one-hot encoding present in pandas. ...

🔎📊 Principal Component Analysis with XGBoost Regression in Python

Summary- This project is based on data from the Mercedes Benz test bench for vehicles at the testing and quality assurance phase during the production cycle. The dataset consists of high number of...

💬⚙️ NLP Project - Phone Review Analysis and Topic Modeling with Latent Dirichlet Allocation in Python

Summary- This is a Natural Language Processing project which includes analysis of buyer’s reviews/comments of a popular mobile phone by Lenovo from an e-commerce website. Analysis done for the proj...

Data Science Capstone Project: Milestone Report

Alexey Serdyuk

Table of contents

- Prerequisites
- Obtaining the data
- Splitting the data
- First glance on the data and general plan
- Cleaning up and preprocessing the corpus
- Analyzing words (1-grams)
- Analyzing bigrams
- Pruning bigrams
- 3-grams to 6-grams
- Conclusions and next steps

This is a milestone report for Week 2 of the capstone project for the Data Science Specialization, a cycle of courses offered on Coursera by Johns Hopkins University.

The purpose of the capstone project is to build a Natural Language Processing (NLP) application that, given a chunk of text, predicts the next most probable word. The application may be used, for example, on mobile devices to provide suggestions as the user types in some text.
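To illustrate the prediction task itself, here is a minimal bigram sketch in Python. It is not the report's R implementation; the whitespace tokenizer, the function names `train_bigrams` and `predict_next`, and the four-sentence corpus are assumptions chosen to keep the example small.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for every word, which words follow it and how often."""
    model = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for previous, following in zip(tokens, tokens[1:]):
            model[previous][following] += 1
    return model


def predict_next(model, text):
    """Return the most frequent follower of the last word, or None if unseen."""
    tokens = text.lower().split()
    if not tokens or tokens[-1] not in model:
        return None
    return model[tokens[-1]].most_common(1)[0][0]


# Hypothetical miniature corpus, for illustration only
corpus = [
    "thanks for the follow",
    "thanks for the help",
    "thanks for the help today",
    "see you at the game",
]
model = train_bigrams(corpus)
print(predict_next(model, "Thanks for the"))
```

A practical predictor would also use longer n-grams and fall back to shorter ones when the longer context has not been seen.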

In this report we will provide initial analysis of the data, as well as discuss approach to building the application.

An important question is which library to use for processing and analyzing the corpora, as R provides several alternatives. Initially we attempted to use the library tm , but quickly found that the library is very memory-hungry, and an attempt to build bi- or trigrams for a large corpus are not practical. After some googling we decided to use the library quanteda instead.

We start by loading required libraries.

To speed up processing of large data sets, we apply the parallel version of the lapply function from the library parallel. To use all available resources, we detect the number of CPU cores and configure the library to use them all.

Here and in several later sections we use caching to speed up rendering of this document. Results of long-running operations are stored and reused during the next run. If you wish to re-run all operations, just remove the cache directory.

We download the data from the URL provided in the course description, and unzip it.

The downloaded zip file contains corpora in several languages: English, German, Russian and Finnish. In our project we will use only English corpora.

The corpus for each language, including English, contains 3 files with content obtained from different sources: news, blogs and Twitter.

As the first step, we will split each relevant file into 3 parts:

- Training set (60%) will be used to build and train the algorithm.

- Testing set (20%) will be used to test the algorithm during its development. This set may be used more than once.

- Validation set (20%) will be used for a final validation and estimation of out-of-sample performance. This set will be used only once.

We define a function which splits the specified file into the parts described above:

To make results reproducible, we set the seed of the random number generator.

Finally, we split each of the data files.
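The split described above can be sketched in Python as follows. This is an illustration only, not the report's R code: the function name `split_lines`, the seed value and the toy input are assumptions made for the example.

```python
import random

def split_lines(lines, fractions=(0.6, 0.2, 0.2), seed=12345):
    """Randomly assign lines to training, testing and validation subsets."""
    rng = random.Random(seed)      # a fixed seed makes the split reproducible
    shuffled = list(lines)
    rng.shuffle(shuffled)
    n = len(shuffled)
    n_train = round(n * fractions[0])
    n_test = round(n * fractions[1])
    return {
        "training": shuffled[:n_train],
        "testing": shuffled[n_train:n_train + n_test],
        # The validation set takes whatever remains, so no line is lost.
        "validation": shuffled[n_train + n_test:],
    }

# Toy input standing in for the lines of one source file.
lines = [f"line {i}" for i in range(1000)]
parts = split_lines(lines)
sizes = {name: len(part) for name, part in parts.items()}

# Sanity check: the parts must add up to the source (expected to be 0).
control = len(lines) - sum(sizes.values())
```

Because the validation set is defined as the remainder, the control value is zero by construction, which mirrors the sanity check performed on the real files.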

As a sanity check, we count the number of lines in each source file, as well as in the partial files produced by the split.

| | Blogs: Rows | Blogs: % | News: Rows | News: % | Twitter: Rows | Twitter: % |

|---|---|---|---|---|---|---|

| Training | 539572 | 59.99991 | 606145 | 59.99998 | 1416088 | 59.99997 |

| Testing | 179858 | 20.00004 | 202048 | 19.99996 | 472030 | 20.00002 |

| Validation | 179858 | 20.00004 | 202049 | 20.00006 | 472030 | 20.00002 |

| Total | 899288 | 100.00000 | 1010242 | 100.00000 | 2360148 | 100.00000 |

| Control (expected to be 0) | 0 | NA | 0 | NA | 0 | NA |

As the table shows, we have split the data into subsets as intended.

In the section above we have already counted the number of lines. Let us load the training data sets and take a look at the first 3 lines of each data set.

We can see that the data contains not only words, but also numbers and punctuation. The punctuation may be non-ASCII (Unicode), as the first example in the blogs sample shows (it contains the character “…”, which is different from 3 ASCII period characters “. . .”). Some lines contain multiple sentences, and we probably have to take this into account.

Here is our plan:

- Split text into sentences.

- Clean up the corpus: remove non-language parts such as e-mail addresses and URLs, etc.

- Preprocess the corpus: remove punctuation and numbers, change all words to lower-case.

- Analyze distribution of words to decide if we should base our prediction on the full dictionary, or just on some sub-set of it.

- Analyze n-grams for small n.

We decided to split text into sentences and not to attempt to predict words across sentence borders. We may still use information about sentences to improve prediction of the first word, because the frequency of the first word in a sentence may be very different from the average frequency.

The libraries contain some functions for cleaning up and pre-processing, but for some steps we have to write functions ourselves.

Now we pre-process the data.
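A minimal Python sketch of the first three steps of the plan (sentence splitting, clean-up, pre-processing) is shown below. It is not the report's R/quanteda code; the regular expressions and function names are simplified assumptions chosen for illustration, and a real corpus needs more careful rules.

```python
import re

# Simplified, hypothetical patterns; real URLs and e-mail addresses vary more.
URL_RE = re.compile(r"(?:https?://|www\.)\S+")
EMAIL_RE = re.compile(r"\S+@\S+\.\S+")
# A sentence ends with ".", "!", "?" or the Unicode ellipsis, followed by whitespace.
SENTENCE_END_RE = re.compile(r"(?<=[.!?…])\s+")

def split_sentences(text):
    """Split a line of text into sentences."""
    return [s for s in SENTENCE_END_RE.split(text.strip()) if s]

def clean(sentence):
    """Remove non-language parts such as URLs and e-mail addresses."""
    sentence = URL_RE.sub(" ", sentence)
    return EMAIL_RE.sub(" ", sentence)

def preprocess(sentence):
    """Lower-case, drop numbers and punctuation, return a list of words."""
    sentence = sentence.lower().replace("’", "'")    # normalize the apostrophe
    sentence = re.sub(r"[^a-z'\s]", " ", sentence)   # keep letters and apostrophes
    return sentence.split()

text = "Visit https://example.com now… It costs 5 dollars! Mail me@example.org."
tokens = [preprocess(clean(s)) for s in split_sentences(text)]
```

The example line is split on the Unicode ellipsis as well as on ASCII punctuation, which is the case the blogs sample above illustrates.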

In this section we study the distribution of words in the corpora, ignoring for the moment the interaction between words (n-grams).

We define two helper functions. The first one creates a Document Feature Matrix (DFM) for n-grams in documents, and aggregates it over all documents to a Feature Vector. The second helper function enriches the Feature Vector with additional values useful for our analysis, such as cumulative coverage of the text.

Now we may calculate frequency of words in each source, as well as in all sources together (aggregated).
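The two helper functions can be approximated in Python with `collections.Counter`. This is a sketch of the idea rather than the report's quanteda-based implementation; the names `feature_vector` and `enrich` and the toy sentences are assumptions.

```python
from collections import Counter

def feature_vector(sentences):
    """Aggregate word counts over all tokenized sentences."""
    return Counter(token for sentence in sentences for token in sentence)

def enrich(counts):
    """Add rank and cumulative coverage (in percent) to each feature."""
    total = sum(counts.values())
    rows = []
    cumulative = 0
    # Sort by decreasing frequency; ties are broken alphabetically.
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    for rank, (feature, freq) in enumerate(ordered, start=1):
        cumulative += freq
        rows.append({
            "rank": rank,
            "feature": feature,
            "freq": freq,
            "coverage": 100.0 * cumulative / total,
        })
    return rows

sentences = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "end"]]
rows = enrich(feature_vector(sentences))
```

In this toy corpus the single most frequent word already covers 37.5% of all tokens, which is the kind of skew the following charts show on the real data.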

The following chart displays the 20 most frequent words in each source, as well as in the aggregated corpora.

As we see from the chart, the top 20 most frequent words differ between sources. For example, the most frequent word in news is “said”, but this word is not included in the top-20 list for blogs or Twitter at all. At the same time, some words are shared between the lists: the word “can” is the 2nd most frequent in blogs, the 3rd most frequent in Twitter, and the 5th in news.

Our next step is to analyze the intersection, that is, to find how many words are common to all sources and how many are unique to a particular source. Not only the number of words is important, but also the source coverage, that is, what percentage of the whole text of a particular source is covered by a particular subset of all words.

The following Venn diagram shows the number of unique words (stems) used in each source, as well as the percentage of the aggregated corpora covered by those words.

As we can see, 46686 words are shared by all 3 corpora, and those words cover 97.46% of the aggregated corpora. On the other hand, there are 83185 words unique to blogs, but these words appear very infrequently, covering just 0.43% of the aggregated corpora.

The Venn diagram indicates that we may get a high coverage of all corpora by choosing common words. Coverage by words specific to a particular corpus is negligible.
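The numbers behind such a Venn diagram can be computed as sketched below in Python. The function `venn_regions` and the toy counts are hypothetical and only illustrate the calculation: each word is assigned to the region formed by the set of sources that contain it, and the region's coverage is its share of all tokens in the aggregated corpus.

```python
from collections import Counter

def venn_regions(counts_by_source):
    """Map each region (set of sources) to (number of words, coverage in %)."""
    aggregated = Counter()
    for counts in counts_by_source.values():
        aggregated.update(counts)
    total = sum(aggregated.values())

    regions = {}
    for word, freq in aggregated.items():
        # The region is identified by the sources in which the word occurs.
        members = frozenset(
            name for name, counts in counts_by_source.items() if word in counts
        )
        n_words, n_tokens = regions.get(members, (0, 0))
        regions[members] = (n_words + 1, n_tokens + freq)

    return {
        members: (n_words, 100.0 * n_tokens / total)
        for members, (n_words, n_tokens) in regions.items()
    }

# Toy word counts, not taken from the real corpora.
counts_by_source = {
    "blogs": Counter({"the": 5, "can": 2, "blogging": 1}),
    "news": Counter({"the": 6, "can": 1, "said": 2}),
    "twitter": Counter({"the": 4, "can": 2, "lol": 2}),
}
regions = venn_regions(counts_by_source)
shared = regions[frozenset({"blogs", "news", "twitter"})]
blogs_only = regions[frozenset({"blogs"})]
```

Even in this small example the two shared words cover most of the text, while the word unique to blogs contributes very little.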

The next step in our analysis is to find out how many common words we should choose to achieve a decent coverage of the text. From the Venn diagram we already know that by choosing 46686 words we will cover 97.46% of the aggregated corpora, but perhaps we can reduce the number of words without significantly reducing the coverage.

The following chart shows the number of unique words in each source which cover a particular percentage of the text. For example, the 1000 most frequent words cover 68.09% of the Twitter corpus. An interesting observation is that Twitter requires fewer words to cover a particular percentage of the text, whereas news requires more words.

| Corpora Coverage | Blogs | News | Twitter | Aggregated |

|---|---|---|---|---|

| 75% | 2,004 | 2,171 | 1,539 | 2,136 |

| 90% | 6,395 | 6,718 | 5,325 | 6,941 |

| 95% | 13,369 | 13,689 | 11,922 | 15,002 |

| 99% | 63,110 | 53,294 | 71,575 | 88,267 |

| 99.9% | 149,650 | 126,585 | 161,873 | 302,693 |

The table shows that in order to cover 95% of blogs, we require 13,369 words. The same coverage of news requires 13,689 words, and the coverage of Twitter 11,922 words. To cover 95% of the aggregated corpora, we require 15,002 unique words. We may use this fact later to reduce the number of n-grams required for predictions.
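Each cell of such a table answers the question "how many of the most frequent words are needed to reach a given coverage?". A hedged Python sketch of that lookup follows; the function name `features_needed` and the toy frequencies are assumptions, not values from the report.

```python
from collections import Counter

def features_needed(counts, target_percent):
    """Smallest number of most frequent features covering target_percent of tokens."""
    total = sum(counts.values())
    covered = 0
    for n, freq in enumerate(sorted(counts.values(), reverse=True), start=1):
        covered += freq
        if 100.0 * covered / total >= target_percent:
            return n
    return len(counts)

# Toy frequencies summing to 100, so each count is also a percentage.
counts = Counter({"the": 50, "of": 25, "cat": 15, "dog": 5, "zebra": 3, "quokka": 2})
needed = {target: features_needed(counts, target) for target in (75, 90, 95, 99)}
```

The last few percent of coverage are the expensive ones: going from 95% to 99% requires the two rarest words in this example, just as the real table shows a steep increase between 95% and 99.9%.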

In this section we study the distribution of bigrams, that is, combinations of two words.

Using previously defined functions, we may calculate frequency of bigrams in each source, as well as in all sources together (aggregated).
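For illustration, counting bigrams per sentence can be sketched in Python as below. The helper names `ngrams` and `count_ngrams` are assumptions; the report itself relies on its R helper functions. Because n-grams are built inside each sentence separately, no n-gram crosses a sentence border.

```python
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def count_ngrams(sentences, n):
    """Count n-grams over tokenized sentences without crossing sentence borders."""
    counts = Counter()
    for tokens in sentences:
        counts.update(ngrams(tokens, n))
    return counts

sentences = [
    ["good", "luck", "this", "year"],
    ["last", "year"],
    ["good", "luck"],
]
bigram_counts = count_ngrams(sentences, 2)
```

Here `("good", "luck")` is counted twice, while `("year", "last")` never appears because the two words belong to different sentences.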

The following chart displays the 20 most frequent bigrams in each source, as well as in the aggregated corpora.

We immediately see a difference from the lists of top 20 words: many more words were common to the sources than bigrams are. There are still some common bigrams, but the intersection is smaller.

As we did with words, we now analyze intersections, that is, we find how many bigrams are common to all sources and how many are unique to a particular source. We also calculate the percentage of the whole source covered by a particular subset of all bigrams.

The following Venn diagram shows the number of unique bigrams used in each source, as well as the percentage of the aggregated corpora covered by those bigrams.

The difference between words and bigrams is even more pronounced here. Bigrams common to all sources cover just 46.23% of the text, compared to more than 95% covered by words common to all sources.

The next step in our analysis is to find out how many common bigrams we should choose to achieve a decent coverage of the text.

The following chart shows the number of unique bigrams in each source which cover a particular percentage of the text. For example, the 1000 most frequent bigrams cover 8.66% of the Twitter corpus.

| Corpora Coverage | Blogs | News | Twitter | Aggregated |

|---|---|---|---|---|

| 75% | 1,945,493 | 1,810,320 | 1,154,697 | 2,697,841 |

| 90% | 3,449,146 | 3,393,854 | 2,329,516 | 6,772,302 |

| 95% | 3,950,364 | 3,921,699 | 2,721,122 | 8,192,971 |

| 99% | 4,351,338 | 4,343,975 | 3,034,407 | 9,329,506 |

| 99.9% | 4,441,557 | 4,438,987 | 3,104,896 | 9,585,226 |

The table shows that in order to cover 95% of blogs, we require 3,950,364 bigrams. The same coverage of news requires 3,921,699 bigrams, and the coverage of Twitter 2,721,122 bigrams. To cover 95% of the aggregated corpora, we require 8,192,971 bigrams.

The chart is also very different from the similar chart for words. The curve for words had an “S”-shape, that is, its growth slowed down after some number of words, so that adding more words resulted in diminishing returns. For bigrams, there is no point of diminishing returns: the curves just keep rising.

As we found in the section Analyzing words (1-grams), our corpora contain \(N_1=\) 335,906 unique word stems. Potentially there could be \(N_1^2=\) 112,832,840,836 bigrams, but we have observed only \(N_2=\) 9,613,640, that is 0.0085% of all possible bigrams. Still, the number of observed bigrams is large. In the section Analyzing words (1-grams) we found that a relatively small number of unique word stems covers a large part of the corpus. In the next section we will see whether we can reduce the number of unique 2-grams by using that knowledge.
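The arithmetic behind these figures, written out with the values quoted above:

\[
N_1^2 = 335{,}906^2 = 112{,}832{,}840{,}836,
\qquad
\frac{N_2}{N_1^2} = \frac{9{,}613{,}640}{112{,}832{,}840{,}836} \approx 8.5 \times 10^{-5} \approx 0.0085\%.
\]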

We found in the section Analyzing words (1-grams) that our corpora contain \(N_1=\) 335,906 unique word stems, but just 15,002 of them cover 95% of the corpus. In this section we analyze whether we can reduce the number of bigrams by using that knowledge.

We will replace rare words with a special token UNK. This reduces the number of bigrams, because different word sequences now produce the same bigram if they contain rare words. For example, our word list contains the names “Andrei”, “Charley” and “Fabio”, but these words do not belong to the subset of most common words required to achieve 95% coverage of the corpus. If our corpus contains the bigrams “Andrei told”, “Charley told” and “Fabio told”, we will replace them all with the bigram “UNK told”.

Since we will apply the same approach to 3-grams, 4-grams etc, to save time we prune the corpora once and save results to files which we may load later.

We start by defining a function that accepts a sequence of words, a white-list and a replacement token. All words in the sentence which are not included in the white-list are replaced by the token.

Now we create a white-list that contains:

- frequent words which cover 95% of the corpus,

- stop-words, that is, function words like “a” or “the” which we excluded from our word frequency analysis,

- special tokens Start-Of-Sentence and End-Of-Sentence introduced earlier.

And now we apply the function defined above to replace all words not included in the white-list with the token UNK .
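The replacement step can be sketched in Python as follows. This is an illustration under assumptions, not the report's R function: `replace_rare` is a hypothetical name and the white-list here is a toy set rather than the real list of frequent words, stop-words and sentence tokens.

```python
def replace_rare(tokens, whitelist, unk="UNK"):
    """Replace every token that is not in the white-list by the UNK token."""
    return [token if token in whitelist else unk for token in tokens]

def bigrams(tokens):
    """Adjacent word pairs of a token list."""
    return list(zip(tokens, tokens[1:]))

sentences = [
    ["andrei", "told", "me"],
    ["charley", "told", "me"],
    ["fabio", "told", "me"],
]
whitelist = {"told", "me"}   # toy white-list; the names are treated as rare words

pruned = [replace_rare(sentence, whitelist) for sentence in sentences]
before = {b for sentence in sentences for b in bigrams(sentence)}
after = {b for sentence in pruned for b in bigrams(sentence)}
```

Before pruning there are four distinct bigrams (three of the form "name told" plus "told me"); after pruning only "UNK told" and "told me" remain.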

After pruning rare words, we re-calculate the bigrams. From now on, we analyze only the aggregated corpus.

The chart shows coverage of the corpora by pruned bigrams, where different types of bigrams are indicated by different colors. The chart also marks several points at which bigrams were encountered a particular number of times. For example, there are 104,625 unique bigrams encountered more than 30 times.

By pruning we have reduced the number of unique bigrams from 9,613,640 to 7,341,432, that is by 23.64%. At this stage it is hard to tell whether pruning makes sense: on one hand, it reduces the number of unique 2-grams and thus the memory requirements of our application; on the other hand, it removes information which may be required to achieve a good prediction rate.

After analyzing bigrams, it is time to take a look at longer n-grams. We decided to analyze 3-grams to 6-grams.

The charts below show coverage of the corpora by pruned 2- to 6-grams, where different colors indicate n-grams with a different number of pruned words (UNK tokens). As for 2-grams, the charts also mark several points at which n-grams were encountered a particular number of times.

As \(n\) grows, the number of repeated n-grams decreases. This property is quite obvious: for example, there are many more common 2-grams (like “last year” or “good luck”) than common 6-grams. A consequence of this property is less obvious, but is clearly visible in the charts: as \(n\) grows, one requires more and more unique n-grams to cover the same percentage of the text. For single words we could choose a small subset that covers 95% of the corpora, but for n-grams achieving high corpora coverage with a small subset is impossible.

| Corpora Coverage | 2-grams | 3-grams | 4-grams | 5-grams | 6-grams |

|---|---|---|---|---|---|

| 25% | 0.27 | 8.40 | 22.18 | 23.75 | 24.07 |

| 50% | 3.06 | 38.82 | 48.12 | 49.17 | 49.38 |

| 75% | 20.12 | 69.41 | 74.06 | 74.58 | 74.69 |

| 95% | 80.34 | 93.88 | 94.81 | 94.92 | 94.94 |

The table above shows the percentage of n-grams required to cover a particular percentage of the aggregated corpus for various \(n\). For example, one requires 3.06% of 2-grams to cover 50% of the corpus, but the same coverage requires 38.82% of 3-grams. As we can see, even for 2-grams we cannot significantly reduce the number of unique n-grams without significantly reducing the coverage as well.
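The quantity tabulated above, the share of unique n-grams needed to reach a given coverage, can be reproduced on a toy corpus with the Python sketch below. The function names and the four example sentences are assumptions; the resulting numbers illustrate the trend only and are unrelated to the real corpora.

```python
from collections import Counter

def count_ngrams(sentences, n):
    """Count n-grams inside each tokenized sentence."""
    counts = Counter()
    for tokens in sentences:
        counts.update(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return counts

def percent_of_ngrams_needed(counts, target_percent):
    """Percentage of unique n-grams (most frequent first) covering target_percent."""
    total = sum(counts.values())
    covered = 0
    for k, freq in enumerate(sorted(counts.values(), reverse=True), start=1):
        covered += freq
        if 100.0 * covered / total >= target_percent:
            return 100.0 * k / len(counts)
    return 100.0

sentences = [
    ["last", "year", "we", "won"],
    ["last", "year", "they", "lost"],
    ["last", "year", "it", "rained"],
    ["last", "year", "i", "moved"],
]
needed = {
    n: percent_of_ngrams_needed(count_ngrams(sentences, n), 50)
    for n in (1, 2, 3)
}
```

In this example 20% of the unique words, about 33% of the unique 2-grams and 50% of the unique 3-grams are needed for 50% coverage, so the required share grows with \(n\) as in the table.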

Conclusions from the data analysis:

- If we keep only the most frequently used words required for 95% coverage of the corpora, we may significantly reduce the number of distinct words, but the number of 2- to 6-grams is not significantly affected.

- To get a decent coverage of the corpora by 2- to 6-grams, we require millions of entries. To reduce memory usage, we probably have to use some encoding scheme. Here we may again return to the idea of keeping only the most frequently used words to reduce the size of the encoded data.

- For small \(n\), many n-grams are encountered in the corpora multiple times, but for large \(n\) most n-grams are encountered just once. This is intuitively obvious: we expect common bigrams such as “last year” or “good luck” to be repeated many times, but the probability that a particular 6-gram repeats is low. When developing a prediction model, we have to test whether it makes sense at all to include n-grams with large \(n\) in the model, since this could overfit the training set without any benefit for the prediction quality.

Open questions:

- Should we include stop-words in our prediction set? On one hand, stop-words are too common and may be considered syntactic “garbage”; on the other hand, they are an important part of a natural language.

- How should we use stemming? On one hand, stemming may reduce the number of n-grams and improve prediction quality. On the other hand, we want to predict full words, not just stems. At the moment we are inclined to stem the words used for the prediction, but not the predicted word. This approach may require a custom implementation of the tokenization algorithm.

- Should we replace rare words with the special token UNK, or should we keep such words?

- Does it make sense to use n-grams for large \(n\), or could we overfit our model with them?

- Should we replace common abbreviations with full text before training our algorithm, or should we keep the abbreviations (for example, AFAIK = “as far as I know”)?

- Should we remove profanity from the corpora, or should we keep it as a part of the natural language? At the moment we are inclined to keep profanity in the dictionary, but never predict it. Keeping profanity in the dictionary may improve the quality of prediction for non-profane words. For example, after the bigram “for fuck’s” we may predict the non-profane word “sake”. If we excluded profanity from our dictionary, we might miss the right prediction in this case.
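The option of keeping profanity in the dictionary but never predicting it can be illustrated with a very small Python sketch. Everything here is an assumption made for the example: the model is a plain "most frequent follower of the last two words" lookup, the function names are hypothetical, and the token `PROFANE` stands in for any blocked word.

```python
from collections import Counter, defaultdict

def build_model(sentences):
    """Map each pair of consecutive words to a counter of the words that follow."""
    followers = defaultdict(Counter)
    for tokens in sentences:
        for i in range(len(tokens) - 2):
            followers[(tokens[i], tokens[i + 1])][tokens[i + 2]] += 1
    return followers

def predict(model, context, blocked=frozenset()):
    """Most frequent follower of the last two words that is not blocked."""
    candidates = model.get(tuple(context[-2:]), Counter())
    # Decreasing frequency, ties broken alphabetically.
    for word, _ in sorted(candidates.items(), key=lambda kv: (-kv[1], kv[0])):
        if word not in blocked:
            return word
    return None

sentences = [
    ["for", "PROFANE", "sake"],
    ["what", "the", "PROFANE"],
    ["what", "the", "PROFANE"],
    ["what", "the", "weather"],
]
model = build_model(sentences)
blocked = frozenset({"PROFANE"})

after_profane_context = predict(model, ["for", "PROFANE"], blocked)
filtered = predict(model, ["what", "the"], blocked)
unfiltered = predict(model, ["what", "the"])
```

The blocked word still serves as context, so "sake" is predicted after it, but it is skipped as a prediction even where it is the most frequent follower.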

To answer most questions above, we have to create several models and run them against a test data set.

Next steps:

- Implement the simplest possible prediction algorithm.

- Test the algorithm on the test data set, analyze quality of prediction and optimize the algorithm.

- Coursera Data Science Capstone Project

- by Ibrahim Hassan

- Last updated over 2 years ago


ryandotjames / Data-Science_Capstone-Project-Report.pdf


| Gist for Data science capstone report. |


This repository contains the ui.R and server.R files for the developed Shiny Application as well as the RStudio Presenter files for the Data Science Capstone Course Project. Data File In order to build a function that can provide word-prediction, a predictive model is needed.

Throughout this Professional Certificate, you will complete hands-on labs and projects to help you gain practical experience with Excel, Cognos Analytics, SQL, and the R programming language and related libraries for data science, including Tidyverse, Tidymodels, R Shiny, ggplot2, Leaflet, and rvest. In the final course in this Professional ...


In this capstone course, you will apply various data science skills and techniques that you have learned as part of the previous courses in the IBM Data Science with R Specialization or IBM Data Analytics with Excel and R Professional Certificate. For this project, you will assume the role of a Data Scientist who has recently joined an ...

A course for students in data science to work on real world data projects with Python and R. See the course information, schedule, homework, projects, and GitHub repositories for reports and source codes.

This repository contains a capstone project for the cycle of courses Data Science Specialization offered on Coursera by Johns Hopkins University. The purpose of the capstone project is to create an R application for predicting the next word as a user types text. The algorithm may be used, for example, in mobile phones to assist typing.

Module 1 - Capstone Overview and Data Collection. Module 2 - Data Wrangling. Module 3: Performing Exploratory Data Analysis with SQL, Tidyverse & ggplot2. At this stage of the Capstone Project, you have gained some valuable working knowledge of data collection and data wrangling. You have also learned a lot about SQL querying and visualization.


EXECUTIVE SUMMARY. This research project investigated the relationship between demand for bike rentals, the weather and date/time. The data collection used web scraping to create CSV files from Wikipedia and the Open Weather API. The data analysis focused on Seoul, South Korea and used SQL queries and a variety of graphs and models.

The Capstone Project of the Coursera - Johns Hopkins Data Science Specialization. This is course 10 of the 10-course program. See the GitHub Pages page, Data Science Capstone. The Project. An analysis of text data and natural language processing delivered as a Data Science Product. Deliverables. Data Exploration; Shiny App; Presentation ...


This project is part of the HarvardX course PH125.9x Data Science: Capstone Project.

Modern data science is a team sport. To be able to fully engage, analysts must be able to pose a question, seek out data to address it, ingest this into a computing environment, model and explore, then communicate results. This is an iterative process that requires a blend of statistics and computing skills.

Confusion Matrix. Since all models performed the same on the test set, the confusion matrix is the same across all models. The models predicted 12 successful landings when the true label was successful landing.

Summary- This is a comprehensive project I completed as part of the Data Science Post Graduate Programme. The project applies multiple classification algorithms to a dataset of health/diagnostic variables to predict whether a person has diabetes or not based on the data points. Apart from extensive EDA to understand the distribution and other aspects of the data, pre-processing was ...


R-code to create the 4-grams (after the data analytics phase) create5grams R-code to create the 5-grams (after the data analytics phase) model1.R R-code with the first model used for (the model used in the final App is the derived and optimized version of this model) plotTestResults.R R-code to plot the results of the model testing

About Data: The corpora are collected from publicly available sources by a web crawler.


Applied Data Science with R - Capstone Project. Matteo Pio Di Bello, 28/02/2023. Executive summary: The project aims to analyze the correlation between weather and bike-sharing demand in urban areas in order to predict the number of bikes rented based on the weather.
